Scientific Session

Machine Learning for Image Reconstruction

Wednesday Parallel 5 Live Q&A | Wednesday, 12 August 2020, 15:15 - 16:00 UTC | Moderator: Fang Liu

0987.

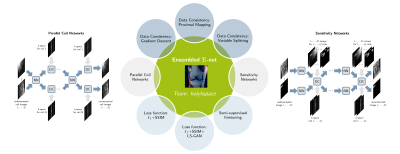

Σ-net: Ensembled Iterative Deep Neural Networks for Accelerated Parallel MR Image Reconstruction

Kerstin Hammernik1, Jo Schlemper1,2, Chen Qin1, Jinming Duan3, Gavin Seegoolam1, Cheng Ouyang1, Ronald M Summers4, and Daniel Rueckert1

1Department of Computing, Imperial College London, London, United Kingdom, 2Hyperfine Research Inc., Guilford, CT, United States, 3School of Computer Science, University of Birmingham, Birmingham, United Kingdom, 4NIH Clinical Center, Bethesda, MD, United States

We propose Σ-net, an ensemble of networks for fast parallel MR image reconstruction that includes parallel coil networks, which perform implicit coil weighting, and sensitivity networks, which use explicit sensitivity maps. The networks in Σ-net are trained with different data-consistency schemes (gradient descent, proximal mapping, and variable splitting) and with a semi-supervised fine-tuning scheme that adapts them to the k-space data at test time. Ensembling all models into Σ-net yields robust, high SSIM scores. At the time of submission, Σ-net was the leading entry on the public fastMRI multicoil leaderboard.
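The abstract names gradient descent, proximal mapping and variable splitting as data-consistency variants. As a rough illustration only, the sketch below implements two of them for a simplified single-coil Cartesian model with a unitary FFT (an assumption: Σ-net itself works on multicoil data), plus a plain average as a hypothetical ensembling rule. The names `proximal_dc`, `gradient_dc` and `ensemble` are illustrative, not the authors' code, and variable splitting is not shown.

```python
import numpy as np


def fft2c(x):
    """Unitary 2D FFT over the last two axes."""
    return np.fft.fft2(x, norm="ortho")


def ifft2c(k):
    """Unitary 2D inverse FFT over the last two axes."""
    return np.fft.ifft2(k, norm="ortho")


def proximal_dc(z, y, mask, lam):
    """Proximal-mapping data consistency (simplified single-coil Cartesian model).

    Solves argmin_x  lam/2 * ||mask * F x - y||^2 + 1/2 * ||x - z||^2.
    Because F is unitary, the solution separates per k-space sample.
    """
    k = (fft2c(z) + lam * mask * y) / (1.0 + lam * mask)
    return ifft2c(k)


def gradient_dc(z, y, mask, step):
    """One gradient-descent step on 1/2 * ||mask * F x - y||^2, starting from z."""
    residual = mask * fft2c(z) - y
    return z - step * ifft2c(mask * residual)


def ensemble(outputs):
    """Combine several reconstructions by a plain average (assumed rule)."""
    return np.mean(np.stack(outputs), axis=0)


# Toy example: random "image", random binary mask, random stand-in for a network output.
rng = np.random.default_rng(0)
x_true = rng.standard_normal((32, 32))
mask = (rng.random((32, 32)) < 0.3).astype(float)
y = mask * fft2c(x_true)
z = rng.standard_normal((32, 32))

x_prox = proximal_dc(z, y, mask, lam=10.0)
x_grad = gradient_dc(z, y, mask, step=1.0)
x_ens = ensemble([x_prox, x_grad])
```

With `step=1.0` and a binary mask, the gradient step reduces to replacing the sampled k-space entries with the measurements, while `lam` in the proximal variant trades off the network output against the data.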

0988.

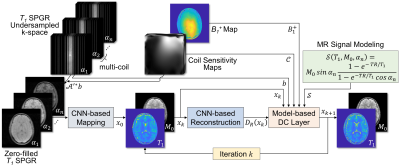

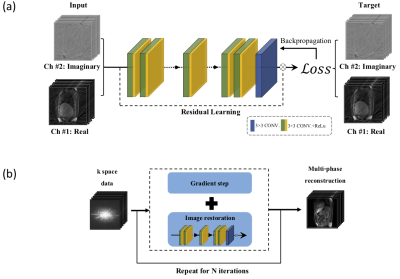

Deep Model-based MR Parameter Mapping Network (DOPAMINE) for Fast MR Reconstruction

Yohan Jun1, Hyungseob Shin1, Taejoon Eo1, Taeseong Kim1, and Dosik Hwang1

1Electrical and Electronic Engineering, Yonsei University, Seoul, Republic of Korea

In this study, a deep model-based MR parameter mapping network termed “DOPAMINE” was developed to reconstruct MR parameter maps from undersampled multi-channel k-space data. It consists of two models: 1) an MR parameter mapping model, which estimates initial parameter maps from undersampled k-space data with a deep convolutional neural network (CNN-based mapping), and 2) a parameter map reconstruction model, which removes aliasing artifacts with a deep CNN (CNN-based reconstruction) interleaved with a data consistency layer derived from an embedded MR model-based optimization procedure.
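To make the idea of model-based data consistency on parameter maps concrete, here is a minimal sketch that assumes a mono-exponential T2 decay, single-coil Cartesian sampling and real-valued maps. The abstract does not state which signal model or coil handling DOPAMINE uses, so these choices, and the names `loss_and_grad` and `dc_step`, are hypothetical. The gradient of the k-space fidelity term is pushed back through the signal model to the M0 and R2 = 1/T2 maps, which is the kind of update an interleaved data-consistency layer could perform between CNN stages.

```python
import numpy as np


def fft2c(x):
    """Unitary 2D FFT over the last two axes."""
    return np.fft.fft2(x, norm="ortho")


def ifft2c(k):
    """Unitary 2D inverse FFT over the last two axes."""
    return np.fft.ifft2(k, norm="ortho")


def signal_model(m0, r2, te):
    """Assumed mono-exponential model: image[e] = m0 * exp(-te[e] * r2)."""
    return m0[None] * np.exp(-te[:, None, None] * r2[None])


def forward(m0, r2, te, mask):
    """Parameter maps (H, W) -> undersampled multi-echo k-space (E, H, W)."""
    return mask * fft2c(signal_model(m0, r2, te))


def loss_and_grad(m0, r2, te, mask, y):
    """L = 0.5 * ||forward(m0, r2) - y||^2 and its gradients w.r.t. m0 and r2."""
    decay = np.exp(-te[:, None, None] * r2[None])            # (E, H, W)
    residual = mask * fft2c(m0[None] * decay) - y             # (E, H, W)
    g_img = np.real(ifft2c(mask * residual))                  # dL/d(image), images are real
    loss = 0.5 * np.sum(np.abs(residual) ** 2)
    grad_m0 = np.sum(g_img * decay, axis=0)                   # chain rule through m0
    grad_r2 = np.sum(g_img * m0[None] * (-te[:, None, None]) * decay, axis=0)
    return loss, grad_m0, grad_r2


def dc_step(m0, r2, te, mask, y, step):
    """One gradient step on the parameter maps (model-based data-consistency update)."""
    _, g_m0, g_r2 = loss_and_grad(m0, r2, te, mask, y)
    return m0 - step * g_m0, r2 - step * g_r2


rng = np.random.default_rng(1)
H = W = 16
te = np.linspace(0.01, 0.08, 8)                   # hypothetical echo times in seconds
m0_true = 1.0 + 0.1 * rng.random((H, W))
r2_true = 20.0 + 5.0 * rng.random((H, W))         # relaxation rate R2 = 1/T2 in 1/s
mask = (rng.random((8, H, W)) < 0.3).astype(float)
y = forward(m0_true, r2_true, te, mask)

# Stand-in for the initial maps that a CNN-based mapping stage would provide.
m0 = np.ones((H, W))
r2 = np.full((H, W), 15.0)
m0_new, r2_new = dc_step(m0, r2, te, mask, y, step=1e-3)
```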

0989.

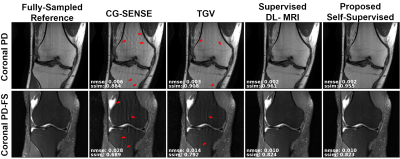

Physics-Based Self-Supervised Deep Learning for Accelerated MRI Without Fully Sampled Reference Data

Burhaneddin Yaman1,2, Seyed Amir Hossein Hosseini1,2, Steen Moeller2, Jutta Ellermann2, Kamil Ugurbil2, and Mehmet Akcakaya1,2

1Electrical and Computer Engineering, University of Minnesota, Minneapolis, MN, United States, 2Center for Magnetic Resonance Research, University of Minnesota, Minneapolis, MN, United States

Recently, deep learning (DL) has emerged as a means of improving accelerated MRI reconstruction. However, most current DL-MRI approaches depend on the availability of fully sampled ground-truth data, which are generally infeasible or impractical to acquire because of constraints such as organ motion. In this work, we tackle this issue with a physics-based self-supervised DL approach in which the acquired measurements are split into two sets: the first is used for data consistency while training the network, and the second is used to define the loss. The proposed technique enables training of high-quality DL-MRI reconstruction without fully sampled data.
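A minimal sketch of the measurement split, assuming uniform random selection of the held-out locations and a normalized L2 loss on them; the selection strategy, the 40% loss fraction and the function names are hypothetical choices, not taken from the abstract. `theta` would feed the data-consistency units of the unrolled network during training, and only the entries in `lam` enter the loss.

```python
import numpy as np


def split_mask(mask, loss_fraction=0.4, rng=None):
    """Randomly partition the acquired sampling locations into two disjoint sets.

    Returns (theta, lam): theta drives the data-consistency units during
    training, lam is held out to define the loss.
    """
    rng = np.random.default_rng() if rng is None else rng
    acquired = np.flatnonzero(mask)                      # flat indices of sampled points
    n_loss = int(round(loss_fraction * acquired.size))
    chosen = rng.choice(acquired, size=n_loss, replace=False)
    lam = np.zeros(mask.size, dtype=bool)
    lam[chosen] = True
    lam = lam.reshape(mask.shape)
    theta = mask.astype(bool) & ~lam
    return theta, lam


def heldout_loss(k_pred, y, lam):
    """Normalized L2 error between predicted and measured k-space on the held-out set."""
    return np.linalg.norm((k_pred - y)[lam]) / np.linalg.norm(y[lam])


rng = np.random.default_rng(0)
mask = rng.random((64, 64)) < 0.25
theta, lam = split_mask(mask, loss_fraction=0.4, rng=rng)

# Toy measured k-space, zero outside the acquired locations.
y = mask * (rng.standard_normal((64, 64)) + 1j * rng.standard_normal((64, 64)))
```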

0990.

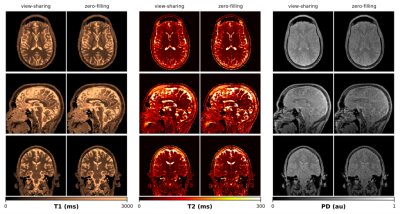

Synchronizing dimension reduction and parameter inference in 3D multiparametric MRI: A hybrid dual-pathway neural network approach

Carolin M Pirkl1,2, Izabela Horvath1,2, Sebastian Endt1,2, Guido Buonincontri3,4, Marion I Menzel2,5, Pedro A Gómez1, and Bjoern H Menze1

1Informatics, Technical University of Munich, Munich, Germany, 2GE Healthcare, Munich, Germany, 3Fondazione Imago7, Pisa, Italy, 4IRCCS Fondazione Stella Maris, Pisa, Italy, 5Physics, Technical University of Munich, Munich, Germany

Complementing the fast acquisition of coupled multiparametric MR signals, multiple studies have dealt with improving and accelerating parameter quantification using machine learning techniques. Here we synchronize dimension reduction and parameter inference and propose a hybrid neural network with a signal-encoding layer followed by a dual-pathway structure, for parameter prediction and recovery of the artifact-free signal evolution. We demonstrate our model with a 3D multiparametric MRI framework and show that it is capable of reliably inferring T1, T2 and PD estimates, while its trained latent-space projection facilitates efficient data compression already in k-space and thereby significantly accelerates image reconstruction.
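If the signal-encoding layer is linear along the time dimension (an assumption here), it commutes with the spatial Fourier transform, which is why compression can already be applied in k-space before image reconstruction. The sketch below checks this numerically, using an SVD basis of a toy exponential-decay dictionary as a stand-in for the trained encoding weights; the dictionary, rank and function names are hypothetical.

```python
import numpy as np


def svd_basis(dictionary, rank):
    """Leading right singular vectors of a (n_atoms, T) dictionary -> (T, rank) basis."""
    _, _, vh = np.linalg.svd(dictionary, full_matrices=False)
    return vh[:rank].conj().T


def project(series, V):
    """Project a (T, H, W) time series onto the basis -> (rank, H, W) coefficients."""
    return np.tensordot(V.conj().T, series, axes=([1], [0]))


# Toy dictionary of exponential decays (hypothetical stand-in for simulated signals).
t = np.arange(1, 101) * 0.01
t2 = np.linspace(0.02, 0.3, 200)
dictionary = np.exp(-t[None, :] / t2[:, None])           # (200, 100)
V = svd_basis(dictionary, rank=5)                         # (100, 5)

rng = np.random.default_rng(0)
kspace = rng.standard_normal((100, 32, 32)) + 1j * rng.standard_normal((100, 32, 32))

# Compress first in k-space, then reconstruct only 5 latent images ...
latent_from_k = np.fft.ifft2(project(kspace, V), norm="ortho")
# ... which matches reconstructing all 100 frames and compressing afterwards.
latent_from_img = project(np.fft.ifft2(kspace, norm="ortho"), V)
```

Only `rank` latent images need to be reconstructed instead of every time frame, which is where the reconstruction speed-up would come from under this linearity assumption.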

0991.

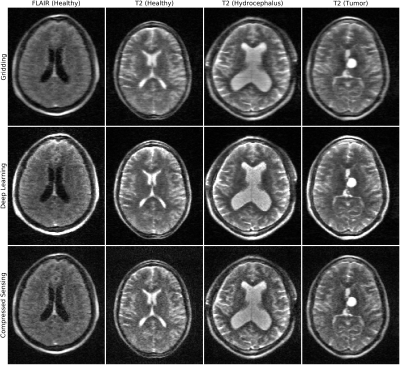

Deep Learning MRI Reconstruction in Application to Point-of-Care MRI

Jo Schlemper1, Seyed Sadegh Mohseni Salehi1, Carole Lazarus1, Hadrien Dyvorne1, Rafael O'Halloran1, Nicholas de Zwart1, Laura Sacolick1, Samantha By1, Joel M. Stein2, Daniel Rueckert3, Michal Sofka1, and Prantik Kundu1,4

1Hyperfine Research Inc., Guilford, CT, United States, 2Hospitals of the University of Pennsylvania, Philadelphia, PA, United States, 3Computing, Imperial College London, London, United Kingdom, 4Icahn School of Medicine at Mount Sinai, New York City, NY, United States

The goal of low-field (64 mT) portable point-of-care (POC) MRI is to produce low-cost, clinically acceptable MR images in reasonable scan times. However, non-ideal MRI behavior makes the image quality susceptible to artifacts from system imperfections and undersampling. In this work, we propose a deep learning approach for fast reconstruction of images affected by hardware- and sampling-associated artifacts. The proposed approach outperforms reference deep learning approaches on retrospectively undersampled data with simulated system imperfections. Furthermore, we demonstrate that it yields better image quality and faster reconstruction than a compressed sensing approach on unseen, prospectively undersampled low-field POC MR images.

0992.

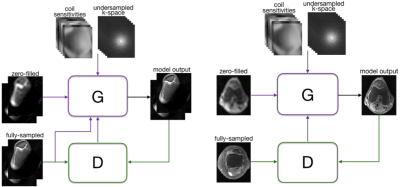

Wasserstein GANs for MR Imaging: from Paired to Unpaired Training

Ke Lei1, Morteza Mardani1,2, Shreyas S. Vasanawala2, and John M. Pauly1

1Electrical Engineering, Stanford University, Stanford, CA, United States, 2Radiology, Stanford University, Stanford, CA, United States

The lack of ground-truth MR images impedes the common supervised training of deep networks for image reconstruction. This work leverages Wasserstein GANs (WGANs) for unpaired training of reconstruction networks. The reconstruction network is an unrolled neural network with a cascade of residual blocks and data consistency modules. The discriminator is a multilayer CNN that acts as a critic, scoring the generated and label images. Our experiments demonstrate that unpaired WGAN training with minimal supervision is a viable option when there are insufficient or no fully sampled training label images that match the input images. Adding WGANs to paired training is also shown to be effective.
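For reference, the Wasserstein objectives behind this setup can be sketched as follows. The abstract does not say how the critic is kept Lipschitz, so the weight clipping of the original WGAN formulation is shown as one option; the toy linear critic, the MSE term used for paired training and its weight are assumptions for illustration only.

```python
import numpy as np


def critic_loss(scores_real, scores_fake):
    """WGAN critic objective to minimize: E[D(fake)] - E[D(real)]."""
    return np.mean(scores_fake) - np.mean(scores_real)


def generator_loss(scores_fake, recon=None, target=None, pixel_weight=1.0):
    """Loss for the reconstruction network.

    Unpaired training: adversarial term -E[D(recon)] only.
    Paired training (target given): adds an assumed pixel-wise MSE term.
    """
    loss = -np.mean(scores_fake)
    if target is not None:
        loss += pixel_weight * np.mean(np.abs(recon - target) ** 2)
    return loss


def clip_weights(weights, c=0.01):
    """Weight clipping from the original WGAN, keeping the critic roughly Lipschitz."""
    return [np.clip(w, -c, c) for w in weights]


# Toy linear critic D(x) = <w, x> on flattened magnitude images (hypothetical).
rng = np.random.default_rng(0)
(w,) = clip_weights([rng.standard_normal(64 * 64)])
labels = rng.random((4, 64, 64))        # unpaired fully sampled label images
recons = rng.random((4, 64, 64))        # outputs of the unrolled reconstruction network
scores_real = labels.reshape(4, -1) @ w
scores_fake = recons.reshape(4, -1) @ w

d_loss = critic_loss(scores_real, scores_fake)
g_loss_unpaired = generator_loss(scores_fake)
g_loss_paired = generator_loss(scores_fake, recons, labels)
```

In the unpaired case the labels only enter through the critic scores, so they need not correspond to the undersampled inputs.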

0993.

RED-N2N: Image reconstruction for MRI using deep CNN priors trained without ground truth

Jiaming Liu1, Cihat Eldeniz1, Yu Sun1, Weijie Gan1, Sihao Chen1, Hongyu An1, and Ulugbek S. Kamilov1

1Washington University in St. Louis, St. Louis, MO, United States

We propose a new MR image reconstruction method that systematically enforces data consistency while also exploiting deep-learning imaging priors. The prior is specified through a convolutional neural network (CNN) trained to remove undersampling artifacts from MR images without any artifact-free ground truth. The results on reconstructing free-breathing MRI data into ten respiratory phases show that the method can form high-quality 4D images from severely undersampled measurements corresponding to acquisitions of about 1 minute in length. The results also highlight the improved performance of the method compared to several popular alternatives, including compressive sensing and UNet3D.
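The update rule is not given here, so the following is a minimal sketch of a standard regularization-by-denoising (RED) gradient iteration, assuming single-coil Cartesian sampling and a 3x3 box filter as a hypothetical stand-in for the Noise2Noise-trained CNN. The data-fidelity gradient enforces consistency with the measured k-space, and the term `tau * (x - D(x))` injects the denoiser prior; `tau`, `step` and `n_iter` are arbitrary illustrative values.

```python
import numpy as np


def fft2c(x):
    """Unitary 2D FFT."""
    return np.fft.fft2(x, norm="ortho")


def ifft2c(k):
    """Unitary 2D inverse FFT."""
    return np.fft.ifft2(k, norm="ortho")


def box_denoiser(x):
    """Hypothetical stand-in for the trained CNN: 3x3 periodic box filter."""
    out = np.zeros_like(x)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            out = out + np.roll(x, (dy, dx), axis=(0, 1))
    return out / 9.0


def red_reconstruct(y, mask, denoiser, tau=0.5, step=0.5, n_iter=50):
    """Regularization by denoising (RED) via gradient descent.

    x <- x - step * (A^H (A x - y) + tau * (x - D(x))),  with A = mask * F.
    """
    x = ifft2c(y)                                         # zero-filled initial guess
    for _ in range(n_iter):
        grad_dc = ifft2c(mask * (mask * fft2c(x) - y))    # data-fidelity gradient
        x = x - step * (grad_dc + tau * (x - denoiser(x)))
    return x


rng = np.random.default_rng(0)
x_true = rng.random((32, 32))
mask = (rng.random((32, 32)) < 0.3).astype(float)
y = mask * fft2c(x_true)
x_red = red_reconstruct(y, mask, box_denoiser)
```

With an identity denoiser the prior term vanishes and the zero-filled image is already a stationary point, which makes a convenient sanity check.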

0994.

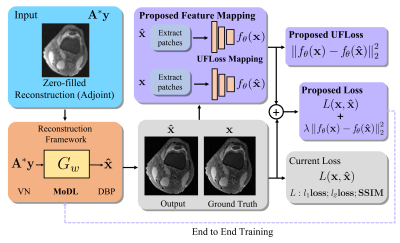

High-Fidelity Reconstruction with Instance-wise Discriminative Feature Matching Loss

Ke Wang1, Jonathan I. Tamir1,2, Stella X. Yu1,3, and Michael Lustig1

1Electrical Engineering and Computer Sciences, University of California, Berkeley, Berkeley, CA, United States, 2Electrical and Computer Engineering, The University of Texas at Austin, Austin, TX, United States, 3International Computer Science Institute, University of California, Berkeley, Berkeley, CA, United States

Machine-learning-based reconstructions have shown great potential for reducing scan time while maintaining high image quality. However, the per-pixel losses commonly used for training do not capture perceptual differences between the reconstructed and ground-truth images, which leads to blurring or reduced texture. We therefore incorporate a novel feature-representation-based loss function, which we call Unsupervised Feature Loss (UFLoss), into existing reconstruction pipelines (e.g., MoDL). In vivo results on both 2D and 3D reconstructions show that adding UFLoss encourages more realistic reconstructed images with much more detail than conventional methods (MoDL and compressed sensing).
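A rough sketch of how a patch-based feature loss can be added to a pixel-wise loss. In UFLoss the feature mapping is a trained network; here a fixed random linear projection followed by L2 normalization stands in for it, and the patch size, stride and weighting are hypothetical. The point is only the structure: distances are measured between feature vectors of corresponding patches rather than between individual pixels.

```python
import numpy as np


def extract_patches(img, size=8, stride=4):
    """Collect overlapping square patches as flattened row vectors."""
    H, W = img.shape
    patches = [img[i:i + size, j:j + size].ravel()
               for i in range(0, H - size + 1, stride)
               for j in range(0, W - size + 1, stride)]
    return np.stack(patches)


def features(patches, proj):
    """Hypothetical feature map: linear projection followed by L2 normalization."""
    f = patches @ proj
    return f / (np.linalg.norm(f, axis=1, keepdims=True) + 1e-12)


def uf_style_loss(recon, target, proj, weight=0.1):
    """Pixel-wise L1 loss plus a patch feature-space squared-distance loss."""
    pixel = np.mean(np.abs(recon - target))
    f_rec = features(extract_patches(recon), proj)
    f_tgt = features(extract_patches(target), proj)
    feat = np.mean(np.sum((f_rec - f_tgt) ** 2, axis=1))
    return pixel + weight * feat


rng = np.random.default_rng(0)
proj = rng.standard_normal((64, 16))      # hypothetical fixed map: 8x8 patch -> 16 features
target = rng.random((32, 32))
recon = target + 0.05 * rng.standard_normal((32, 32))
loss = uf_style_loss(recon, target, proj)
```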

0995.

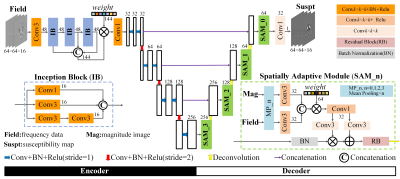

A spatially adaptive cross-modality based three-dimensional reconstruction network for susceptibility imaging

Lijun Bao1 and Hongyuan Zhang1

1Xiamen University, Xiamen, China

In this work, we propose a spatially adaptive, cross-modality-based three-dimensional reconstruction network to determine the susceptibility distribution from the magnetic field measurement. To compensate for the information lost in earlier encoder layers, a set of spatially adaptive modules at different resolutions is embedded into multiscale decoders, which adaptively extract features from magnitude images and field maps. The magnitude regularization is thus incorporated into the network architecture, and training stability is improved. The approach has potential for solving three-dimensional inverse problems, especially cross-modality reconstructions.
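The network learns to invert the field-to-susceptibility relation. For orientation, the standard forward model can be sketched as a k-space multiplication with the unit dipole kernel `D(k) = 1/3 - kz^2/|k|^2`, assuming B0 along the last array axis and setting the undefined k = 0 term to zero. This is the physical model only, not the proposed network, and the function names are illustrative.

```python
import numpy as np


def dipole_kernel(shape, voxel_size=(1.0, 1.0, 1.0)):
    """k-space unit dipole kernel D(k) = 1/3 - kz^2 / |k|^2, B0 along the last axis."""
    axes = [np.fft.fftfreq(n, d=d) for n, d in zip(shape, voxel_size)]
    kx, ky, kz = np.meshgrid(*axes, indexing="ij")
    k2 = kx**2 + ky**2 + kz**2
    with np.errstate(divide="ignore", invalid="ignore"):
        D = 1.0 / 3.0 - kz**2 / k2
    D[k2 == 0] = 0.0          # the k = 0 term is undefined; set to zero by convention
    return D


def susceptibility_to_field(chi, voxel_size=(1.0, 1.0, 1.0)):
    """Forward model: relative field shift = F^-1[D * F chi] (same units as chi)."""
    D = dipole_kernel(chi.shape, voxel_size)
    return np.real(np.fft.ifftn(D * np.fft.fftn(chi)))


# Field pattern of a single susceptibility source in the middle of a 16^3 volume.
chi = np.zeros((16, 16, 16))
chi[8, 8, 8] = 1.0
field = susceptibility_to_field(chi)
```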

0996.

MRI Reconstruction Using Deep Bayesian Inference

Guanxiong Luo1 and Peng Cao1

1The University of Hong Kong, Hong Kong, China

Deep neural networks provide a practical way to extract features from existing image databases. For MRI reconstruction, we present a novel method that exploits such feature extraction through Bayesian inference. The innovations of this work are 1) the definition of an image prior based on an autoregressive network, and 2) a method that offers flexibility and generality, accommodating changes in MRI acquisition settings such as the number of radio-frequency coils and the matrix size or spatial resolution.
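The flexibility described here is consistent with keeping the learned image prior separate from the acquisition model: the multi-coil forward operator A can be rebuilt at inference time for any coil count or matrix size. The sketch below shows such an operator with its adjoint and one gradient step on log p(y|x) + log p(x). The prior score `gaussian_score` is a hypothetical placeholder for the gradient an autoregressive network would supply, and the step size and noise variance are arbitrary.

```python
import numpy as np


def fft2c(x):
    """Unitary 2D FFT over the last two axes."""
    return np.fft.fft2(x, norm="ortho")


def ifft2c(k):
    """Unitary 2D inverse FFT over the last two axes."""
    return np.fft.ifft2(k, norm="ortho")


def forward(x, sens, mask):
    """A x: image (H, W) -> undersampled multi-coil k-space (C, H, W)."""
    return mask * fft2c(sens * x[None])


def adjoint(k, sens, mask):
    """A^H k: multi-coil k-space (C, H, W) -> image (H, W)."""
    return np.sum(np.conj(sens) * ifft2c(mask * k), axis=0)


def map_step(x, y, sens, mask, prior_score, step=0.5, noise_var=1.0):
    """One gradient-ascent step on log p(y | x) + log p(x)."""
    grad_loglik = -adjoint(forward(x, sens, mask) - y, sens, mask) / noise_var
    return x + step * (grad_loglik + prior_score(x))


def gaussian_score(x):
    """Hypothetical prior score (standard Gaussian), standing in for the network."""
    return -x


def crandn(rng, *shape):
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)


rng = np.random.default_rng(0)
for n_coils, size in ((4, 16), (8, 24)):      # coil count and matrix size change freely
    sens = crandn(rng, n_coils, size, size)
    mask = (rng.random((size, size)) < 0.4).astype(float)
    y = forward(crandn(rng, size, size), sens, mask)
    x = map_step(adjoint(y, sens, mask), y, sens, mask, gaussian_score)
```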
