Digital Posters

Prostate: Deep Learning

ISMRM & SMRT Annual Meeting • 15-20 May 2021

| Concurrent 5 | 17:00 - 18:00 |

4105.

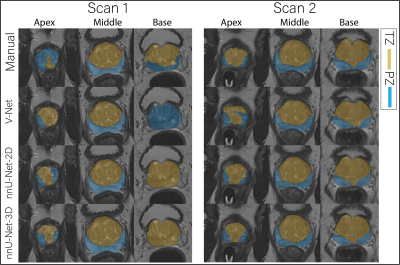

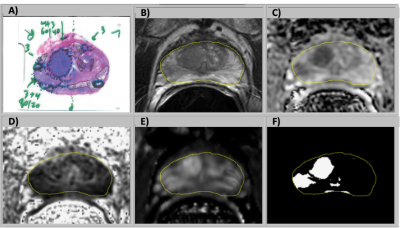

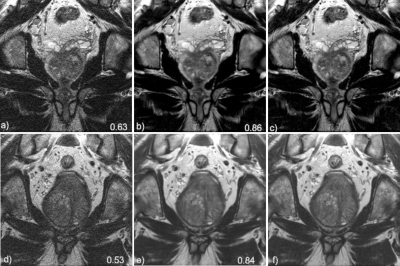

The repeatability of deep learning-based segmentation of the prostate, peripheral and transition zones on T2-weighted MR images

Mohammed R. S. Sunoqrot1, Kirsten M. Selnæs1,2, Elise Sandsmark2, Sverre Langørgen2, Helena Bertilsson3,4, Tone F. Bathen1,2, and Mattijs Elschot1,2

1Department of Circulation and Medical Imaging, NTNU, Norwegian University of Science and Technology, Trondheim, Norway, 2Department of Radiology and Nuclear Medicine, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway, 3Department of Cancer Research and Molecular Medicine, NTNU, Norwegian University of Science and Technology, Trondheim, Norway, 4Department of Urology, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway

Organ segmentation is an essential step in computer-aided diagnosis systems. Deep learning (DL)-based methods provide good performance for prostate segmentation, but little is known about their repeatability. In this work, we investigated the intra-patient repeatability of shape features for DL-based segmentation methods of the whole prostate (WP), peripheral zone (PZ) and transition zone (TZ) on T2-weighted MRI, and compared it to the repeatability of manual segmentations. We found that the repeatability of the investigated methods is comparable to manual for most of the investigated shape features from the WP and TZ segmentations, but not for PZ segmentations in all methods.
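The abstract does not name its repeatability metric. As a minimal sketch, assuming two repeated scans per patient and one shape-feature value per scan (for example, segmented volume), the within-subject standard deviation (wSD), repeatability coefficient (RC) and root-mean-square within-subject coefficient of variation (wCV) can be computed as follows; the function name `repeatability_stats` is hypothetical.

```python
import numpy as np


def repeatability_stats(scan1, scan2):
    """Test-retest repeatability of one shape feature measured on two scans per patient.

    scan1, scan2: sequences with one value per patient (e.g. segmented volume in mL).
    Returns (within-subject SD, repeatability coefficient, RMS within-subject CV).
    """
    x1 = np.asarray(scan1, dtype=float)
    x2 = np.asarray(scan2, dtype=float)
    d = x1 - x2
    # With two repeats per subject, each subject's within-subject variance is d**2 / 2.
    wsd = np.sqrt(np.mean(d ** 2 / 2.0))
    # Repeatability coefficient: 1.96 * sqrt(2) * wSD (approximately 2.77 * wSD).
    rc = 1.96 * np.sqrt(2.0) * wsd
    # Root-mean-square of each subject's SD divided by that subject's mean.
    subject_sd = np.abs(d) / np.sqrt(2.0)
    subject_mean = (x1 + x2) / 2.0
    wcv = np.sqrt(np.mean((subject_sd / subject_mean) ** 2))
    return wsd, rc, wcv
```

Computing these statistics once per DL method and once for the manual segmentations gives a like-for-like comparison for each feature and zone.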

4106.

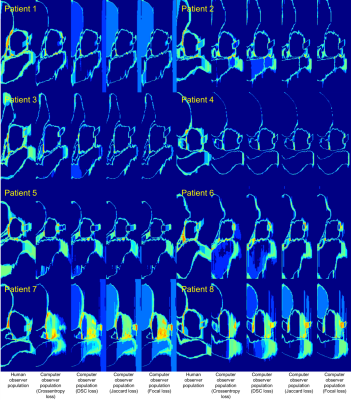

Assessing the variability of contours performed by DL algorithms in prostate MRI

Jeremiah Sanders1, Henry Mok2, Alexander Hanania3, Aradhana Venkatesan4, Chad Tang2, Teresa Bruno2, Howard Thames5, Rajat Kudchadker6, and Steven Frank2

1Imaging Physics, UT MD Anderson Cancer Center, Houston, TX, United States, 2Radiation Oncology, UT MD Anderson Cancer Center, Houston, TX, United States, 3Radiation Oncology, Baylor College of Medicine, Houston, TX, United States, 4Diagnostic Radiology, UT MD Anderson Cancer Center, Houston, TX, United States, 5Biostatistics, UT MD Anderson Cancer Center, Houston, TX, United States, 6Radiation Physics, UT MD Anderson Cancer Center, Houston, TX, United States

Quantitative techniques for characterizing deep learning (DL) algorithms are necessary to inform their clinical application, use, and quality assurance. This work analyzes the performance of DL algorithms for segmentation in prostate MRI at a population level. We performed computational observer studies and spatial entropy mapping for characterizing the variability of DL segmentation algorithms and evaluated them on a clinical MRI task that informs the treatment and management of prostate cancer patients. Specifically, we analyzed the task of prostate and peri-prostatic anatomy segmentation in prostate MRI and compared human and computer observer populations against one another.
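Spatial entropy mapping is not defined in the abstract. One common formulation, assumed here, treats each voxel's label across a population of observers (human or algorithm) as a Bernoulli variable and maps its binary entropy: 0 bits where all observers agree and 1 bit where they split evenly. `spatial_entropy_map` is a hypothetical helper.

```python
import numpy as np


def spatial_entropy_map(masks):
    """Voxel-wise binary entropy (bits) of a stack of binary masks.

    masks: array-like of shape (n_observers, ...) holding one binary mask per observer.
    """
    masks = np.asarray(masks, dtype=bool)
    p = masks.mean(axis=0)  # fraction of observers labelling each voxel as the structure
    entropy = np.zeros(p.shape, dtype=float)
    # Voxels with p == 0 or p == 1 (full agreement) keep zero entropy.
    disputed = (p > 0) & (p < 1)
    q = p[disputed]
    entropy[disputed] = -(q * np.log2(q) + (1 - q) * np.log2(1 - q))
    return entropy
```

One possible population-level summary is the sum of the map over the image, which lets a human-observer population and an algorithm population be compared with a single number.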

4107.

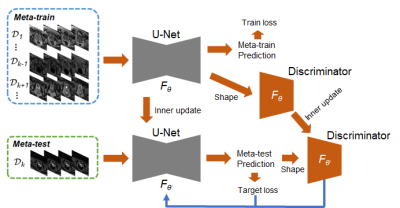

Few-shot Meta-learning with Adversarial Shape Prior for Zonal Prostate Segmentation on T2-Weighted MRI

Han Yu1, Varut Vardhanabhuti1, and Peng Cao1

1The University of Hong Kong, Hong Kong, Hong Kong

We propose a novel gradient-based meta-learning scheme to tackle the challenges of deploying a model to a different medical center where labeled data are lacking. A pre-trained model is always suboptimal when deployed to different medical centers, where various protocols and scanners are used. Our method combines a 2D U-Net, used as a segmentor to generate segmentation maps, with an adversarial network that learns from the shape prior during meta-training and meta-testing. Evaluation results on public prostate MRI data and our local HKU database show that our approach outperformed existing naive U-Net methods.

4108.

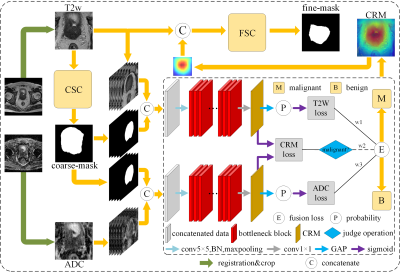

A mutual communicated model based on multi-parametric MRI for automated prostate cancer segmentation and classification

Piqiang Li1, Zhao Li2, Qinjia Bao2, Kewen Liu1, Xiangyu Wang3, Guangyao Wu4, and Chaoyang Liu2

1School of Information Engineering, Wuhan University of Technology, Wuhan, China, 2State Key Laboratory of Magnetic Resonance and Atomic and Molecular Physics, Wuhan Institute of Physics and Mathematics, Innovation Academy for Precision Measurement Science and Technology, Wuhan, China, 3Department of Radiology, The First Affiliated Hospital of Shenzhen University, Shenzhen, China, 4Department of Radiology, Shenzhen University General Hospital, Shenzhen, China

We proposed a Mutual Communicated Deep learning Segmentation and Classification Network (MC-DSCN) for prostate cancer based on multi-parametric MRI. The network consists of three mutually bootstrapping components: a coarse segmentation component that provides coarse-mask information to the classification component; a mask-guided classification component that generates location maps from multi-parametric MRI; and a fine segmentation component guided by those location maps. By jointly performing segmentation based on pixel-level information and classification based on image-level information, the network improves segmentation and classification accuracy simultaneously.

4109.

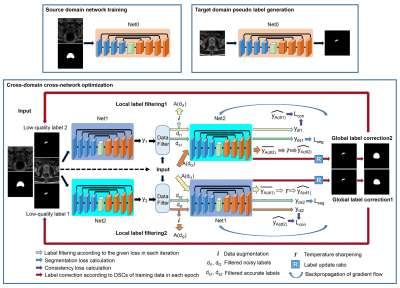

A novel unsupervised domain adaptation method for deep learning-based prostate MR image segmentation

Cheng Li1, Hui Sun1, Taohui Xiao1, Xin Liu1, Hairong Zheng1, and Shanshan Wang1

1Paul C. Lauterbur Research Center for Biomedical Imaging, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Automatic prostate MR image segmentation is needed to help doctors achieve fast and accurate disease diagnosis and treatment planning. Deep learning (DL) has shown promising results. However, DL models often face challenges in applications when there are large discrepancies between the training (source domain) and test (target domain) data. Here we propose a novel unsupervised domain adaptation method that addresses this issue without using any target domain labels. Our method trains two models in parallel to filter and correct the pseudo-labels generated for the target domain training data and thus achieves substantially improved segmentation results on the test data.
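The abstract does not specify how the two parallel models filter the pseudo-labels. A hypothetical filtering rule, shown only to illustrate the idea, keeps a target-domain voxel's pseudo-label when both models agree and are each confident, and marks every other voxel as ignored during self-training. The threshold, the `IGNORE` value and the function name are assumptions, and the correction step is not modelled.

```python
import numpy as np

IGNORE = -1  # hypothetical label value excluded from the loss during self-training


def filter_pseudo_labels(prob_a, prob_b, threshold=0.9):
    """Combine two models' foreground probabilities into filtered pseudo-labels."""
    prob_a = np.asarray(prob_a, dtype=float)
    prob_b = np.asarray(prob_b, dtype=float)
    label_a = prob_a >= 0.5
    label_b = prob_b >= 0.5
    # Confidence of each model in its own hard prediction.
    conf_a = np.where(label_a, prob_a, 1 - prob_a)
    conf_b = np.where(label_b, prob_b, 1 - prob_b)
    # Keep only voxels where both models agree and both are confident.
    keep = (label_a == label_b) & (conf_a >= threshold) & (conf_b >= threshold)
    pseudo = np.full(prob_a.shape, IGNORE, dtype=int)
    pseudo[keep] = label_a[keep].astype(int)
    return pseudo
```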

4110.

Automated image segmentation of prostate MR elastography by dense-like U-net.

Nader Aldoj1, Federico Biavati1, Sebastian Stober2, Marc Dewey1, Patrick Asbach1, and Ingolf Sack1

1Charité, Berlin, Germany, 2Ovgu Magdeburg, Magdeburg, Germany

The purpose was to investigate the impact of individual or combined MR elastography (MRE) maps and MRI sequences on the segmentation of the whole prostate gland and its zones using a dense-like U-net. Our study showed that the Dice score obtained with MRE maps was higher (i.e., more accurate segmentation) than that obtained with MRI sequences. Moreover, we found that the magnitude MRE map had the highest importance for accurate segmentation among all tested maps/sequences. In conclusion, MRE maps resulted in excellent segmentations, even when compared to T2w images, which are the standard choice for segmentation tasks.
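The Dice score referred to above measures the overlap between a predicted mask A and a reference mask B as 2|A∩B| / (|A| + |B|). A minimal NumPy sketch (the helper name is illustrative, and scoring two empty masks as 1.0 is a convention, not part of the definition):

```python
import numpy as np


def dice_score(pred, ref):
    """Dice similarity coefficient between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    denom = pred.sum() + ref.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return 2.0 * np.logical_and(pred, ref).sum() / denom
```

For zonal evaluation, the same function can be applied per label of a multi-label map, e.g. `dice_score(pred == k, ref == k)` for each zone label `k`.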

4111.

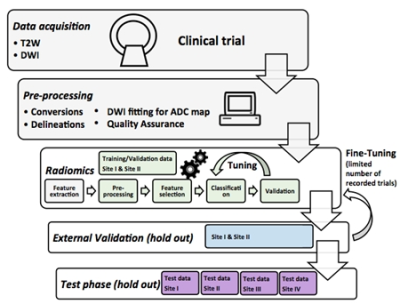

Machine learning challenge using uniform prostate MRI scans from 4 centers (PRORAD)

Harri Merisaari1, Pekka Taimen2, Otto Ettala2, Juha Knaapila2, Kari T Syvänen2, Esa Kähkönen2, Aida Steiner2, Janne Verho2, Paula Vainio2, Marjo Seppänen3, Jarno Riikonen4, Sanna Mari Vimpeli4, Antti Rannikko5, Outi Oksanen5, Tuomas Mirtti5, Ileana Montoya Perez1, Tapio Pahikkala1, Parisa Movahedi1, Tarja Lamminen2, Jani Saunavaara2, Peter J Boström2, Hannu J Aronen1, and Ivan Jambor6

1University of Turku, Turku, Finland, 2Turku University Hospital, Turku, Finland, 3Satakunta Central Hospital, Pori, Finland, 4Tampere University Hospital, Tampere, Finland, 5Helsinki University Hospital, Helsinki, Finland, 6Icahn School of Medicine at Mount Sinai, New York, NY, United States

PRORAD is a series of machine learning challenges hosted at CodaLab that provides access to prostate MRI data sets from 4 centers, acquired using the publicly available IMPROD bpMRI acquisition protocol. The challenge is designed for the development, validation, and independent testing of machine learning methods for prostate MRI.

4112.

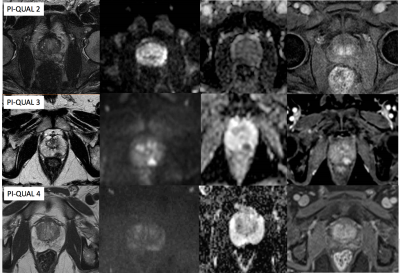

Evaluation of the inter-reader reproducibility of the PI-QUAL scoring system for prostate MRI quality

Francesco Giganti1, Eoin Dinneen1, Veeru Kasivisvanathan1, Aiman Haider2, Alex Freeman2, Mark Emberton1, Greg Shaw2, Caroline M Moore1, and Clare Allen2

1University College London, London, United Kingdom, 2University College London Hospital, London, United Kingdom

We assessed the interobserver reproducibility of the Prostate Imaging Quality (PI-QUAL) score for prostate MR quality between two expert radiologists who independently scored a total of 41 multiparametric prostate MR scans from different vendors and scanners. All men included in this study had biopsy-confirmed prostate cancer and received robotic-assisted laparoscopic prostatectomy after imaging. Agreement was substantial (κ = 0.77; percent agreement = 80%) when assessing each single PI-QUAL score (1 to 5). Two expert radiologists achieved substantial reproducibility for the PI-QUAL score, but the composition of the scoring system will need to undergo further refinements.
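The abstract reports κ alongside percent agreement but does not say whether a weighted kappa was used. The sketch below computes percent agreement and unweighted Cohen's kappa for two readers' PI-QUAL scores (1 to 5); a weighted variant would additionally penalize disagreements by their distance. The function name is hypothetical.

```python
import numpy as np


def percent_agreement_and_kappa(reader1, reader2, categories=(1, 2, 3, 4, 5)):
    """Percent agreement and unweighted Cohen's kappa for two readers' categorical scores."""
    r1 = np.asarray(reader1)
    r2 = np.asarray(reader2)
    p_observed = np.mean(r1 == r2)
    # Agreement expected by chance, from each reader's marginal score frequencies.
    p_expected = sum(np.mean(r1 == c) * np.mean(r2 == c) for c in categories)
    # Undefined (division by zero) if both readers always give the same single score.
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return 100 * p_observed, kappa
```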

4113.

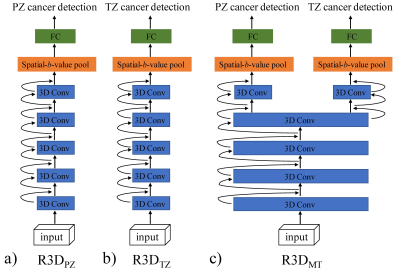

Prostate Cancer Detection Using High b-Value Diffusion MRI with a Multi-task 3D Residual Convolutional Neural Network

Guangyu Dan1,2, Min Li3, Mingshuai Wang4, Zheng Zhong1,2, Kaibao Sun1, Muge Karaman1,2, Tao Jiang3, and Xiaohong Joe Zhou1,2,5

1Center for MR Research, University of Illinois at Chicago, Chicago, IL, United States, 2Department of Bioengineering, University of Illinois at Chicago, Chicago, IL, United States, 3Department of Radiology, Beijing Chaoyang Hospital, Capital Medical University, Beijing, China, 4Department of Urology, Beijing Chaoyang Hospital, Capital Medical University, Beijing, China, 5Departments of Radiology and Neurosurgery, University of Illinois at Chicago, Chicago, IL, United States

The diffusion-weighted signal attenuation pattern contains valuable information about the diffusion properties of the underlying tissue microstructure. With their extraordinary pattern recognition capability, deep learning (DL) techniques have great potential for analyzing diffusion signal decay. In this study, we proposed a 3D residual convolutional neural network (R3D) that detects prostate cancer by embedding the diffusion signal decay into one of the convolutional dimensions. By combining R3D with multi-task learning (R3DMT), excellent and stable prostate cancer detection performance was achieved in the peripheral zone (AUC of 0.990±0.008) and the transition zone (AUC of 0.983±0.016).
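How R3D arranges its input is not detailed in the abstract. As an assumption-labelled sketch, one way to place the signal decay on a convolutional axis is to stack the images acquired at each b-value in ascending order and normalize by the lowest-b image, so that a 3D kernel spans neighbouring b-values as well as neighbouring pixels. The b-values and the helper name are illustrative.

```python
import numpy as np


def stack_signal_decay(dwi_by_b, eps=1e-6):
    """Stack DWI images in ascending b-value order and normalise by the lowest-b image.

    dwi_by_b: dict mapping b-value (s/mm^2) to a 2D image; all images share one shape.
    Returns (sorted b-values, array of shape (n_b, H, W)).
    """
    b_values = sorted(dwi_by_b)
    stack = np.stack([np.asarray(dwi_by_b[b], dtype=float) for b in b_values], axis=0)
    # Axis 0 now holds the normalised decay S(b) / S(b_min) at every pixel, so a
    # 3D convolution kernel of shape (k_b, k_y, k_x) covers neighbouring b-values.
    return np.asarray(b_values), stack / (stack[0] + eps)
```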

4114.

T2-Weighted MRI-Derived Texture Features in Characterization of Prostate Cancer

Dharmesh Singh1, Virendra Kumar2, Chandan J Das3, Anup Singh1, and Amit Mehndiratta1

1Centre for Biomedical Engineering (CBME), Indian Institute of Technology (IIT) Delhi, New Delhi, India, 2Department of NMR, All India Institute of Medical Sciences (AIIMS) Delhi, New Delhi, India, 3Department of Radiology, All India Institute of Medical Sciences (AIIMS) Delhi, New Delhi, India

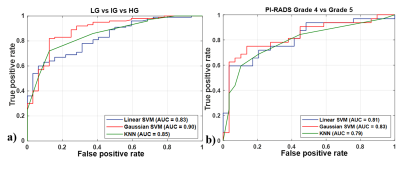

Automatic grading of prostate cancer (PCa) can play a major role in its early diagnosis, which has a significant impact on patient survival rates. The objective of this study was to develop and validate a framework for classifying PCa grades using texture features from T2-weighted MR images. The classification achieved an accuracy of 85.10 ± 2.43% using random forest feature selection and a Gaussian-kernel support-vector machine classifier.

4115.

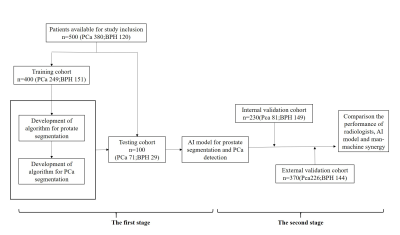

Classification of Cancer at Prostate MRI: Artificial Intelligence versus Clinical Assessment and Human-Machine Synergy

Guiqin Liu1, Guangyu Wu1, Yongming Dai2, Ke Xue2, and Shu Liao3

1Radiology, Renji Hospital, Shanghai Jiaotong University School of Medicine, Shanghai, China, 2United Imaging Healthcare, Shanghai, China, 3Shanghai United Imaging Intelligence Co. Ltd, Shanghai, China

The interpretation of mpMRI is limited by the expertise required and by interobserver variability. Here we present an AI model with an ordinary level of accuracy for diagnosing prostate cancer (PCa); its notably low false-negative rate and high sensitivity could help reduce missed diagnoses of PCa, especially clinically significant PCa (csPCa). To assess its performance in a clinical setting, we curated internal and external validation sets and compared the performance of radiologists, the AI model, and human-machine synergy. Although the AI model alone performed suboptimally, the human-led synergy method performed equivalently to clinical assessment with improved consistency, and it can serve as a comparison standard for more complex deep learning and synergy approaches in the future.

4116.

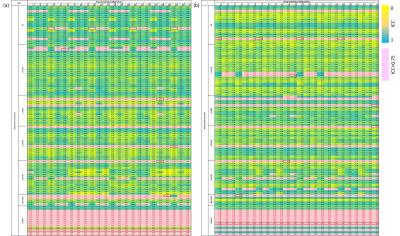

Repeatability of Radiomic Features in T2-Weighted Prostate MRI: Impact of Pre-processing Configurations

Dyah Ekashanti Octorina Dewi1, Mohammed R. S. Sunoqrot1, Gabriel Addio Nketiah1, Elise Sandsmark2, Sverre Langørgen2, Helena Bertilsson3,4, Mattijs Elschot1,2, and Tone Frost Bathen1,2

1Department of Circulation and Medical Imaging, Norwegian University of Science and Technology, Trondheim, Norway, 2Department of Radiology and Nuclear Medicine, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway, 3Department of Cancer Research and Molecular Medicine, Norwegian University of Science and Technology, Trondheim, Norway, 4Department of Urology, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway

Identifying the repeatability of radiomic features in T2-weighted prostate MRI is important for developing consistent imaging biomarkers and evaluating prostate cancer. This study investigates the impact of pre-processing configurations on the repeatability of radiomic features across two short-term measurements. Repeatability was analyzed for combinations of pre-processing settings (quantization, bin number, normalization, and filtering), with features extracted using the PyRadiomics tool. The results show that the repeatability of texture features varies with the anatomical zone or lesion, the pre-processing, and the feature itself. Four texture features provide good repeatability independently of the pre-processing configuration. Moreover, different feature groups have the highest repeatability in the whole prostate, the prostate zones, and the lesions.
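Bin number is one of the pre-processing settings varied above. A generic fixed-bin-count discretization, which maps ROI intensities onto gray levels 1 to N using equally wide bins between the ROI minimum and maximum, is sketched below. It illustrates the idea only and is not PyRadiomics' own implementation; the function name is hypothetical.

```python
import numpy as np


def discretize_fixed_bin_count(roi_values, n_bins=32):
    """Map ROI intensities to gray levels 1..n_bins using equally wide bins over [min, max]."""
    x = np.asarray(roi_values, dtype=float)
    lo, hi = x.min(), x.max()
    if hi == lo:
        return np.ones_like(x, dtype=int)  # constant ROI: a single gray level
    levels = np.floor(n_bins * (x - lo) / (hi - lo)).astype(int) + 1
    # The ROI maximum would land in bin n_bins + 1; fold it into the top bin.
    return np.minimum(levels, n_bins)
```

Because texture matrices are built from these gray levels, changing `n_bins` changes the resulting texture features, which is one reason repeatability can depend on the pre-processing configuration.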

4117.

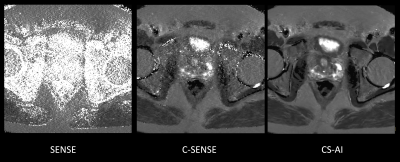

Rapid submillimeter high-resolution prostate T2 mapping with a deep learning constrained Compressed SENSE reconstruction

Masami Yoneyama1, Takashige Yoshida2, Jihun Kwon1, Kohei Yuda2, Yuki Furukawa2, Nobuo Kawauchi2, Johannes M Peeters3, and Marc Van Cauteren3

1Philips Japan, Tokyo, Japan, 2Radiology, Tokyo Metropolitan Police Hospital, Tokyo, Japan, 3Philips Healthcare, Best, Netherlands

Compressed SENSE-AI, based on Adaptive-CS-Net, clearly reduces noise artifacts and significantly improves the accuracy and robustness of T2 values in submillimeter (0.7mm) high-resolution prostate multi-echo turbo spin-echo T2 mapping compared with conventional SENSE and Compressed SENSE techniques, without any penalty for scan parameters. This technique may prove of value in better discriminating prostate cancer from healthy tissues.
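T2 maps from multi-echo turbo spin-echo data are commonly obtained by fitting a mono-exponential decay, S(TE) = S0·exp(-TE/T2), at each voxel. The log-linear least-squares fit below is a minimal sketch of that fitting step only; it says nothing about the Compressed SENSE-AI reconstruction itself, and practical pipelines may instead use nonlinear fitting or exclude early echoes. Names are illustrative.

```python
import numpy as np


def fit_t2(echo_times_ms, signals):
    """Voxel-wise mono-exponential T2 fit via log-linear least squares.

    echo_times_ms: sequence of n_echoes echo times.
    signals: array of shape (n_echoes, ...) with one decay curve per voxel.
    Returns (T2 map in ms, S0 map), each with shape signals.shape[1:].
    """
    te = np.asarray(echo_times_ms, dtype=float)
    s = np.asarray(signals, dtype=float)
    # Clip to avoid log(0), then arrange one column per voxel.
    log_s = np.log(np.clip(s, 1e-12, None)).reshape(len(te), -1)
    # Fit log S = log S0 - TE / T2 for all voxels at once (polyfit accepts 2D y).
    slope, intercept = np.polyfit(te, log_s, 1)
    t2 = -1.0 / slope
    s0 = np.exp(intercept)
    return t2.reshape(s.shape[1:]), s0.reshape(s.shape[1:])
```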

4118.

Sensitivity of radiomics to inter-reader variations in prostate cancer delineation on MRI should be considered to improve generalizability

Rakesh Shiradkar1, Michael Sobota1, Leonardo Kayat Bittencourt2, Sreeharsha Tirumani2, Justin Ream3, Ryan Ward3, Amogh Hiremath1, Ansh Roge1, Amr Mahran1, Andrei Purysko3, Lee Ponsky2, and Anant Madabhushi1

1Case Western Reserve University, Cleveland, OH, United States, 2University Hospitals Cleveland Medical Center, Cleveland, OH, United States, 3Cleveland Clinic, Cleveland, OH, United States

Radiomic approaches for prostate cancer risk stratification largely depend on radiologist delineation of prostate cancer regions of interest (ROIs) on MRI. In this study, we acquired multi-reader delineations of ROIs, derived radiomic features within the ROIs, and trained and evaluated machine learning classifiers. We observed that variation in delineations did not affect classification performance within a cohort, but it did affect performance when the classifiers were evaluated on an independent validation set. We also observed that a more conservative approach to delineation may ensure better generalizability and classification performance of machine learning models.

4119.

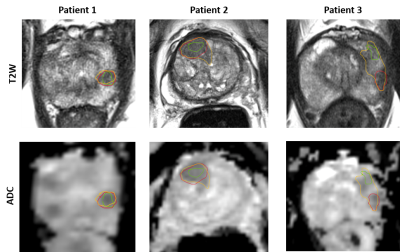

Radiomics models based on ADC maps for predicting high-grade prostate cancer at radical prostatectomy: comparison with preoperative biopsy

Chao Han1, Shuai Ma1, Xiang Liu1, Yi Liu1, Changxin Li2, Yaofeng Zhang2, Xiaodong Zhang1, and Xiaoying Wang1

1Department of Radiology, Peking University First Hospital, Beijing, China, 2Beijing Smart Tree Medical Technology Co. Ltd., Beijing, China

MR-based radiomics has shown feasibility in predicting high-grade prostate cancer (PCa), but most volumes of interest (VOIs) have been based on manual segmentation. We developed and tested 4 radiomics models based on manual/automatic segmentation of the prostate gland/PCa lesion from apparent diffusion coefficient (ADC) maps to predict high-grade (Gleason score, GS ≥4+3) PCa at radical prostatectomy. Radiomics models based on automatic segmentation may obtain roughly the same diagnostic efficacy as manual segmentation and preoperative biopsy, which suggests the possibility of a fully automatic workflow combining automated segmentation and radiomics analysis.

|

|||

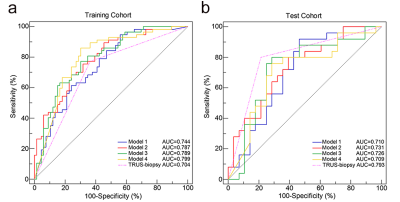

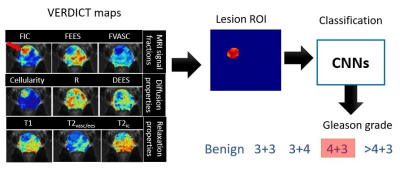

4120. |

Non-invasive Gleason Score Classification with VERDICT-MRI

Vanya V Valindria1, Saurabh Singh2, Eleni Chiou1, Thomy Mertzanidou1, Baris Kanber1, Shonit Punwani2, Marco Palombo1, and Eleftheria Panagiotaki1

1Centre for Medical Image Computing, Department of Computer Science, University College London, London, United Kingdom, 2Centre for Medical Imaging, University College London, London, United Kingdom

This study proposes non-invasive Gleason Score (GS) classification for prostate cancer with VERDICT-MRI using convolutional neural networks (CNNs). We evaluate GS classification using parametric maps from the VERDICT prostate model with compensated relaxation. We classify lesions using two CNN architectures: DenseNet and SE-ResNet. Results show that VERDICT achieves high GS classification performance using SE-ResNet with all parametric maps as input. VERDICT maps also achieve higher metrics than those reported in published multi-parametric MRI studies of GS classification.

4121.

Prostate Cancer Risk Maps Derived from Multi-parametric MRI and Validated by Histopathology

Matthew Gibbons1, Jeffry P Simko2,3, Peter R Carroll2, and Susan Noworolski1

1Radiology and Biomedical Imaging, University of California, San Francisco, San Francisco, CA, United States, 2Urology, University of California, San Francisco, San Francisco, CA, United States, 3Pathology, University of California, San Francisco, San Francisco, CA, United States

Multi-parametric MRI has proven to be a clinically useful tool for assessing prostate cancer through a qualitative assessment of multiple parameters within the PI-RADS guidelines. Our objective is to leverage quantitative data combinations to characterize progression risk using an automated procedure. This study showed the feasibility of MRI-generated cancer risk maps, created from a combination of pre-prostatectomy multiparametric MR images (mpMRI), for detecting prostate cancer lesions >0.1 cc, as validated with histopathology. The method also quantified the volume of cancer within the prostate. Method improvements were identified by determining the root causes of over- and underestimation of cancer volumes.

4122.

Deep learning reconstruction enables highly accelerated T2 weighted prostate MRI

Patricia M Johnson1, Angela Tong1, Paul Smereka1, Awani Donthireddy1, Robert Petrocelli1, Hersh Chandarana1, and Florian Knoll1

1Center for Advanced Imaging Innovation and Research (CAI2R), Department of Radiology, New York University School of Medicine, New York, NY, United States

Early diagnosis and treatment of prostate cancer (PCa) can be curative, but the PSA blood test is limited in detecting clinically significant PCa. Current abbreviated MR imaging protocols do not reduce scan time sufficiently for practical routine screening. In this work, we extend a variational network (VN) deep learning image reconstruction method to accelerated clinical prostate imaging. Our results show that VN reconstructions of accelerated T2W images have image quality comparable to the current clinical protocol and require ≤1 minute of acquisition time, which can enable rapid screening prostate MRI.

4123.

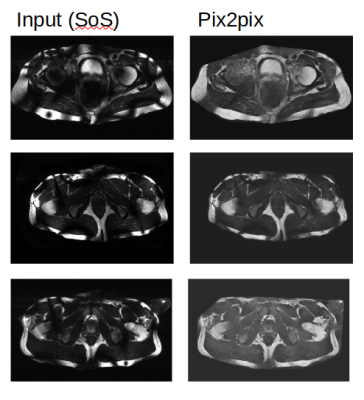

Reduction of B1-field induced inhomogeneity for body imaging at 7T using deep learning and synthetic training data.

Seb Harrevelt1, Lieke Wildenberg2, Dennis Klomp2, C.A.T. van den Berg2, Josien Pluim3, and Alexander Raaijmakers1

1TU Eindhoven, Utrecht, Netherlands, 2UMC Utrecht, Utrecht, Netherlands, 3TU Eindhoven, Rossum, Netherlands

Ultra-high-field MR images suffer from severe image inhomogeneity and artefacts due to the B1 field. Deep learning is a potential solution to this problem, but training is difficult because no perfectly homogeneous 7T images exist that could serve as ground truth. In this work, artificial training data were created using numerically simulated 7T B1 fields, perfectly homogeneous 1.5T images, and a signal model that adds typical 7T B1 inhomogeneity on top of the 1.5T images. A Pix2Pix model was trained and tested on out-of-domain data, where it outperforms classic bias-field reduction algorithms.
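The signal model used to add 7T B1 inhomogeneity is not given in the abstract. A simplified, hypothetical version (ignoring relaxation effects) multiplies a homogeneous image by a receive sensitivity |B1-| and by a transmit term in which the local flip angle scales with relative |B1+|, normalized so that a voxel at the nominal field is unchanged. The flip angle and function name are assumptions. The corrupted image and the original would then form one (input, target) training pair for an image-to-image model such as Pix2Pix.

```python
import numpy as np


def add_b1_inhomogeneity(image, b1_plus_rel, b1_minus_rel, flip_deg=30.0):
    """Impose a simulated transmit/receive B1 pattern on a homogeneous image (simplified model).

    b1_plus_rel: |B1+| relative to nominal (1.0 gives the intended flip angle).
    b1_minus_rel: relative receive sensitivity |B1-|.
    """
    alpha = np.deg2rad(flip_deg)
    # Transmit term: local flip angle scales with relative |B1+|; dividing by
    # sin(alpha) keeps a voxel at the nominal field at its original intensity.
    transmit = np.sin(alpha * np.abs(b1_plus_rel)) / np.sin(alpha)
    # Receive term: multiply by the relative coil sensitivity.
    return np.asarray(image, dtype=float) * transmit * np.abs(b1_minus_rel)
```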

4124.

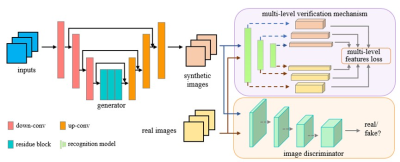

Deep learning for synthesizing apparent diffusion coefficient maps of the prostate: A tentative study based on generative adversarial networks

Lei Hu1, Jungong Zhao1, Caixia Fu2, and Thomas Benkert3

1Department of Diagnostic and Interventional Radiology, Shanghai Jiao Tong University Affiliated Sixt, Shanghai, China, 2MR Application Development, Siemens Shenzhen Magnetic Resonance Ltd, Shenzhen, China, 3MR Application Predevelopment, Siemens Healthcare, Erlangen, Germany

We developed a supervised learning framework based on a GAN to synthesize apparent diffusion coefficient maps (s-ADC) from full-FOV DWI images, with zoomed-FOV ADC (z-ADC) serving as the reference. Synthesized ADC using DWI with b = 1000 s/mm² (s-ADCb1000) had significantly lower RMSE and higher PSNR, SSIM, and FSIM than s-ADCb50 and s-ADCb1500 (all P < 0.001). Both z-ADC and s-ADCb1000 had better reproducibility of quantitative ADC values in all evaluated tissues and better performance in tumor detection and classification than full-FOV ADC (f-ADC). A GAN-based deep learning framework is a promising method to synthesize realistic z-ADC sets with good image quality and accuracy in prostate cancer detection.
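Two of the image-quality metrics listed above, RMSE and PSNR, can be computed as below for a synthesized map against its reference. PSNR depends on the assumed data range; here it defaults to the reference map's range, which is an assumption rather than the authors' stated choice. SSIM and FSIM are omitted, and the function name is illustrative.

```python
import numpy as np


def rmse_psnr(synth, ref, data_range=None):
    """RMSE and PSNR (dB) of a synthesised map against its reference map."""
    synth = np.asarray(synth, dtype=float)
    ref = np.asarray(ref, dtype=float)
    rmse = np.sqrt(np.mean((synth - ref) ** 2))
    if data_range is None:
        # Assumption: use the dynamic range of the reference map as the PSNR peak.
        data_range = ref.max() - ref.min()
    psnr = 20 * np.log10(data_range / rmse) if rmse > 0 else np.inf
    return rmse, psnr
```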


The International Society for Magnetic Resonance in Medicine is accredited by the Accreditation Council for Continuing Medical Education to provide continuing medical education for physicians.