Hongyan Liu¹, Oscar van der Heide¹, Cornelis A.T. van den Berg¹, and Alessandro Sbrizzi¹

¹Computational Imaging Group for MR diagnostics & therapy, Center for Image Sciences, UMC Utrecht, Utrecht, Netherlands

We propose a recurrent neural network (RNN) model for rapidly computing large-scale MR signals and their derivatives. The proposed model can accelerate a range of quantitative MRI (qMRI) applications, in particular MRF dictionary generation and optimal experimental design, so that these computations complete within seconds.

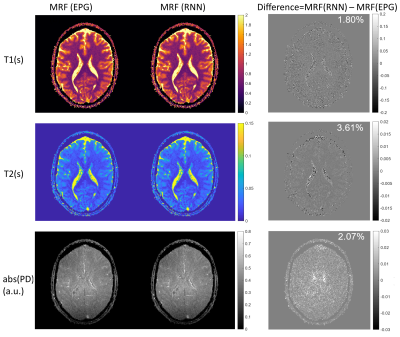

Fig.4. MRF reconstructions of in-vivo data using EPG- and RNN-generated dictionaries. [First, second, and third rows] $$$T_1$$$, $$$T_2$$$, and $$$abs(PD)$$$ maps for the in-vivo brain data, respectively. NRMSEs are reported in the top-right corner of the difference maps.

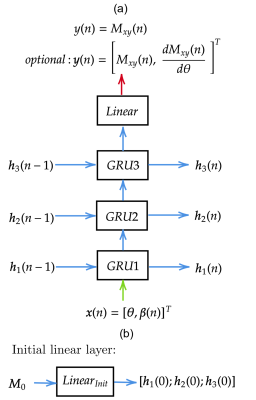

Fig.1. RNN structure for learning the EPG model. (a) RNN architecture with three stacked Gated Recurrent Units (GRUs), shown for the n-th time step. At each time step, GRU1 receives the input x(n), which includes the tissue parameters θ and the time-varying sequence parameters β(n). The hidden states h1(n), h2(n), and h3(n), each of size 32x1, are computed and passed on to the next time step. A Linear layer is added after GRU3 to compute the magnetization and its derivatives from h3(n). (b) An initial linear layer, LinearInit, is used to compute the initial hidden states h1(0), h2(0), and h3(0) from the initial magnetization M0.
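
The data flow described in Fig.1 can be sketched as a plain NumPy forward pass. This is a minimal illustration under stated assumptions, not the authors' implementation: the weights are random and untrained, the standard GRU gate equations are assumed, and the sizes `N_THETA`, `N_BETA`, `N_M0`, and `N_OUT`, as well as all function names, are hypothetical choices that the abstract does not specify. Only the three stacked GRUs, the hidden size of 32, the output Linear layer, and LinearInit are taken from the caption.

```python
import numpy as np

HIDDEN = 32    # hidden-state size stated in the Fig.1 caption
N_LAYERS = 3   # GRU1, GRU2, GRU3
N_THETA = 2    # assumption: theta = (T1, T2)
N_BETA = 3     # assumption: beta(n) holds three sequence parameters per step
N_M0 = 3       # assumption: size of the initial magnetization M0
N_OUT = 3      # assumption: one signal value plus two derivatives


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_gru(rng, n_in, n_hidden):
    """Random (untrained) weights for one GRU cell; gates stacked as [r, z, n]."""
    s = 1.0 / np.sqrt(n_hidden)
    return {
        "W": rng.uniform(-s, s, (3 * n_hidden, n_in)),
        "U": rng.uniform(-s, s, (3 * n_hidden, n_hidden)),
        "bW": np.zeros(3 * n_hidden),
        "bU": np.zeros(3 * n_hidden),
    }


def gru_step(p, x, h):
    """One standard GRU update; returns the new hidden state."""
    wr, wz, wn = np.split(p["W"] @ x + p["bW"], 3)
    ur, uz, un = np.split(p["U"] @ h + p["bU"], 3)
    r = sigmoid(wr + ur)          # reset gate
    z = sigmoid(wz + uz)          # update gate
    n = np.tanh(wn + r * un)      # candidate state
    return (1.0 - z) * n + z * h


def init_model(seed=0):
    rng = np.random.default_rng(seed)
    n_x = N_THETA + N_BETA
    return {
        "grus": [init_gru(rng, n_x if k == 0 else HIDDEN, HIDDEN)
                 for k in range(N_LAYERS)],
        # LinearInit: maps M0 to the three initial hidden states at once
        "W_init": rng.normal(0.0, 0.1, (N_LAYERS * HIDDEN, N_M0)),
        "b_init": np.zeros(N_LAYERS * HIDDEN),
        # Output Linear layer applied to h3(n)
        "W_out": rng.normal(0.0, 0.1, (N_OUT, HIDDEN)),
        "b_out": np.zeros(N_OUT),
    }


def forward(model, theta, beta, m0):
    """theta: (N_THETA,), beta: (n_steps, N_BETA), m0: (N_M0,) -> (n_steps, N_OUT)."""
    # h1(0), h2(0), h3(0) from the initial magnetization
    h = list(np.split(model["W_init"] @ m0 + model["b_init"], N_LAYERS))
    outputs = []
    for beta_n in beta:
        x = np.concatenate([theta, beta_n])   # x(n) = [theta, beta(n)]
        for k, p in enumerate(model["grus"]):
            h[k] = gru_step(p, x, h[k])       # update layer k's hidden state
            x = h[k]                          # feed it to the next GRU
        outputs.append(model["W_out"] @ h[-1] + model["b_out"])
    return np.stack(outputs)
```

Calling `forward(init_model(), theta, beta, m0)` with a `beta` array of shape `(n_steps, N_BETA)` returns one output vector per time step. Because the tissue parameters enter only through `x(n)`, many parameter combinations could in principle be evaluated together by adding a batch dimension, which is the kind of large-scale evaluation the synopsis refers to.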