Antoine Théberge¹, Christian Desrosiers², Maxime Descoteaux¹, and Pierre-Marc Jodoin¹

¹Faculté des Sciences, Université de Sherbrooke, Sherbrooke, QC, Canada; ²Département de génie logiciel et des TI, École de technologie supérieure, Montréal, QC, Canada

By learning tractography algorithms via deep reinforcement learning, we obtain results competitive with supervised learning approaches while generalizing far better to new datasets than prior work.

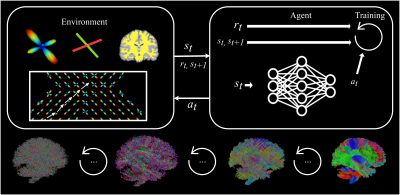

Representation of the framework. Top: The RL loop, in which states, rewards and actions are exchanged between the learning agent and the environment. Top-left: The environment keeps track of the reconstructed streamlines and computes states and rewards accordingly. Top-right: The agent uses the states and rewards it receives to improve itself and output actions. Bottom: Reconstructed tractograms become progressively more plausible as training proceeds.
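The exchange of states, rewards and actions described in the caption can be sketched as a short loop. This is only a toy illustration under stated assumptions, not the authors' implementation: `ToyTrackingEnv`, `RandomAgent` and `run_episode` are hypothetical names, the state is simply the last streamline point, and the reward (alignment of each step with a fixed direction) stands in for whatever reward the actual framework computes.

```python
import math
import random


class ToyTrackingEnv:
    """Hypothetical environment: grows one 2D streamline step by step."""

    def __init__(self, seed_point=(0.0, 0.0), step_size=1.0, max_steps=10):
        self.seed_point = seed_point
        self.step_size = step_size
        self.max_steps = max_steps
        self.streamline = [seed_point]

    def reset(self):
        # Start a new streamline at the seed point; the state is the last point.
        self.streamline = [self.seed_point]
        return self.streamline[-1]

    def step(self, angle):
        # The action is a step direction, given as an angle in radians.
        x, y = self.streamline[-1]
        dx, dy = math.cos(angle), math.sin(angle)
        self.streamline.append((x + self.step_size * dx, y + self.step_size * dy))
        # Assumed toy reward: alignment of the step with a fixed direction (1, 0).
        reward = dx
        done = len(self.streamline) - 1 >= self.max_steps
        return self.streamline[-1], reward, done


class RandomAgent:
    """Placeholder agent: samples actions at random and learns nothing."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def act(self, state):
        return self.rng.uniform(-math.pi / 2, math.pi / 2)

    def update(self, state, action, reward, next_state):
        # A learning agent would improve its policy here; this one does not.
        pass


def run_episode(env, agent):
    """One pass of the loop: state -> action -> (next state, reward)."""
    state = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = agent.act(state)
        next_state, reward, done = env.step(action)
        agent.update(state, action, reward, next_state)
        state = next_state
        total_reward += reward
    return env.streamline, total_reward


streamline, total_reward = run_episode(ToyTrackingEnv(), RandomAgent(seed=0))
```

Replacing `RandomAgent.update` with an actual policy-improvement step is what would make the agent learn; the loop structure itself stays the same.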

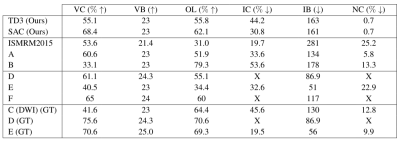

Results for experiment 2. A, B, C refer to the same methods as in Figure 3. D refers to Neher et al. [15, 16], E refers to Benou et al. [17], F refers to Wegmayr et al. (2020) [18]. ISMRM2015 refers to the mean results of the original challenge [11]. (GT) indicates that the method was trained on the ground-truth bundles. X indicates measures that were not reported by the original authors. Reported metrics are the same as in Figure 3.