Malte Hoffmann1,2, Benjamin Billot3, Juan Eugenio Iglesias1,2,3,4, Bruce Fischl1,2,4, and Adrian V Dalca1,2,4

1Department of Radiology, Harvard Medical School, Boston, MA, United States, 2Department of Radiology, Massachusetts General Hospital, Boston, MA, United States, 3Centre for Medical Image Computing, University College London, London, United Kingdom, 4Computer Science and Artificial Intelligence Laboratory, MIT, Cambridge, MA, United States

We leverage a generative strategy for diverse synthetic label maps and images to learn deformable registration without acquired images. Training on these data results in powerful neural networks that generalize to a landscape of unseen MRI contrasts, eliminating the need for retraining.

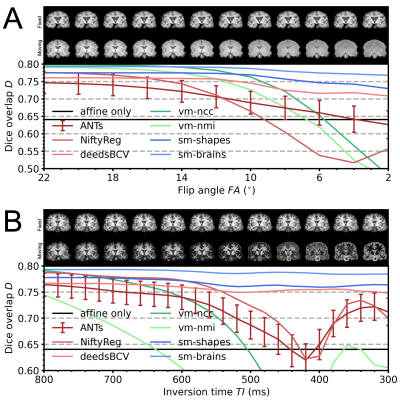

Figure 4. Robustness to moving-image MRI contrast across 10 realistic (A) spoiled gradient-echo and (B) MPRAGE image pairs. In each cross-subject registration, the fixed image has consistent T1w contrast. Towards the right, the T1-contrast weighting of the moving image increases. Our method (sm) remains robust while most others break down, including VoxelMorph (vm). As errors are comparable across methods, we show error bars for ANTs only; they indicate the standard error of the mean over subjects.

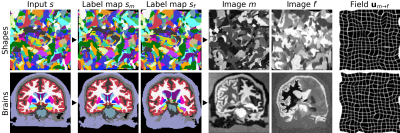

Figure 3. Image synthesis from random shapes (top) or, if available, from brain segmentations (bottom). We generate a pair of label maps {sm,sf} and from them images {m,f} with arbitrary contrast. The registration network then predicts the displacement u. In practice, we generate {sm,sf} from separate subjects if anatomical label maps are used. We emphasize that no acquired images are involved.
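
The synthesis idea in Figure 3 can be illustrated with a minimal 2D NumPy sketch. It is not the authors' implementation: the function names (`smooth`, `random_label_map`, `warp_nearest`, `synthesize_image`), all parameter values, the argmax-over-smoothed-noise construction of random shapes, and the way the moving label map is derived from the fixed one by a random smooth warp are assumptions made for illustration only.

```python
import numpy as np


def smooth(x, sigma):
    """Gaussian-smooth a 2D array via the FFT (periodic boundaries)."""
    fy = np.fft.fftfreq(x.shape[0])[:, None]
    fx = np.fft.fftfreq(x.shape[1])[None, :]
    # Fourier transform of a Gaussian with standard deviation sigma (in pixels).
    kernel = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fy ** 2 + fx ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(x) * kernel))


def random_label_map(shape, num_labels, rng, sigma=8.0):
    """Random shapes (assumed recipe): argmax over smoothed noise channels."""
    noise = rng.standard_normal((num_labels, *shape))
    smoothed = np.stack([smooth(n, sigma) for n in noise])
    return np.argmax(smoothed, axis=0)


def warp_nearest(labels, rng, sigma=16.0, max_disp=4.0):
    """Deform a label map with a random smooth displacement (assumption)."""
    disp = np.stack([smooth(rng.standard_normal(labels.shape), sigma)
                     for _ in range(2)])
    disp *= max_disp / np.abs(disp).max()  # largest shift is max_disp pixels
    grid = np.stack(np.meshgrid(*map(np.arange, labels.shape), indexing="ij"))
    idx = np.rint(grid + disp).astype(int)
    for axis, size in enumerate(labels.shape):
        idx[axis] = np.clip(idx[axis], 0, size - 1)
    # Nearest-neighbor lookup keeps the output a valid label map.
    return labels[idx[0], idx[1]]


def synthesize_image(labels, num_labels, rng, noise_std=0.05, blur_sigma=1.0):
    """Image with arbitrary contrast: one random mean intensity per label."""
    means = rng.uniform(0.0, 1.0, size=num_labels)
    image = means[labels] + rng.normal(0.0, noise_std, size=labels.shape)
    image = smooth(image, blur_sigma)
    image -= image.min()
    return image / image.max()  # rescale to [0, 1]


rng = np.random.default_rng(0)
num_labels = 4
s_f = random_label_map((64, 64), num_labels, rng)  # fixed label map
s_m = warp_nearest(s_f, rng)                       # moving label map
f = synthesize_image(s_f, num_labels, rng)         # fixed image
m = synthesize_image(s_m, num_labels, rng)         # moving image
```

Because `synthesize_image` draws new per-label intensities on every call, `m` and `f` generally differ in contrast even though they share the same underlying shapes, which is the property that lets a network trained on such pairs see a wide range of contrasts without any acquired images.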