Alexandros Patsanis 1, Mohammed R. S. Sunoqrot 1, Elise Sandsmark 2, Sverre Langørgen 2, Helena Bertilsson 3,4, Kirsten M. Selnæs 1,2, Hao Wang 5, Tone F. Bathen 1,2, and Mattijs Elschot 1,2

1 Department of Circulation and Medical Imaging, Norwegian University of Science and Technology - NTNU, Trondheim, Norway; 2 Department of Radiology and Nuclear Medicine, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway; 3 Department of Clinical and Molecular Medicine, Norwegian University of Science and Technology - NTNU, Trondheim, Norway; 4 Department of Urology, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway; 5 Department of Computer Science, Norwegian University of Science and Technology - NTNU, Gjøvik, Norway

Weakly-supervised GANs trained on normalized, randomly sampled images of size 128 × 128 with 0.5 mm pixel spacing gave the best AUC for detection of prostate cancer on T2-weighted MR images.
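AUC is the metric used to compare configurations, but the abstract does not state here how image-level scores were formed. As a minimal sketch, assuming each test image receives one scalar anomaly score (higher meaning more likely cancer), the AUC equals the fraction of (positive, negative) image pairs in which the positive image scores higher, with ties counted as one half (the Mann-Whitney formulation). The function name `roc_auc` and the example scores are hypothetical.

```python
"""Sketch: image-level ROC AUC from scalar anomaly scores.

Assumption (not from the abstract): every test image has one score,
where a higher score means "more likely cancer".
"""


def roc_auc(scores_pos, scores_neg):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 1/2.

    This pairwise (Mann-Whitney) form equals the area under the empirical
    ROC curve. It is O(n * m), which is acceptable for a sketch.
    """
    if not scores_pos or not scores_neg:
        raise ValueError("need at least one positive and one negative score")
    correct = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(scores_pos) * len(scores_neg))


if __name__ == "__main__":
    # Hypothetical scores for illustration only; not results from this study.
    positives = [0.9, 0.7, 0.4]
    negatives = [0.3, 0.5, 0.1]
    print(roc_auc(positives, negatives))  # 8 of 9 pairs ranked correctly
```

For larger test sets, a library routine such as scikit-learn's `roc_auc_score(y_true, y_score)` computes the same quantity more efficiently.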

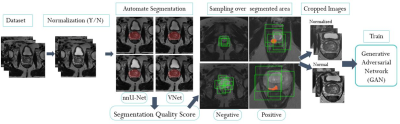

Figure 1: The proposed end-to-end pipeline includes automated intensity normalization using AutoRef⁵, automated prostate segmentation using VNet⁶ and nnU-Net⁷ followed by an automated quality control step, and sampling of cropped images with different techniques and settings. The cropped images were then used to train a weakly-supervised GAN (Fixed-Point GAN) and an unsupervised GAN (f-AnoGAN).
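The sampling techniques and settings are not detailed in this abstract. A minimal sketch, under stated assumptions, of the arithmetic and of one plausible form of random sampling: resampling to 0.5 mm spacing scales each image dimension by (original spacing / 0.5 mm), and a 128 × 128 crop at 0.5 mm covers 128 × 0.5 mm = 64 mm in each direction. Drawing crop centres uniformly from prostate-mask pixels, clamping crops at the image border, and the helper names `resampled_shape` and `random_crops` are hypothetical choices, not the authors' implementation.

```python
"""Sketch: resampling arithmetic and random 128 x 128 crop sampling.

Assumptions (not from the abstract): the slice is a 2D list of intensities
that is already normalized and resampled to 0.5 mm in-plane spacing, and
crop centres are drawn uniformly from pixels inside the prostate mask.
"""
import random

CROP = 128        # crop size in pixels (128 x 128, as in the abstract)
TARGET_MM = 0.5   # target in-plane pixel spacing in mm


def resampled_shape(shape, spacing_mm, target_mm=TARGET_MM):
    """Rows/cols after resampling to target_mm spacing, rounded to integers."""
    return tuple(round(n * s / target_mm) for n, s in zip(shape, spacing_mm))


def random_crops(image, mask, n_crops, size=CROP, seed=0):
    """Return n_crops size x size crops centred on randomly chosen mask pixels.

    The top-left corner is clamped so every crop stays inside the image;
    a centre near the border therefore shifts the crop instead of padding it.
    """
    rows, cols = len(image), len(image[0])
    if rows < size or cols < size:
        raise ValueError("image is smaller than the crop size")
    inside = [(r, c) for r in range(rows) for c in range(cols) if mask[r][c]]
    if not inside:
        raise ValueError("mask is empty")
    rng = random.Random(seed)  # seeded for reproducible sampling
    crops = []
    for _ in range(n_crops):
        r, c = rng.choice(inside)
        r0 = min(max(r - size // 2, 0), rows - size)
        c0 = min(max(c - size // 2, 0), cols - size)
        crops.append([row[c0:c0 + size] for row in image[r0:r0 + size]])
    return crops


if __name__ == "__main__":
    # Synthetic 200 x 200 slice with a square "prostate" mask, for illustration.
    img = [[float(r + c) for c in range(200)] for r in range(200)]
    msk = [[80 <= r < 120 and 80 <= c < 120 for c in range(200)] for r in range(200)]
    patches = random_crops(img, msk, n_crops=4)
    print(len(patches), len(patches[0]), len(patches[0][0]))
    print(resampled_shape((384, 384), (0.6, 0.6)))
```

Clamping keeps every crop filled with real image data; zero-padding at the border would be an equally reasonable alternative.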

Figure 3: f-AnoGAN and Fixed-Point GAN. 3.a) f-AnoGAN: linear latent-space interpolation between random endpoints of the trained f-AnoGAN shows that the model does not focus on only one part of the training dataset. 3.b) f-AnoGAN: mapping from image space (query) back to the GAN's latent space should yield images that resemble the queries; here, the mapped images are similar but not identical. 3.c) f-AnoGAN: a positive test case where detection fails. 3.d) Fixed-Point GAN: negative and positive test cases; no difference is found for the negative case, whereas the positive case is detected and localized (difference).
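The abstract does not state which anomaly score or localization rule was used. For orientation, a minimal sketch of the quantities behind Figure 3, assuming: the izi_f score from the original f-AnoGAN paper (mean squared image residual between x and its reconstruction G(E(x)), plus κ times the mean squared residual of discriminator features f); plain linear interpolation between latent vectors; and, for Fixed-Point GAN, an absolute difference between an input and its translation to the healthy (negative) domain. The trained networks are not shown, so their outputs are passed in as plain lists; all function names and the default κ = 1.0 are hypothetical.

```python
"""Sketch: f-AnoGAN-style anomaly score, latent interpolation, difference map.

Assumptions: vectors are flat lists of floats and images are 2D lists; the
encoder E, generator G and discriminator feature extractor f are trained
networks not shown here, so their outputs are passed in directly.
kappa = 1.0 is a placeholder weight, not a value from the abstract.
"""


def mse(a, b):
    """Mean squared error between two equally long flat vectors."""
    if len(a) != len(b):
        raise ValueError("length mismatch")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def izif_score(x, x_rec, feat_x, feat_rec, kappa=1.0):
    """izi_f score: image residual + kappa * discriminator-feature residual.

    x_rec stands for G(E(x)); feat_x and feat_rec stand for f(x) and
    f(G(E(x))). A higher score means the image is less "normal".
    """
    return mse(x, x_rec) + kappa * mse(feat_x, feat_rec)


def interpolate(z0, z1, t):
    """Linear latent-space interpolation (1 - t) * z0 + t * z1, t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


def difference_map(x, x_healthy):
    """Pixel-wise |x - x_healthy| for 2D images given as nested lists.

    x_healthy would be the generator's translation of x to the healthy
    domain: a near-zero map means nothing was changed, while large values
    localize regions the model considers abnormal.
    """
    return [[abs(a - b) for a, b in zip(ra, rb)] for ra, rb in zip(x, x_healthy)]


if __name__ == "__main__":
    # Toy numbers for illustration only.
    print(izif_score([1, 2, 3, 4], [1, 2, 3, 2], [0.5, 0.5], [0.5, 1.5]))
    print(interpolate([0.0, 0.0], [2.0, 4.0], 0.5))
    print(difference_map([[1, 2], [3, 4]], [[1, 2], [3, 1]]))
```

In the toy call, the image residual is 4 / 4 = 1.0 and the feature residual is 1 / 2 = 0.5, so the score with κ = 1 is 1.5; the difference map is non-zero only at the one changed pixel, mirroring how panel 3.d localizes the positive case.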