Maarten Terpstra1,2, Matteo Maspero1,2, Tom Bruijnen1,2, Joost Verhoeff1, Jan Lagendijk1, and Cornelis A.T. van den Berg1,2

1Department of Radiotherapy, University Medical Center Utrecht, Utrecht, Netherlands, 2Computational Imaging Group for MR diagnostics & therapy, University Medical Center Utrecht, Utrecht, Netherlands

We propose a deep learning approach for real-time motion estimation from highly accelerated NUFFT-reconstructed acquisitions. At R=30, the model produces accurate motion fields within 200 ms, including k-space acquisition. The proposed model even generalizes to 4D-CT without retraining.

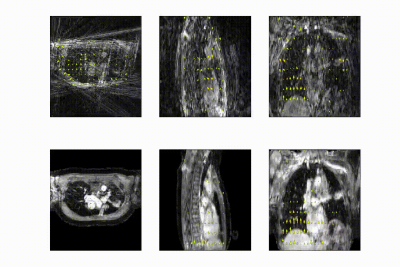

Figure 3: Typical motion reconstruction at R~18. The DVFs in the top row were computed from NUFFT-reconstructed respiratory-resolved images with 70 respiratory phases (R~18), while the bottom row shows optical flow computed on compressed sense reconstructions. Relative to the compressed sense optical flow DVFs, our model achieves a mean end-point error (EPE) of \(2.48\pm0.42\) mm. However, some motion artifacts remain at the anterior side due to undersampling.
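To make the EPE metric in this caption concrete, the following is a minimal sketch of a per-voxel end-point error between two DVFs. It is not the authors' evaluation code: the function name `endpoint_error`, the `(3, X, Y, Z)` array layout, and the `voxel_size_mm` parameter are assumptions made for illustration.

```python
import numpy as np


def endpoint_error(dvf_pred, dvf_ref, voxel_size_mm=(1.0, 1.0, 1.0)):
    """Per-voxel end-point error (in mm) between two 3D DVFs.

    Both DVFs are assumed to have shape (3, X, Y, Z) and to store
    displacements in voxel units; voxel_size_mm converts them to mm.
    """
    dvf_pred = np.asarray(dvf_pred, dtype=float)
    dvf_ref = np.asarray(dvf_ref, dtype=float)
    # Broadcast the voxel size over the three displacement components.
    scale = np.asarray(voxel_size_mm, dtype=float).reshape(3, 1, 1, 1)
    diff_mm = (dvf_pred - dvf_ref) * scale
    # Euclidean norm of the displacement difference at every voxel.
    return np.sqrt(np.sum(diff_mm ** 2, axis=0))


# Usage: a reference field that differs from the prediction by (3, 4, 0) voxels.
pred = np.zeros((3, 4, 4, 4))
ref = np.zeros((3, 4, 4, 4))
ref[0] = 3.0
ref[1] = 4.0
epe_map = endpoint_error(pred, ref)
mean_epe = epe_map.mean()
```

Averaging `epe_map` over a volume, or over a region of interest, gives a single mean EPE value. How the reported mean and standard deviation were aggregated is not stated in the caption.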

Figure 1: Schematic of 4D reconstruction and multi-level motion estimation. Long radial acquisitions (7.3 min) with motion mixing are respiratory-binned and reconstructed at three spatial resolutions by cropping k-space around k0. These images, together with optical flow to the inhale, exhale, and mid-ventilation positions, serve as training data for our multi-resolution motion model, which learns a three-dimensional deformation vector field (DVF). The lowest-resolution CNN uses 3x3x3 3D convolutional filters, the middle CNN uses 5x5x5 filters, and the final CNN again uses 3x3x3 filters.
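The idea of obtaining lower spatial resolutions by cropping k-space around k0 can be sketched as follows. This is an illustration only: it uses a Cartesian FFT of an existing image volume as a stand-in, whereas the acquisitions described here are radial and NUFFT-reconstructed. The function name `crop_kspace_lowres` and the integer `factor` parameter are hypothetical.

```python
import numpy as np


def crop_kspace_lowres(image, factor):
    """Return a lower-resolution volume by keeping only central k-space.

    image  : 3D array (real or complex)
    factor : integer reduction of the matrix size along every axis
    """
    image = np.asarray(image)
    # Move the k-space centre (k0) to the middle of the array.
    kspace = np.fft.fftshift(np.fft.fftn(image))
    shape = np.array(image.shape)
    new_shape = shape // factor
    # Choose the start so that k0 stays at index n // 2 after cropping.
    start = shape // 2 - new_shape // 2
    window = tuple(slice(s, s + n) for s, n in zip(start, new_shape))
    k_crop = kspace[window]
    low = np.fft.ifftn(np.fft.ifftshift(k_crop))
    # Rescale so that image intensities are preserved.
    return low * (k_crop.size / kspace.size)


# Usage: halve the matrix size of a constant test volume.
volume = np.ones((8, 8, 8))
low_res = crop_kspace_lowres(volume, factor=2)
```

Applying the function with two different factors, alongside the uncropped volume, would give three resolution levels of the kind the schematic describes. The actual cropping factors are not given in the caption.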