Mahmut Yurt1,2, Salman Ul Hassan Dar1,2, Berk Tinaz1,2,3, Muzaffer Ozbey1,2, Yilmaz Korkmaz1,2, and Tolga Çukur1,2,4

1Department of Electrical and Electronics Engineering, Bilkent University, Ankara, Turkey, 2National Magnetic Resonance Research Center, Bilkent University, Ankara, Turkey, 3Department of Electrical and Computer Engineering, University of Southern California, Los Angeles, CA, United States, 4Neuroscience Program, Aysel Sabuncu Brain Research Center, Bilkent University, Ankara, Turkey

We propose a semi-supervised model for mutually accelerated multi-contrast MRI that synthesizes fully-sampled target images without requiring large datasets of costly fully-sampled source or ground-truth target acquisitions.

Fig. 1: a) Supervised methods learn a source-to-target mapping using costly datasets of fully-sampled images of the source- and target-contrasts. b) The proposed semi-supervised model can be trained to synthesize fully-sampled target images using only undersampled ground-truth acquisitions of the target-contrast, and it allows recovery from undersampled sources to further reduce data requirements. c) The proposed method uses a selective loss function that measures the error only on the acquired k-space coefficients, through k-space, image-domain L1, and adversarial loss terms.
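The selective loss idea in Fig. 1c can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names (`selective_losses`, `random_mask`), the single-coil 2D setting, the uniform random sampling pattern, and the normalization are all assumptions made for illustration, and the adversarial term is omitted. The sketch only shows how restricting the error to acquired k-space locations makes the loss insensitive to coefficients that were never measured.

```python
import numpy as np

def random_mask(shape, accel, rng):
    """Hypothetical uniform random sampling mask keeping roughly 1/accel of k-space."""
    return (rng.random(shape) < 1.0 / accel).astype(float)

def selective_losses(synth_img, acquired_kspace, mask):
    """Compare a synthesized image to an undersampled acquisition.

    Only k-space locations where mask == 1 contribute to either term.
    Returns (k-space L1, image-domain L1); both are illustrative definitions.
    """
    # Bring the synthesized image into k-space and keep only acquired samples.
    synth_k = np.fft.fft2(synth_img, norm="ortho") * mask
    ref_k = acquired_kspace * mask

    # k-space L1 error averaged over the acquired coefficients.
    n_acquired = max(int(mask.sum()), 1)
    k_loss = np.abs(synth_k - ref_k).sum() / n_acquired

    # Image-domain L1 error between the two zero-filled reconstructions.
    synth_zf = np.fft.ifft2(synth_k, norm="ortho")
    ref_zf = np.fft.ifft2(ref_k, norm="ortho")
    img_loss = np.abs(synth_zf - ref_zf).mean()
    return k_loss, img_loss

rng = np.random.default_rng(0)
target = rng.random((8, 8))                 # stand-in for a target-contrast image
mask = random_mask(target.shape, accel=2, rng=rng)
acquired = np.fft.fft2(target, norm="ortho") * mask

# Perturb the synthesis only at non-acquired k-space locations.
noise_k = (1.0 - mask) * (rng.random(target.shape) + 1j * rng.random(target.shape))
perturbed = target + np.fft.ifft2(noise_k, norm="ortho")

k_same, img_same = selective_losses(perturbed, acquired, mask)
k_diff, img_diff = selective_losses(rng.random((8, 8)), acquired, mask)
```

In this toy setup, `perturbed` differs from `target` only in unmeasured coefficients, so both loss terms stay at numerical zero, whereas an unrelated image yields a positive loss.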

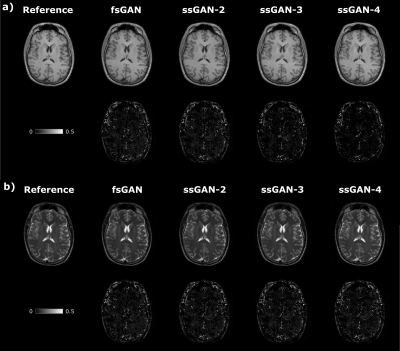

Fig. 2: Representative results from the ssGAN and fsGAN models are displayed for the synthesis tasks in the IXI dataset with fully-sampled source images (Rsource=1): a) T1-weighted image synthesis and b) T2-weighted image synthesis. Synthesized target-contrast images are displayed for ssGAN-2 (Rtarget=2), ssGAN-3 (Rtarget=3), ssGAN-4 (Rtarget=4), and fsGAN together with fully-sampled reference images.