Xiaodong Ma¹, Kamil Uğurbil¹, and Xiaoping Wu¹

¹Center for Magnetic Resonance Research, Radiology, Medical School, University of Minnesota, Minneapolis, MN, United States

We propose a novel deep-learning framework, dubbed deepPTx, that trains a deep neural network to predict parallel-transmission (pTx)-style images directly from images acquired with single transmission (sTx). We demonstrate its feasibility using high-resolution, whole-brain diffusion MRI at 7 Tesla.
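The prediction task described above can be written compactly. This is our own notation, offered as a sketch rather than the authors' formulation: the abstract does not state the loss function, so $\mathcal{L}$ is left generic (a pixel-wise $\ell_1$ or $\ell_2$ error would be one possible choice), and $f_\theta$ stands for whichever network is used (a ResNet in the figures). Here $x_i^{\mathrm{sTx}}$ is an image acquired with single transmission, $y_i^{\mathrm{pTx}}$ is the matching image acquired with pTx, and $N$ is the number of paired training images.

```latex
\hat{\theta} = \arg\min_{\theta} \sum_{i=1}^{N}
  \mathcal{L}\!\left( f_{\theta}\!\left(x_i^{\mathrm{sTx}}\right),\; y_i^{\mathrm{pTx}} \right),
\qquad
\hat{y}^{\mathrm{pTx}} = f_{\hat{\theta}}\!\left(x^{\mathrm{sTx}}\right)
```

At test time only the sTx image is needed; the trained network supplies the pTx-style estimate $\hat{y}^{\mathrm{pTx}}$.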

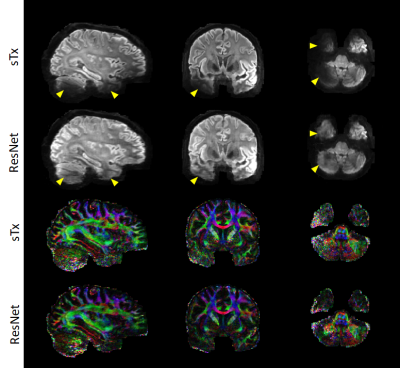

Fig. 5 Testing of the final model on a new subject randomly chosen from the 7T Human Connectome Project (HCP) database. Shown are mean diffusion-weighted images at b = 1000 s/mm² (averaged across all diffusion directions) and color-coded fractional anisotropy (FA) maps in sagittal, coronal and axial views. The final model with tuned hyperparameters was trained on our entire dataset of 5 subjects. Note that the final model (ResNet) substantially enhanced image quality by restoring the signal dropout observed in the lower brain regions (marked by yellow arrowheads), producing color-coded FA maps with reduced noise levels in those challenging regions.
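The abstract shows mean diffusion-weighted images and color-coded FA maps but does not describe how they were computed. The sketch below applies the standard textbook definitions per voxel, assuming the diffusion-tensor eigenvalues and principal eigenvector have already been obtained from a tensor fit (not shown). The function names are hypothetical, and this is not the authors' processing pipeline.

```python
import math
from typing import Sequence, Tuple


def mean_dwi(signals: Sequence[float]) -> float:
    """Average one voxel's diffusion-weighted signals across all gradient directions."""
    if not signals:
        raise ValueError("need at least one diffusion direction")
    return sum(signals) / len(signals)


def fractional_anisotropy(l1: float, l2: float, l3: float) -> float:
    """Standard FA from the three tensor eigenvalues (0 = isotropic, 1 = maximally anisotropic)."""
    num_sq = (l1 - l2) ** 2 + (l2 - l3) ** 2 + (l3 - l1) ** 2
    den_sq = l1 ** 2 + l2 ** 2 + l3 ** 2
    if den_sq == 0.0:
        return 0.0  # zero tensor, e.g. a background voxel
    # FA = sqrt(1/2) * sqrt(num_sq) / sqrt(den_sq), computed under one square root
    return math.sqrt(0.5 * num_sq / den_sq)


def color_fa(fa: float, v1: Tuple[float, float, float]) -> Tuple[float, ...]:
    """RGB color-coded FA: FA times the absolute, normalized principal-eigenvector components.

    Common convention: red = left-right (x), green = anterior-posterior (y),
    blue = superior-inferior (z).
    """
    norm = math.sqrt(sum(c * c for c in v1))
    if norm == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(fa * abs(c) / norm for c in v1)


# Example voxel with a strongly elongated tensor (eigenvalues in mm^2/s)
fa = fractional_anisotropy(1.7e-3, 0.3e-3, 0.3e-3)
print(fa, color_fa(fa, (0.0, 0.0, 1.0)))  # principal direction along z -> blue
```

Weighting the orientation color by FA is what makes noise in the lower brain visible in these maps: where signal dropout corrupts the tensor fit, the colors become noisy, which is the effect the caption reports as reduced after deepPTx.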

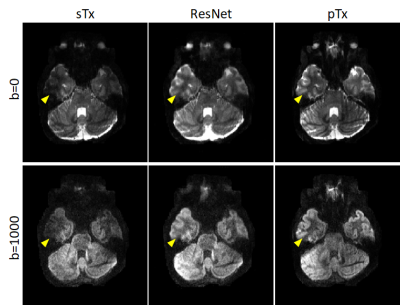

Fig. 3 Example b = 0 and b = 1000 s/mm² diffusion images (one diffusion direction) for single transmission (sTx) vs. deep-learned pTx (ResNet), with acquired pTx images as the reference. Shown is a representative axial slice in the lower brain from one subject; in this case, the model with tuned hyperparameters was trained on data from the other 4 subjects. Note that ResNet substantially improved image quality, effectively recovering the signal dropout observed in the lower temporal lobe (marked by yellow arrowheads) and producing images comparable to those obtained with pTx.
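Fig. 3 evaluates a model trained on 4 subjects and tested on the held-out fifth. A leave-one-subject-out split generalizes this to every subject. The abstract only confirms the held-out evaluation for the subject shown, so looping over all folds is our assumption, and the subject IDs below are hypothetical. Splitting by subject rather than by slice keeps every image of the test subject out of training.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_subject_out(subjects: Sequence[str]) -> Iterator[Tuple[List[str], str]]:
    """Yield (training subjects, held-out test subject) for each fold."""
    for i, held_out in enumerate(subjects):
        train = [s for j, s in enumerate(subjects) if j != i]
        yield train, held_out


# Hypothetical IDs for the 5 subjects in the in-house dataset
subjects = ["sub01", "sub02", "sub03", "sub04", "sub05"]
folds = list(leave_one_subject_out(subjects))
for train, test in folds:
    print(f"train on {train}, evaluate on {test}")

# The final model of Fig. 5 is instead trained on all 5 subjects
# and evaluated on an external subject from the 7T HCP database.
final_training_set = list(subjects)
```

The same per-subject grouping is what a library utility such as scikit-learn's `LeaveOneGroupOut` provides when each image is labeled with its subject ID.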