Rui Zeng1, Jinglei Lv2, He Wang3, Luping Zhou2, Michael Barnett2, Fernando Calamante2, and Chenyu Wang2

1School of Biomedical Engineering, The University of Sydney, Sydney, Australia, 2The University of Sydney, Sydney, Australia, 3Fudan University, Shanghai, China

A deep learning model called FODSRM was developed for fiber orientation distribution (FOD) super-resolution. It enhances single-shell FODs computed from clinical-quality dMRI data to obtain super-resolved FODs of the quality that would have been produced by advanced research scanners.

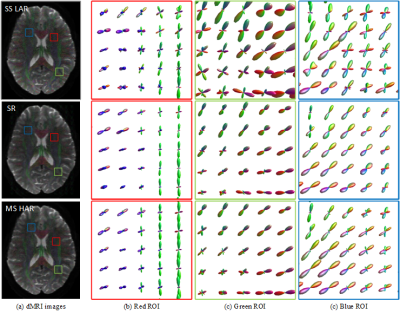

Figure 1. An overview of the pipeline for applying FODSRM in connectome reconstruction. A given single-shell low-angular-resolution (LAR) dMRI image is first processed by the single-shell 3-tissue CSD (SS3T-CSD) method to generate a single-shell LAR FOD image, which FODSRM then takes as input to generate the super-resolved FOD image. The super-resolved FOD image is used for reliable connectome reconstruction.
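The order of the stages in Figure 1 can be sketched in a few lines of Python. This is only an illustrative sketch: the `Volume` class, the `_stage` helper, and the function names `ss3t_csd`, `fodsrm`, and `build_connectome` are hypothetical placeholders introduced here, not the authors' implementation or any library's API. Each stage only checks that it receives the kind of image the caption says it should and relabels its output; no actual image processing is performed.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Volume:
    """Placeholder for an image volume; `kind` names what the data represents."""
    kind: str
    history: List[str] = field(default_factory=list)


def _stage(name: str, expects: str, produces: str) -> Callable[[Volume], Volume]:
    """Build a hypothetical stage that validates its input kind and relabels the output."""
    def run(vol: Volume) -> Volume:
        if vol.kind != expects:
            raise ValueError(f"{name} expects {expects!r}, got {vol.kind!r}")
        return Volume(kind=produces, history=vol.history + [name])
    return run


# Stage names mirror Figure 1; the bodies are stand-ins, not real implementations.
ss3t_csd = _stage("SS3T-CSD", "single-shell LAR dMRI", "single-shell LAR FOD")
fodsrm = _stage("FODSRM", "single-shell LAR FOD", "super-resolved FOD")
build_connectome = _stage("connectome reconstruction", "super-resolved FOD", "connectome")


def reconstruct_connectome(dmri: Volume) -> Volume:
    """Chain the three stages in the order shown in Figure 1."""
    return build_connectome(fodsrm(ss3t_csd(dmri)))


result = reconstruct_connectome(Volume(kind="single-shell LAR dMRI"))
print(result.kind, result.history)
```

The kind check makes the dependency explicit: in this sketch, passing the raw dMRI image directly to `fodsrm` raises an error, because the model operates on FOD images rather than on diffusion-weighted data.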

Figure 2. Illustration of FOD super-resolution. Our model generates high-angular-resolution (HAR) FOD images by enhancing the corresponding low-angular-resolution (LAR) FOD images. The LAR FOD images are obtained with SS3T-CSD from single-shell LAR data (typically around 32 gradient directions), which are extensively used for clinical purposes. The recovered HAR FOD images are of comparable quality to those from MSMT-CSD. Three representative zoomed regions (red, green and blue) are shown.