Oriana Vanesa Arsenov¹, Karin Shmueli¹, and Anita Karsa¹

¹Medical Physics and Biomedical Engineering, University College London, London, United Kingdom

We trained a deep-learning network for background field removal, using random spatial deformations both to simulate realistic fields (Model 1) and to augment field maps measured in vivo (Model 2). Model 1 predicted local fields more accurately in synthetic images than in in-vivo images, whereas Model 2 performed better on in-vivo images.
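The abstract does not say how the random deformations were generated. As an illustration only, the sketch below assumes a smooth random displacement field (uniform random offsets on a coarse grid, linearly upsampled to full resolution) that is applied to a 3D field map by trilinear resampling. The function names and the `max_disp` and `coarse` parameters are hypothetical and are not taken from the study.

```python
import numpy as np


def random_smooth_displacement(shape, max_disp=3.0, coarse=4, rng=None):
    """Hypothetical helper: smooth random displacement field in voxels.

    Offsets are drawn uniformly in [-max_disp, max_disp] on a coarse grid
    with `coarse` points per axis, then linearly upsampled to `shape`.
    Returns an array of shape (3, *shape): one displacement per axis.
    """
    rng = np.random.default_rng(rng)
    src = np.linspace(0.0, coarse - 1, coarse)
    components = []
    for _ in range(3):
        grid = rng.uniform(-max_disp, max_disp, size=(coarse,) * 3)
        # Separable linear interpolation, one axis at a time.
        for axis, n in enumerate(shape):
            dst = np.linspace(0.0, coarse - 1, n)
            grid = np.apply_along_axis(
                lambda v, d=dst: np.interp(d, src, v), axis, grid
            )
        components.append(grid)
    return np.stack(components)


def warp_trilinear(volume, disp):
    """Resample `volume` at x + disp(x) with trilinear interpolation.

    Sample positions that fall outside the volume are clamped to the edge.
    """
    shape = np.array(volume.shape).reshape(3, 1, 1, 1)
    coords = np.clip(np.indices(volume.shape, dtype=float) + disp, 0, shape - 1)
    lo = np.floor(coords).astype(int)
    hi = np.minimum(lo + 1, shape - 1)
    frac = coords - lo
    out = np.zeros(volume.shape, dtype=float)
    # Weighted sum over the 8 corners of the enclosing voxel cell.
    for cx in (0, 1):
        for cy in (0, 1):
            for cz in (0, 1):
                corner_bits = (cx, cy, cz)
                corner = tuple(
                    (hi if c else lo)[axis] for axis, c in enumerate(corner_bits)
                )
                weight = np.ones(volume.shape)
                for axis, c in enumerate(corner_bits):
                    weight *= frac[axis] if c else 1.0 - frac[axis]
                out += weight * volume[corner]
    return out


rng = np.random.default_rng(0)
field = rng.standard_normal((32, 32, 16))  # stand-in for a measured field map
disp = random_smooth_displacement(field.shape, max_disp=2.0, rng=rng)
augmented = warp_trilinear(field, disp)
```

When augmenting paired maps (for example a total field and its local field), the same `disp` would be applied to every map of the pair so that they stay spatially aligned. Trilinear rather than nearest-neighbour resampling is used here to avoid introducing blocky artefacts into smoothly varying field maps.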

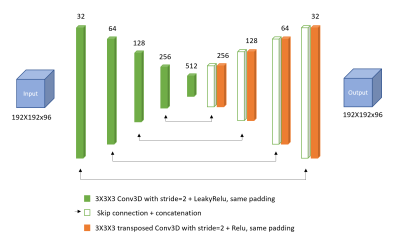

Figure 1. Architecture of the 3D U-net used for background field removal (BGFR). The convolutional neural network (CNN) outputs local field maps ($B_{int}$) of the same size (192 × 192 × 96 voxels) as the input total field maps ($B_{tot}$). The number of feature maps is shown above each layer of the network.
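A U-net returns output maps the same size as its input when its convolutions are padded to preserve size and every spatial dimension divides evenly by 2 at each pooling step. The short check below works through this for the 192 × 192 × 96 input. The pooling depth of 4 and the helper name `unet_level_shapes` are assumptions for illustration; the actual number of levels is given only in the figure.

```python
def unet_level_shapes(shape, depth):
    """Spatial size at each encoder level of a U-net with `depth` 2x poolings.

    Assumes size-preserving ("same") convolutions, 2x pooling on the way
    down and 2x upsampling on the way up, so the decoder mirrors these sizes.
    """
    factor = 2 ** depth
    for n in shape:
        if n % factor:
            raise ValueError(f"dimension {n} is not divisible by 2**{depth} = {factor}")
    return [tuple(n // 2 ** level for n in shape) for level in range(depth + 1)]


levels = unet_level_shapes((192, 192, 96), depth=4)  # depth=4 is hypothetical
for level, size in enumerate(levels):
    print(level, size)
# 0 (192, 192, 96)
# 1 (96, 96, 48)
# 2 (48, 48, 24)
# 3 (24, 24, 12)
# 4 (12, 12, 6)

# Upsampling the bottleneck by 2 at each decoder level restores the input size.
restored = tuple(n * 2 ** (len(levels) - 1) for n in levels[-1])
print("restored:", restored)  # restored: (192, 192, 96)
```

Because 96 = 3 × 2⁵, at most five pooling steps fit this input; a sixth would require a 1.5-voxel dimension, and the helper raises `ValueError` instead.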

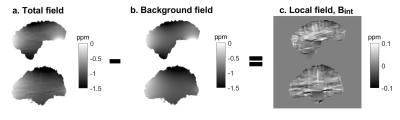

Figure 2. A set of training field maps inside the brain, simulated in a head-and-neck phantom with random deformations: sagittal and coronal slices of the total ($B_{tot}$, a), background ($B_{ext}$, b), and local ($B_{int}$, c) field maps. The local field is the total field minus the background field: $B_{int} = B_{tot} - B_{ext}$.
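The subtraction in this caption is what links the network's input (total field) to its target (local field). A minimal sketch, assuming the three maps are NumPy arrays on the same grid and that fields are defined only inside a brain mask; the function name `local_field` and the toy values below are hypothetical, not data from the study.

```python
import numpy as np


def local_field(b_tot, b_ext, brain_mask):
    """Local field B_int = B_tot - B_ext inside the brain mask, zero elsewhere."""
    return np.where(brain_mask, b_tot - b_ext, 0.0)


# Toy example on a small grid (values are illustrative only).
shape = (8, 8, 4)
x = np.indices(shape, dtype=float)[0]
b_ext = 0.1 * x                      # smooth background gradient
b_int = np.zeros(shape)
b_int[3:5, 3:5, 1:3] = 0.02          # small local perturbation
b_tot = b_ext + b_int                # total field = background + local
mask = np.zeros(shape, dtype=bool)
mask[1:7, 1:7, :] = True             # stand-in brain mask

recovered = local_field(b_tot, b_ext, mask)
print(np.allclose(recovered, np.where(mask, b_int, 0.0)))  # True
```

This subtraction is only possible for simulated data, where the background field is known. In vivo, the background field is unknown, so the network has to estimate the local field from the total field alone.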