¹Stanford University, Palo Alto, CA, United States

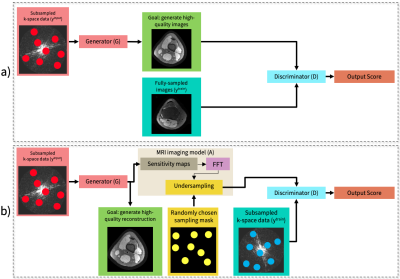

Figure 1. (a) Example framework overview in a supervised setting, using a conditional GAN when fully sampled datasets are available.

(b) Overview of our proposed framework in an unsupervised setting. A sensing matrix composed of coil sensitivity maps, an FFT, and a randomized undersampling mask is applied to the generated image to simulate the imaging process. The discriminator takes simulated and observed measurements as inputs and tries to differentiate between them. The generator’s loss combines the discriminator’s output with terms that reduce spatial and temporal variation.
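The sensing step described in (b) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `simulate_measurements` and `random_mask`, the array shapes, the centered orthonormal FFT convention, and the uniform random mask pattern are all hypothetical choices made for the example.

```python
import numpy as np


def simulate_measurements(image, sens_maps, mask):
    """Simulate multi-coil undersampled k-space from one 2D image.

    image:     (ny, nx) complex image (e.g. a generator output)
    sens_maps: (ncoils, ny, nx) complex coil sensitivity maps
    mask:      (ny, nx) binary sampling mask (1 = sampled)
    """
    # Weight the image by each coil's sensitivity map.
    coil_images = sens_maps * image[None, :, :]
    # Centered, orthonormal 2D FFT over the two spatial axes (assumed convention).
    kspace = np.fft.fftshift(
        np.fft.fft2(np.fft.ifftshift(coil_images, axes=(-2, -1)), norm="ortho"),
        axes=(-2, -1),
    )
    # Zero out the k-space locations that were not acquired.
    return kspace * mask[None, :, :]


def random_mask(shape, keep_fraction, rng):
    """Uniform random mask; an assumed stand-in for the randomized mask."""
    return (rng.random(shape) < keep_fraction).astype(np.float32)


# Toy example with random data in place of real images and coil maps.
rng = np.random.default_rng(0)
ny, nx, ncoils = 8, 8, 4
image = rng.standard_normal((ny, nx)) + 1j * rng.standard_normal((ny, nx))
sens_maps = (rng.standard_normal((ncoils, ny, nx))
             + 1j * rng.standard_normal((ncoils, ny, nx)))
mask = random_mask((ny, nx), keep_fraction=0.25, rng=rng)
measurements = simulate_measurements(image, sens_maps, mask)
```

In a setup like the one in the figure, the discriminator would compare `measurements` against acquired k-space data, so drawing a fresh mask for each generated image keeps the simulated measurements from being tied to a single sampling pattern.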

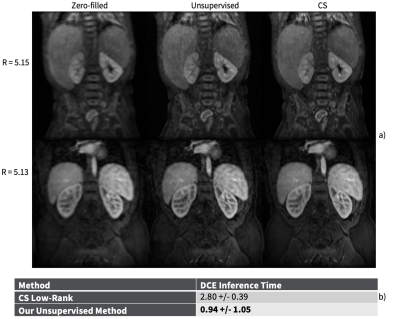

Figure 5. (a) Representative DCE images. The leftmost column is the input zero-filled reconstruction, the middle column is our generator’s reconstruction, and the rightmost column is the CS reconstruction. The generator improves on the quality of the input images by recovering sharpness and adding more structure.

(b) Comparison of DCE inference time per three-dimensional DCE volume (2D + time) between CS low-rank and our unsupervised GAN. Our method is approximately 2.98 times faster.