Beliz Gunel1, Morteza Mardani1, Akshay Chaudhari2, Shreyas Vasanawala2, and John Pauly1

1Electrical Engineering, Stanford University, Stanford, CA, United States, 2Radiology, Stanford University, Stanford, CA, United States


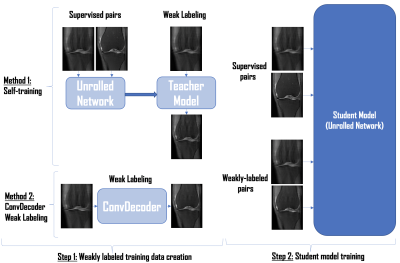

We use untrained networks to construct weak labels from undersampled MR scans at training time. Training an unrolled network on a limited number of supervised pairs together with these weakly supervised pairs yields strong reconstruction performance and fast inference, improving over supervised and self-training baselines.

Figure 1: Diagram of our two-step weakly supervised framework. Step 1 creates weakly labeled training data using either Self-training or ConvDecoder Weak Labeling. In Self-training, a "teacher model" trained on fully sampled data runs inference on undersampled data to create weak labels. Alternatively, ConvDecoder creates weak labels directly from undersampled data. Step 2 trains a "student model", an unrolled neural network, on both supervised and weakly labeled pairs; the student model is then used at inference time.
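The data flow in Figure 1 can be sketched as follows. This is only a toy illustration of how supervised and weakly labeled pairs might be assembled before training the student model; it is not the authors' implementation. The function names (`undersample`, `placeholder_weak_labeler`, `build_training_pairs`), the random line-skipping mask, and the 2D 32x32 arrays standing in for MR volumes are all assumptions. In particular, the weak labeler here is a zero-filled inverse FFT standing in for the trained teacher model or ConvDecoder, neither of which is reproduced.

```python
import numpy as np


def undersample(image, accel=4, seed=0):
    """Retrospectively undersample k-space, keeping roughly 1/accel of the rows.

    The random row mask is an assumption made for illustration only.
    """
    rng = np.random.default_rng(seed)
    kspace = np.fft.fft2(image)
    keep = rng.random(image.shape[0]) < 1.0 / accel
    keep[0] = True  # always keep the row that contains the DC component
    return kspace * keep[:, None]


def placeholder_weak_labeler(kspace_us):
    """Hypothetical stand-in for Step 1 (teacher model or ConvDecoder).

    A zero-filled inverse FFT is used only so the sketch runs end to end.
    """
    return np.abs(np.fft.ifft2(kspace_us))


def build_training_pairs(supervised_images, unlabeled_kspace, weak_labeler, accel=4):
    """Assemble (input k-space, target image, tag) triples for Step 2."""
    pairs = []
    # Supervised pairs: fully sampled images are available as targets.
    for i, img in enumerate(supervised_images):
        pairs.append((undersample(img, accel, seed=i), img, "supervised"))
    # Weak pairs: only undersampled k-space exists, so the target is a weak label.
    for ksp in unlabeled_kspace:
        pairs.append((ksp, weak_labeler(ksp), "weak"))
    return pairs


# Toy data mirroring the 1 supervised + 5 weakly labeled setting at 4x acceleration.
rng = np.random.default_rng(0)
supervised_images = [rng.random((32, 32))]
unlabeled_kspace = [undersample(rng.random((32, 32)), seed=100 + j) for j in range(5)]

pairs = build_training_pairs(supervised_images, unlabeled_kspace, placeholder_weak_labeler)
print([tag for _, _, tag in pairs])
```

In a real pipeline the `weak_labeler` argument would be replaced by inference with the trained teacher model or by a ConvDecoder fit to each undersampled scan, and the resulting pairs would be fed to the unrolled student network.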

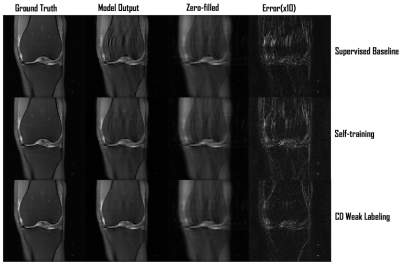

Figure 5: Image quality comparison between Supervised Baseline, Self-training, and ConvDecoder (CD) Weak Labeling. All methods use 1 supervised and 5 weakly labeled volumes during training with 4x acceleration. CD Weak Labeling clearly reduces background noise and aliasing artifacts relative to both Supervised Baseline and Self-training.