Zilin Deng1,2, Burhaneddin Yaman1,2, Chi Zhang1,2, Steen Moeller2, and Mehmet Akçakaya1,2

1University of Minnesota, Minneapolis, MN, United States, 2Center for Magnetic Resonance Research, Minneapolis, MN, United States

Training of 3D unrolled networks for volumetric MRI reconstruction with small databases and limited GPU resources may be facilitated by small-slab processing

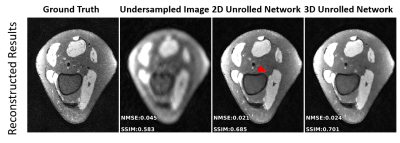

Figure 3. A representative test slice from reconstructions using 2D and 3D unrolled networks. 2D processing suffers from residual artifacts (red arrows), which are suppressed with 3D processing.

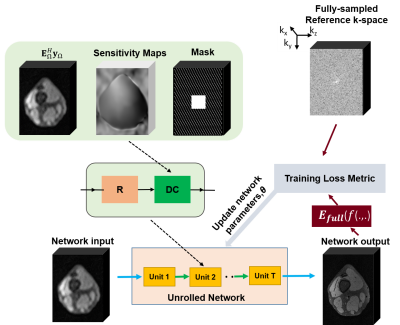

Figure 2. Schematic of supervised training on small slabs generated from the volumetric datasets. Unrolled neural networks comprise a series of data consistency and regularizer units, arising from conventional iterative optimization algorithms. In this work, we use a 3D unrolled network. A ResNet architecture with 5 residual blocks is used for implicit regularization; each block consists of 2 convolution layers, the first followed by a ReLU and the second followed by a scaling layer. All layers of this ResNet use 3×3×3 kernels and 64 channels.
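To give a rough sense of the regularizer's size, the following is a minimal sketch that counts the learnable parameters in the residual blocks described in the Figure 2 caption. It rests on several assumptions that are not stated in the caption: each convolution has a bias term, the scaling layer is a fixed constant with no learnable parameters, and any input or output convolutions outside the 5 residual blocks are left out. The function names are hypothetical.

```python
def conv3d_params(in_ch, out_ch, kernel=(3, 3, 3), bias=True):
    """Learnable parameters in one 3D convolution layer."""
    kd, kh, kw = kernel
    weights = in_ch * out_ch * kd * kh * kw
    # Assumption: one bias per output channel (not stated in the caption).
    return weights + (out_ch if bias else 0)


def residual_blocks_params(num_blocks=5, channels=64, kernel=(3, 3, 3), bias=True):
    """Learnable parameters in the residual blocks only.

    Each block holds 2 convolutions (conv -> ReLU -> conv -> scaling).
    Assumption: ReLU and the scaling layer add no learnable parameters.
    """
    per_block = 2 * conv3d_params(channels, channels, kernel, bias)
    return num_blocks * per_block


if __name__ == "__main__":
    print("per convolution:", conv3d_params(64, 64))
    print("5 residual blocks:", residual_blocks_params())
```

Under these assumptions the residual blocks hold roughly 1.1 million parameters. The memory cost during training is dominated by the stored activations of the unrolled network, not by the weights, which is consistent with the motivation for processing small slabs.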