Elisa Moya-Sáez (1, 2), Rodrigo de Luis-García (1), and Carlos Alberola-López (1)

(1) University of Valladolid, Valladolid, Spain; (2) Fundación Científica AECC, Valladolid, Spain

- A self-supervised deep learning approach to compute T1, T2, and PD maps from routine clinical sequences.

- Realistic weighted images of any contrast can be synthesized from the parametric maps.

- The proposed self-supervised CNN achieves significant improvements over a standard CNN in most of the synthesized modalities.
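Synthesizing weighted images from parametric maps amounts to evaluating an MR signal equation pixel by pixel with chosen sequence parameters. The sketch below is a minimal illustration under stated assumptions. The equations actually used in this work are given in Figure 1b and the sequence parameters in Table 1, and neither is reproduced here. Instead, the sketch uses the textbook spin-echo approximation m(x) = PD(x)(1 - e^(-TR/T1(x))) e^(-TE/T2(x)) and a standard inversion-recovery (FLAIR) magnitude signal. The function names, tissue values, and TR/TE/TI values are hypothetical, and T2*w is omitted because it would require a T2* map.

```python
import numpy as np

def spin_echo(pd, t1, t2, tr, te):
    """Textbook spin-echo approximation (assumption, not the authors' exact model):
    m(x) = PD(x) * (1 - exp(-TR/T1(x))) * exp(-TE/T2(x)).
    Ignores flip-angle, coil, and noise effects."""
    return pd * (1.0 - np.exp(-tr / t1)) * np.exp(-te / t2)

def flair(pd, t1, t2, tr, te, ti):
    """Textbook inversion-recovery magnitude signal (assumption):
    m(x) = PD(x) * |1 - 2 exp(-TI/T1(x)) + exp(-TR/T1(x))| * exp(-TE/T2(x))."""
    longitudinal = 1.0 - 2.0 * np.exp(-ti / t1) + np.exp(-tr / t1)
    return pd * np.abs(longitudinal) * np.exp(-te / t2)

# Hypothetical two-pixel "maps" (times in ms, rough 1.5 T values):
# index 0 ~ white matter, index 1 ~ CSF.
pd = np.array([0.7, 1.0])
t1 = np.array([600.0, 4000.0])
t2 = np.array([80.0, 2000.0])

# Hypothetical sequence parameters; any TR/TE/TI can be plugged in.
t1w = spin_echo(pd, t1, t2, tr=500.0, te=15.0)
t2w = spin_echo(pd, t1, t2, tr=4000.0, te=100.0)

# Choose TI so that the CSF longitudinal term vanishes (CSF nulling).
tr_flair = 9000.0
ti = t1[1] * np.log(2.0 / (1.0 + np.exp(-tr_flair / t1[1])))
flair_img = flair(pd, t1, t2, tr=tr_flair, te=100.0, ti=ti)
```

With these toy values, CSF is darker than white matter in the T1w image, brighter in the T2w image, and suppressed in the FLAIR image. Because each image is a closed-form function of the maps, changing TR, TE, or TI yields a new contrast without any new acquisition.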

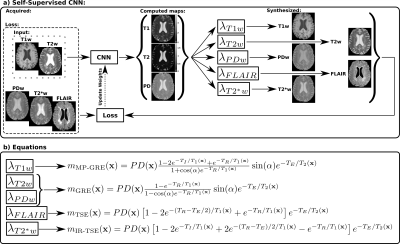

Figure 1: Overview of the proposed approach. a) Pipeline for training and testing the self-supervised convolutional neural network (CNN). b) Equations of the lambda layers used to synthesize the T1w, T2w, PDw, T2*w, and FLAIR images from the computed parametric maps, using the sequence parameters listed in Table 1; m(x) is the signal intensity of the corresponding weighted image at pixel x. Note that the CNN is pre-trained exclusively with synthetic data, as described in Ref. 7, and that the PDw, T2*w, and FLAIR images are used only to compute the loss function; they are not inputs to the CNN.
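The self-supervision described in Figure 1 compares images synthesized by the lambda layers against the acquired images. This includes modalities that never enter the CNN. The sketch below is a minimal, hypothetical illustration of such a loss. The abstract does not state the loss form, so the per-modality mean squared error, the optional `weights` argument, and the name `self_supervised_loss` are all assumptions. It also assumes the CNN inputs are the T1w and T2w images. In an actual training setup, this loss would be written in a deep learning framework and backpropagated through the lambda layers into the CNN. The NumPy version below only evaluates the loss.

```python
import numpy as np

def self_supervised_loss(synthesized, acquired, weights=None):
    """Hypothetical loss: weighted sum of per-modality mean squared errors.

    synthesized, acquired: dicts mapping a modality name (e.g. "FLAIR") to an
    image array; every acquired modality contributes, whether or not it was
    fed to the CNN. weights: optional per-modality weights (default 1.0).
    """
    weights = weights or {}
    total = 0.0
    for name, target in acquired.items():
        diff = synthesized[name] - target
        total += weights.get(name, 1.0) * float(np.mean(diff ** 2))
    return total

rng = np.random.default_rng(0)
modalities = ["T1w", "T2w", "PDw", "T2*w", "FLAIR"]
acquired = {m: rng.random((4, 4)) for m in modalities}

# Assumed CNN inputs: only T1w and T2w; the others enter through the loss only.
cnn_inputs = {m: acquired[m] for m in ("T1w", "T2w")}

# A perfect synthesis gives zero loss; a uniform 0.1 offset in all five
# modalities gives 5 * 0.1**2 = 0.05 with unit weights.
perfect = {m: acquired[m].copy() for m in modalities}
shifted = {m: acquired[m] + 0.1 for m in modalities}
loss_perfect = self_supervised_loss(perfect, acquired)
loss_shifted = self_supervised_loss(shifted, acquired)
```

Because the PDw, T2*w, and FLAIR terms depend on the predicted maps only through the signal equations, they constrain the T1, T2, and PD estimates even though those images are never CNN inputs.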

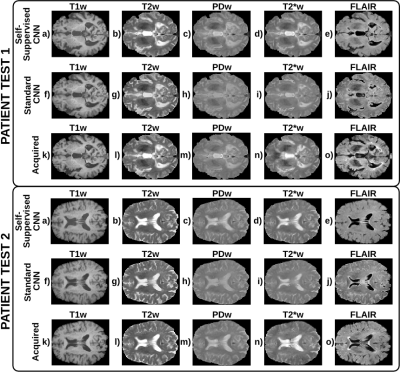

Figure 3: A representative axial slice of the synthesized and the corresponding acquired weighted images for each test patient. a-e) T1w, T2w, PDw, T2*w, and FLAIR images synthesized by the self-supervised CNN. f-j) Corresponding images synthesized by the standard CNN. k-o) Corresponding acquired images. The self-supervised CNN preserves structural information and contrast better than the standard CNN, yielding higher-quality synthesis. The improvement is most apparent for the PDw, T2*w, and FLAIR images, which had the lowest synthesis quality with the standard CNN; see, for example, the CSF in these images.