Soumick Chatterjee1,2,3, Arnab Das3, Chirag Mandal3, Budhaditya Mukhopadhyay3, Manish Vipinraj3, Aniruddh Shukla3, Oliver Speck1,4,5,6, and Andreas Nürnberger2,3,6

1Department of Biomedical Magnetic Resonance, Otto von Guericke University, Magdeburg, Germany, 2Data and Knowledge Engineering Group, Otto von Guericke University, Magdeburg, Germany, 3Faculty of Computer Science, Otto von Guericke University, Magdeburg, Germany, 4German Centre for Neurodegenerative Diseases, Magdeburg, Germany, 5Leibniz Institute for Neurobiology, Magdeburg, Germany, 6Center for Behavioral Brain Sciences, Magdeburg, Germany

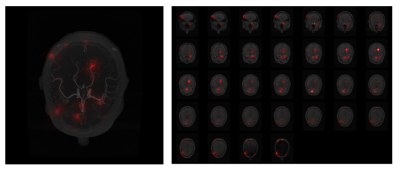

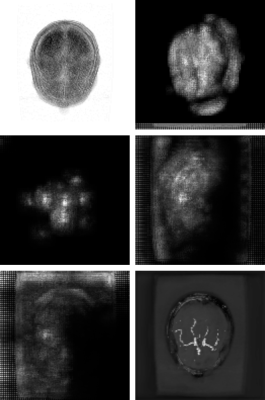

Preliminary studies based on the DS6 model indicate that our approaches are able to showcase the focus areas of the network. Furthermore, the method helps to identify the individual focus areas of each network layer separately.