Philip Müller¹, Vladimir Golkov¹, Valentina Tomassini², and Daniel Cremers¹

¹Computer Vision Group, Technical University of Munich, Munich, Germany; ²D’Annunzio University, Chieti–Pescara, Italy

We propose neural networks for diffusion MRI (dMRI) data that are equivariant under rotations and translations, thereby generalizing prior work to the 6D space of dMRI scans. Our model outperforms non-rotation-equivariant models by a notable margin and requires fewer training samples.
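To make the idea of a joint rotation concrete, the NumPy sketch below treats a dMRI scan as a signal S(x, q) sampled at spatial positions x and q-space directions q, assumed here to be the two 3D factors of the 6D space. A roto-translation acts as x to Rx + t and q to Rq (q-vectors rotate but do not translate). The function `toy_equivariant_layer` is a hypothetical illustration, not the architecture proposed in this work: its kernel depends only on spatial distances, angles between q-vectors, and the projection of spatial offsets onto q-directions, so transforming all coordinates leaves the output value at every (transformed) sample unchanged.

```python
import numpy as np


def random_rotation(rng):
    """Random proper rotation matrix (det = +1) via QR of a Gaussian matrix."""
    a = rng.normal(size=(3, 3))
    q, r = np.linalg.qr(a)
    q = q * np.sign(np.diag(r))  # fix column signs so the result is uniformly distributed
    if np.linalg.det(q) < 0:     # turn a reflection into a rotation
        q[:, 0] = -q[:, 0]
    return q


def toy_equivariant_layer(x, q, signal, sigma=1.0):
    """Hypothetical 6D convolution on a point-sampled dMRI signal.

    x:      (N, 3) spatial sample positions
    q:      (M, 3) unit q-space directions
    signal: (N, M) diffusion signal S(x_k, q_l)
    The kernel uses only roto-translation-invariant quantities.
    """
    dx = x[:, None, :] - x[None, :, :]         # (N, N, 3) offsets x_i - x_k
    dist2 = np.sum(dx**2, axis=-1)             # (N, N) squared distances
    qq = q @ q.T                               # (M, M) cosines between q-vectors
    proj = np.einsum("ikd,ld->ikl", dx, q)     # (N, N, M) offset projected onto q_l
    spatial = np.exp(-dist2 / (2 * sigma**2))  # spatial weighting
    angular = np.exp(qq)                       # angular weighting in q-space
    coupling = np.exp(-proj**2)                # couples spatial and q-space orientation
    # out[i, j] = sum_{k, l} spatial[i,k] * angular[j,l] * coupling[i,k,l] * S[k,l]
    return np.einsum("ik,jl,ikl,kl->ij", spatial, angular, coupling, signal)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    N, M = 20, 12
    x = rng.normal(size=(N, 3))
    q = rng.normal(size=(M, 3))
    q /= np.linalg.norm(q, axis=1, keepdims=True)
    S = rng.normal(size=(N, M))
    R, t = random_rotation(rng), rng.normal(size=3)
    out = toy_equivariant_layer(x, q, S)
    # rotate positions and q-vectors jointly, translate positions only
    out_rt = toy_equivariant_layer(x @ R.T + t, q @ R.T, S)
    print(np.allclose(out, out_rt))  # True
```

Because each output value stays attached to its transformed sample point, identical values before and after the transformation are exactly the equivariance property in this point-sampled representation. The projection term is what ties the two 3D spaces together: rotating only the q-vectors, and not the positions, generally changes the output.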

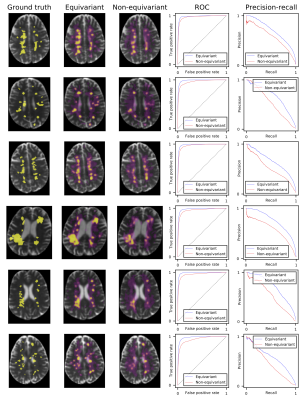

Segmentation of multiple-sclerosis lesions in six scans from the validation set. (a) Ground truth of one example slice per scan, (b) predictions for that slice using our equivariant model, (c) predictions for that slice using the non-rotation-equivariant reference model with 3D convolutional layers, (e) ROC curves of all models on the full scans, (f) precision-recall curves of all models on the full scans. While our equivariant model is highly confident (yellow areas) at most positions, the non-rotation-equivariant model has large areas of high uncertainty (purple areas).
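Panels (e) and (f) summarize voxelwise performance over whole scans. As a hedged illustration only (the evaluation code behind these curves is not given here), ROC and precision-recall points can be obtained by sweeping a threshold over the predicted lesion probabilities, as in the NumPy sketch below. `roc_pr_points` and `roc_auc` are hypothetical helper names, and the sketch assumes that both lesion and non-lesion voxels are present.

```python
import numpy as np


def roc_pr_points(labels, scores):
    """Voxelwise ROC and precision-recall points for flattened scans.

    labels: binary ground-truth lesion mask (any shape)
    scores: predicted lesion probabilities (same shape)
    Returns (fpr, tpr, precision, recall), one entry per distinct score,
    each computed for the threshold "score >= that value".
    """
    y = np.asarray(labels, dtype=bool).ravel()
    s = np.asarray(scores, dtype=float).ravel()
    order = np.argsort(-s, kind="stable")  # sort voxels by descending score
    y, s = y[order], s[order]
    tp = np.cumsum(y)                       # true positives above each cut
    fp = np.cumsum(~y)                      # false positives above each cut
    last = np.r_[np.diff(s) != 0, True]     # keep one cut per run of tied scores
    tp, fp = tp[last], fp[last]
    n_pos, n_neg = y.sum(), (~y).sum()
    tpr = tp / n_pos                        # recall = true-positive rate
    fpr = fp / n_neg
    precision = tp / (tp + fp)
    return fpr, tpr, precision, tpr


def roc_auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule, starting at (0, 0)."""
    x = np.r_[0.0, fpr]
    y = np.r_[0.0, tpr]
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2))
```

For example, labels `[1, 0, 1, 0]` with scores `[0.9, 0.8, 0.7, 0.1]` give an AUC of 0.75, matching the fraction of lesion/non-lesion voxel pairs that are ranked correctly. scikit-learn's `roc_curve` and `precision_recall_curve` compute the same kind of points with more options.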

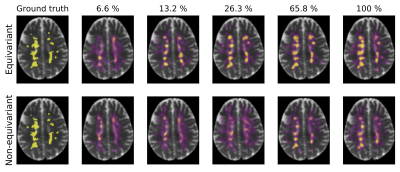

Segmentation of multiple-sclerosis lesions in a scan from the validation set (not used for training) using our equivariant model (top) and the non-rotation-equivariant model (bottom), each trained on reduced subsets of the training set of varying size (from left to right); the left column shows the ground-truth segmentation. While our equivariant model already produces fairly accurate segmentations when trained on 26.3% of the training scans, the segmentations of the non-rotation-equivariant model only become accurate when it is trained on 65.8% of the set, indicating better generalization of our model.