Kun Qian1, Tomoki Arichi1, A David Edwards1, and Jo V Hajnal1

1King's College London, London, United Kingdom

We describe a progressive calibration gaze interaction interface which provides accurate and robust gaze estimation despite head movement. It has great potential for use as a next-generation platform for naturalistic fMRI experiments.

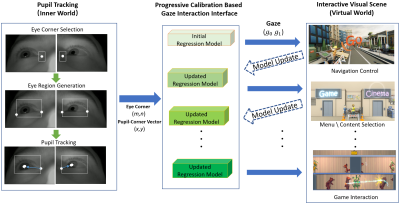

Figure 2. Overview of the system pipeline. In eye tracking (left column), the initial eye corner region for tracking is manually selected when the subject is ready to start. Eye corner and pupil tracking are based on DCF-CSR [3] and adaptive thresholding [4]. The gaze interface builds an initial regression model from a standard 9-point calibration [2]. It then converts gaze into interaction information with the visual content, which is used to progressively update the gaze regression model.
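The progressive update described in this caption can be illustrated with a minimal sketch. The text does not state the form of the regression model, so the example assumes an affine least-squares map from an eye feature vector (for instance, pupil position relative to the eye corner) to normalised screen coordinates. The class name `ProgressiveGazeRegressor`, its methods, and the synthetic data are all hypothetical. The sketch keeps the normal-equation sums, so samples gathered during interaction can be added after the initial 9-point calibration without storing earlier samples.

```python
class ProgressiveGazeRegressor:
    """Hypothetical affine gaze regressor (an assumption, not the authors' model).

    Stores the sums A^T A and A^T B so new samples can be folded in later.
    """

    def __init__(self):
        self.ata = [[0.0] * 3 for _ in range(3)]  # running sum of f f^T
        self.atb = [[0.0] * 2 for _ in range(3)]  # running sum of f * target
        self.coef = None

    @staticmethod
    def _features(vx, vy):
        # Affine feature vector: bias term plus the two eye-vector components.
        return (1.0, vx, vy)

    def add_sample(self, eye_vec, target):
        """Accumulate one (eye feature, screen target) pair."""
        f = self._features(*eye_vec)
        for i in range(3):
            for j in range(3):
                self.ata[i][j] += f[i] * f[j]
            for k in range(2):
                self.atb[i][k] += f[i] * target[k]

    def fit(self):
        """Solve (A^T A) W = A^T B by Gauss-Jordan elimination with pivoting."""
        n = 3
        m = [self.ata[i][:] + self.atb[i][:] for i in range(n)]
        for col in range(n):
            pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
            if abs(m[pivot][col]) < 1e-12:
                raise ValueError("degenerate calibration samples")
            m[col], m[pivot] = m[pivot], m[col]
            p = m[col][col]
            m[col] = [v / p for v in m[col]]
            for r in range(n):
                if r != col:
                    factor = m[r][col]
                    m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
        self.coef = [row[n:] for row in m]
        return self.coef

    def predict(self, eye_vec):
        """Map an eye feature vector to normalised screen coordinates."""
        f = self._features(*eye_vec)
        return tuple(sum(f[i] * self.coef[i][k] for i in range(3)) for k in range(2))


# Initial 9-point calibration on a 3x3 grid of normalised screen targets.
GRID = (0.1, 0.5, 0.9)
model = ProgressiveGazeRegressor()
for ty in GRID:
    for tx in GRID:
        eye_vec = ((tx - 0.5) / 4.0, (ty - 0.5) / 3.0)  # synthetic eye feature
        model.add_sample(eye_vec, (tx, ty))
model.fit()

# Progressive update: a later interaction supplies one more labelled sample
# (for example, the centre of an object the subject dwelt on), then refit.
model.add_sample(((0.3 - 0.5) / 4.0, (0.7 - 0.5) / 3.0), (0.3, 0.7))
model.fit()
print(model.predict((0.0, 0.0)))
```

A higher-order polynomial model would only change `_features` and the matrix size. Because every sample has equal weight here, a real system coping with head movement would probably need to down-weight or discard old samples, which this sketch does not attempt.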

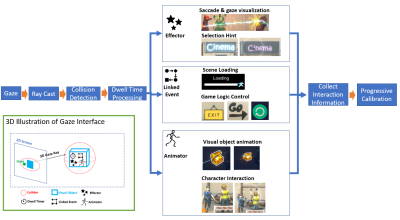

Gaze interaction interface overview. The interface converts gaze into a 3D ray and tests it for collision with a 3D object’s collider. Subjects see the visual object inside the collider in the 3D world. Dwell time records how long the ray continuously intersects the collider. Linked events trigger associated 3D world events. Effectors and animators trigger particle effects and the corresponding animation of visual objects as the dwell time increases.
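The ray test and dwell-time logic in this caption can be sketched as follows. The caption names neither the collider shape nor the camera model, so the example assumes a spherical collider and a pinhole camera at the origin looking along +z. The names `gaze_to_ray`, `ray_hits_sphere`, and `DwellTrigger`, the field of view, and the dwell threshold are hypothetical.

```python
import math


def gaze_to_ray(gaze_xy, fov_deg=60.0, aspect=16.0 / 9.0):
    """Map normalised screen gaze (0..1, origin top-left) to a camera-space ray."""
    half_h = math.tan(math.radians(fov_deg) / 2.0)
    half_w = half_h * aspect
    x = (2.0 * gaze_xy[0] - 1.0) * half_w
    y = (1.0 - 2.0 * gaze_xy[1]) * half_h
    return (0.0, 0.0, 0.0), (x, y, 1.0)


def ray_hits_sphere(origin, direction, center, radius):
    """Return True if the ray origin + t * direction (t >= 0) meets the sphere."""
    norm = math.sqrt(sum(c * c for c in direction))
    d = [c / norm for c in direction]
    oc = [c - o for o, c in zip(origin, center)]  # vector from origin to centre
    t_closest = sum(a * b for a, b in zip(oc, d))
    dist2 = sum(a * a for a in oc) - t_closest ** 2
    if dist2 > radius ** 2:
        return False  # the infinite line misses the sphere
    half_chord = math.sqrt(radius ** 2 - dist2)
    return t_closest + half_chord >= 0.0  # far intersection is in front


class DwellTrigger:
    """Accumulates continuous hit time and fires a linked event once per dwell."""

    def __init__(self, threshold_s, on_trigger):
        self.threshold_s = threshold_s
        self.on_trigger = on_trigger
        self.dwell_s = 0.0
        self.fired = False

    def update(self, hit, dt):
        if hit:
            self.dwell_s += dt
            if not self.fired and self.dwell_s >= self.threshold_s:
                self.fired = True
                self.on_trigger()
        else:
            # Looking away resets the dwell, so only continuous hits count.
            self.dwell_s = 0.0
            self.fired = False
        return self.dwell_s


# Simulated frames: gaze at the screen centre hits a sphere placed straight ahead.
events = []
trigger = DwellTrigger(threshold_s=0.5, on_trigger=lambda: events.append("linked event"))
collider_center, collider_radius = (0.0, 0.0, 5.0), 1.0
for gaze in [(0.5, 0.5), (0.5, 0.5), (0.0, 0.5), (0.5, 0.5), (0.5, 0.5), (0.5, 0.5)]:
    origin, direction = gaze_to_ray(gaze)
    hit = ray_hits_sphere(origin, direction, collider_center, collider_radius)
    trigger.update(hit, dt=0.25)
```

The value returned by `update` could drive the particle effects and animation as dwell time grows, while `on_trigger` stands in for the linked 3D world event.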