Antonio Candito1, Matthew D Blackledge1, Fabio Zugni2, Richard Holbrey1, Sebastian Schäfer3, Matthew R Orton1, Ana Ribeiro4, Matthias Baumhauer3, Nina Tunariu1, and Dow-Mu Koh1

1The Institute of Cancer Research, London, United Kingdom, 2IEO, European Institute of Oncology IRCCS, Milan, Italy, 3Mint Medical, Heidelberg, Germany, 4The Royal Marsden NHS Foundation Trust, London, United Kingdom

We developed a transfer learning model to classify normal bone and metastatic bone disease on whole-body diffusion-weighted imaging (WBDWI). The model shows promising results in classifying normal versus metastatic bone on individual WBDWI slices, potentially without requiring Dixon imaging.

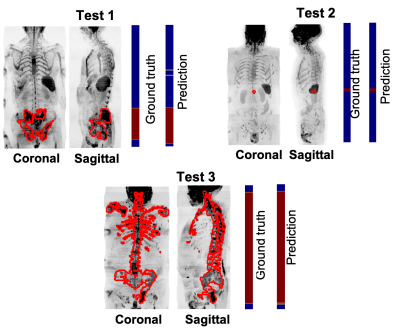

Figure 3. Model performance on three patients from the test data. Coronal and sagittal maximum-intensity projections (MIPs) of the SNRmap are shown, with the manual delineation of bone lesions by an experienced radiologist overlaid in red. The first colormap shows the ground truth: axial images annotated as with (red) or without (blue) metastatic bone disease. The second colormap shows the predictions of the trained transfer learning model.

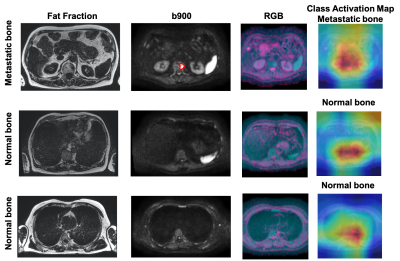

Figure 5. Axial fat fraction, b900, RGB image and class activation map (CAM) from test patient 2 in Figure 3. Fat fraction maps combined with b900 images are typically used for manual detection of bone disease. However, using the DWI data alone, our model was able to predict the presence of disease in one slice (top row, red outline) and the absence of disease at two other slice locations. The CAM highlights where the model is 'looking', that is, the image regions that lead to a particular decision. In the heatmap, red = important and blue = not important.
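
For readers unfamiliar with CAM heatmaps, the sketch below illustrates one common way such a map can be computed. It assumes the original class activation mapping formulation, in which the final convolutional feature maps are combined using the weights of the classification layer that follows global average pooling. The abstract does not state which CAM variant or network architecture was used, so the function name `class_activation_map`, the array shapes and the toy inputs are all illustrative assumptions rather than the authors' implementation.

```python
import numpy as np


def class_activation_map(feature_maps, class_weights):
    """Hypothetical helper: weighted sum of feature maps, rescaled to [0, 1].

    feature_maps  : array of shape (C, H, W), activations of the last
                    convolutional layer for one image slice (assumed layout).
    class_weights : array of shape (C,), weights linking each channel to the
                    class of interest (e.g. 'metastatic') after pooling.
    """
    # Weighted sum over the channel axis gives one (H, W) relevance map.
    cam = np.tensordot(class_weights, feature_maps, axes=([0], [0]))

    # Shift and scale so the least relevant pixel is 0 and the most is 1.
    cam = cam - cam.min()
    peak = cam.max()
    if peak > 0:
        cam = cam / peak
    return cam


# Toy inputs standing in for real network activations (not real data).
rng = np.random.default_rng(0)
features = rng.random((8, 7, 7))
weights = rng.normal(size=8)
heatmap = class_activation_map(features, weights)
```

In practice the low-resolution map would be upsampled to the slice size and overlaid on the image, with high values drawn in red and low values in blue, matching the colour convention described in the caption.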