Juan Liu1 and Kevin Koch2

1Yale University, New Haven, CT, United States, 2Medical College of Wisconsin, Milwaukee, WI, United States

We apply domain-specific batch normalization to address the domain adaptation problem of a DL-based QSM method that is trained on synthetic data and applied to real data.

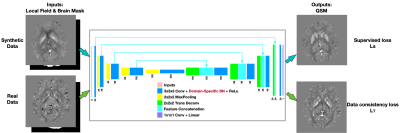

Fig 1. Neural network architecture of QSMInvNet+. It has an encoder-decoder structure with 9 convolutional layers (kernel size 3x3x3, same padding), 9x2 domain-specific batch normalization layers, 9 ReLU layers, 4 max pooling layers (pooling size 2x2x2, strides 2x2x2), 4 nearest-neighbor upsampling layers (size 2x2x2), 4 feature concatenations, and 1 convolutional layer (kernel size 1x1x1, linear activation). To address unsupervised domain adaptation to real data, domain-specific batch normalization layers are used while all other model parameters are shared.
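The idea of domain-specific batch normalization can be illustrated with a minimal sketch. This is not the authors' implementation: the class name `DomainSpecificBatchNorm`, the domain indices `SYNTHETIC` and `REAL`, and the `eps` and `momentum` values are assumptions chosen for illustration, and the inputs are plain per-channel values rather than 3D feature maps. The only point it shows is that each domain keeps its own normalization statistics and affine parameters, while everything outside the layer (for example, the convolution weights) would be shared.

```python
import math


class DomainSpecificBatchNorm:
    """Batch normalization with separate statistics and affine parameters per domain."""

    def __init__(self, num_channels, num_domains=2, eps=1e-5, momentum=0.1):
        self.eps = eps            # assumed value, not taken from the abstract
        self.momentum = momentum  # assumed value, not taken from the abstract
        # One independent set of parameters and running statistics per domain.
        self.gamma = [[1.0] * num_channels for _ in range(num_domains)]
        self.beta = [[0.0] * num_channels for _ in range(num_domains)]
        self.running_mean = [[0.0] * num_channels for _ in range(num_domains)]
        self.running_var = [[1.0] * num_channels for _ in range(num_domains)]

    def __call__(self, batch, domain, training=True):
        """Normalize `batch`, a list of samples, each a list of channel values."""
        n = len(batch)
        num_channels = len(batch[0])
        out = [[0.0] * num_channels for _ in range(n)]
        for c in range(num_channels):
            values = [sample[c] for sample in batch]
            if training:
                # Use the statistics of the current batch and update only
                # the running statistics that belong to this domain.
                mean = sum(values) / n
                var = sum((v - mean) ** 2 for v in values) / n
                m = self.momentum
                self.running_mean[domain][c] = (1 - m) * self.running_mean[domain][c] + m * mean
                self.running_var[domain][c] = (1 - m) * self.running_var[domain][c] + m * var
            else:
                # At inference, use the stored statistics of the chosen domain.
                mean = self.running_mean[domain][c]
                var = self.running_var[domain][c]
            scale = self.gamma[domain][c] / math.sqrt(var + self.eps)
            for i, v in enumerate(values):
                out[i][c] = (v - mean) * scale + self.beta[domain][c]
        return out


SYNTHETIC, REAL = 0, 1  # hypothetical domain indices
dsbn = DomainSpecificBatchNorm(num_channels=1)
synthetic_out = dsbn([[0.0], [2.0]], domain=SYNTHETIC)
real_out = dsbn([[10.0], [30.0]], domain=REAL)
```

In this toy run the two batches have very different means and variances, yet both are mapped to roughly zero mean and unit variance, because each domain is normalized with its own statistics. A single shared batch normalization layer would instead mix the statistics of synthetic and real inputs.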

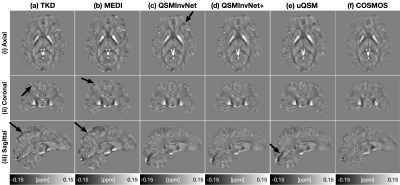

Fig 3. Comparison of QSM reconstructions on a multi-orientation dataset. TKD (a), MEDI (b), and uQSM (e) results show black shading artifacts in the axial plane and streaking artifacts in the sagittal plane. QSMInvNet (c) displays better quality than TKD and MEDI but shows subtle artifacts. QSMInvNet+ (d) results are of high quality, with clear details and no visible artifacts.