Florian Wiesinger1, Sandeep Kaushik1, Mathias Engström2, Mika Vogel1, Graeme McKinnon3, Maelene Lohezic1, Vanda Czipczer4, Bernadett Kolozsvári4, Borbála Deák-Karancsi4, Renáta Czabány4, Bence Gyalai4, Dorottya Hajnal4, Zsófia Karancsi4, Steven F. Petit5, Juan A. Hernandez Tamames5, Marta E. Capala5, Gerda M. Verduijn5, Jean-Paul Kleijnen5, Hazel Mccallum6, Ross Maxwell6, Jonathan J. Wyatt6, Rachel Pearson6, Katalin Hideghéty7, Emőke Borzasi7, Zsófia Együd7, Renáta Kószó7, Viktor Paczona7, Zoltán Végváry7, Suryanarayanan Kaushik3, Xinzeng Wang3, Cristina Cozzini1, and László Ruskó4

1GE Healthcare, Munich, Germany, 2GE Healthcare, Stockholm, Sweden, 3GE Healthcare, Waukesha, WI, United States, 4GE Healthcare, Budapest, Hungary, 5Erasmus MC, Rotterdam, Netherlands, 6Newcastle University, Newcastle, United Kingdom, 7University of Szeged, Szeged, Hungary


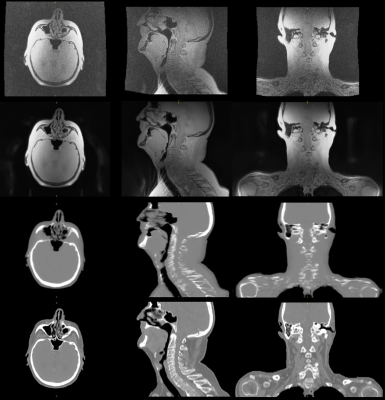

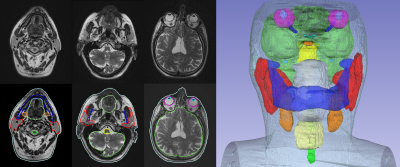

Deep learning provides powerful tools to address unsolved problems and unmet needs of MR-only Radiation Therapy Planning (RTP), namely synthetic CT conversion (required for accurate dose calculation) and time-consuming organ-at-risk (OAR) delineation.