Digital Poster

Quantitative Imaging II

Joint Annual Meeting ISMRM-ESMRMB & ISMRT 31st Annual Meeting • 07-12 May 2022 • London, UK

| Computer # | ||||

|---|---|---|---|---|

2601 |

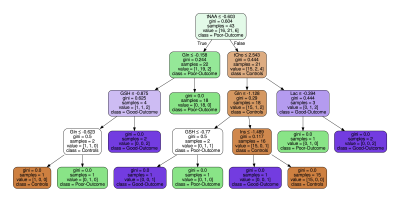

42 | Using Machine Learning to Identify Metabolite Spectral Patterns that Reflect Outcome after Cardiac Arrest

Marcia Sahaya Louis1,2, Huijun Vicky Liao2, Rohit Singh3, Ajay Joshi1, Jong Woo Lee4, and Alexander Lin2

1ECE, Boston University, Boston, MA, United States, 2Radiology, Brigham and Women's Hospital, Boston, MA, United States, 3Computer Science and Artificial Intelligence Lab, Massachusetts Institute of Technology, Cambridge, MA, United States, 4Brigham and Women's Hospital, Boston, MA, United States

More than half of patients who undergo targeted temperature management (TTM) after cardiac arrest do not survive hospitalization, and 50% of those who do survive suffer from long-term cognitive deficits. The goal of this study is to use machine learning methods to characterize the pattern of metabolic changes in patients with good and poor outcomes after cardiac arrest. A machine learning pipeline that incorporates z-scores, decision-tree modeling, principal component analysis, and a linear support vector machine was applied to MR spectroscopy data acquired after cardiac arrest. Results confirm that N-acetylaspartate and lactate are important markers, but other unexpected findings emerged as well.

|

||

2602 |

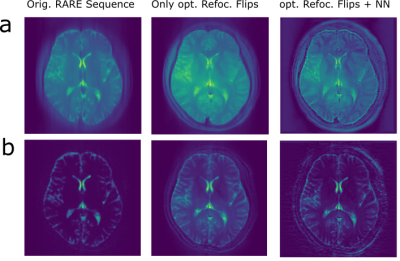

43 | Solving T2-blurring: Joint Optimization of Flip Angle Design and DenseNet Parameters for Reduced T2 Blurring in TSE Sequences

Hoai Nam Dang1, Jonathan Endres1, Felix Glang2, Simon Weinmüller1, Alexander Loktyushin2, Klaus Scheffler2, Arnd Doerfler1, Andreas Maier3, and Moritz Zaiss1,2

1Department of Neuroradiology, University Clinic Erlangen, Friedrich-Alexander University Erlangen-Nürnberg (FAU), Erlangen, Germany, 2Magnetic Resonance Center, Max-Planck-Institute for Biological Cybernetics, Tübingen, Germany, 3Department of Computer Science, Friedrich-Alexander University Erlangen-Nürnberg (FAU), Erlangen, Germany

We propose an end-to-end optimization approach to reduce T2 blurring in single-shot TSE sequences by performing a joint optimization of Flip Angle Design and DenseNet parameters, using the original transverse magnetization image at a certain TE as the target. Our approach generalizes well to in vivo measurements at 3T, revealing enhanced white and grey matter contrast and fine structures in the human brain.

|

||

2603 |

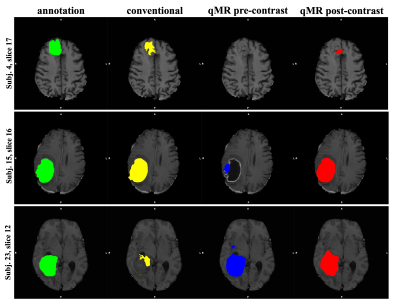

44 | Deep-learning based brain tumor segmentation using quantitative MRI

Iulian Emil Tampu1,2, Ida Blystad2,3,4, Neda Haj-Hosseini1, and Anders Eklund1,5

1Biomedical Engineering, Linkoping University, Linkoping, Sweden, 2Center for Medical Image Science and Visualization (CMIV), Linkoping University, Linkoping, Sweden, 3Department of Radiology in Linköping, Region Östergötland, Center for Diagnostics, Linkoping, Sweden, 4Department of Health, Medicine and Caring Sciences, Division of Diagnostics and Specialist Medicine, Linkoping University, Linkoping, Sweden, 5Department of Computer and Information Science, Linkoping University, Linkoping, Sweden

Manual annotation of gliomas in magnetic resonance (MR) images is a laborious task, and it is impossible to identify active tumor regions not enhanced in the conventionally acquired MR modalities. Recently, quantitative MRI (qMRI) has shown capability in capturing tumor-like values beyond the visible tumor structure. Aiming at addressing the challenges of manual annotation, qMRI data was used to train a 2D U-Net deep-learning model for brain tumor segmentation. Results on the available data show that a 7% higher Dice score is obtained when training the model on qMRI post-contrast images compared to when the conventional MR images are used.
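For readers unfamiliar with the metric reported above, the following is a minimal sketch of the Dice score for two binary masks. The function name, the flattened 1D mask representation, and the convention for two empty masks are assumptions made for illustration; the abstract does not describe the authors' implementation.

```python
def dice_score(pred, truth):
    """Dice coefficient 2*|A and B| / (|A| + |B|) for two flat binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same length")
    # Count voxels labelled as tumor in both masks.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Convention chosen here (an assumption): two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy masks flattened to 1D; these are not data from the study.
predicted = [1, 1, 0, 0, 1, 0]
annotated = [1, 0, 0, 0, 1, 1]
print(dice_score(predicted, annotated))
```

A score of 1 means the predicted and annotated regions coincide exactly, and 0 means they do not overlap at all.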

|

||

2604 |

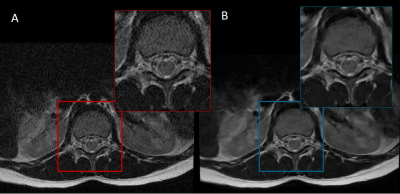

45 | Quantitative Radiomic Features of Deep Learning Image Reconstruction in MRI

Edward J Peake1, Andy N Priest1,2, and Martin J Graves1,2

1Imaging, Cambridge University Hospitals NHS Foundation Trust, Cambridge, United Kingdom, 2Department of Radiology, University of Cambridge, Cambridge, United Kingdom

Radiomic features are sensitive to changes in imaging parameters in MRI. This makes it challenging to develop robust machine learning models using imaging features. We explore the effect of clinically available deep learning image reconstruction on the performance of radiomic features. Correlation coefficient values varied (0.56 - 1.00) when comparing radiomic features of deep learning reconstructed images and ‘conventional’ MRI scans. The noise reduction level had a large impact on correlation coefficients, but variations were also significant between different types of imaging feature. Identification of highly correlated features may help identify more stable sets of radiomic features for machine learning.
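The feature-stability comparison described above can be sketched as follows, assuming a Pearson correlation computed per feature across matched scans. The abstract does not state which correlation coefficient was used, and the feature names, values, and the 0.9 threshold below are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


# Hypothetical feature values for four matched scans (not data from the study).
conventional = {
    "mean_intensity": [1.0, 2.0, 3.0, 4.0],
    "glcm_contrast": [1.0, 2.0, 3.0, 4.0],
}
deep_learning = {
    "mean_intensity": [2.0, 4.0, 6.0, 8.0],
    "glcm_contrast": [1.0, 3.0, 2.0, 4.0],
}

# Correlate each feature between the two reconstructions.
correlations = {
    name: pearson_r(conventional[name], deep_learning[name])
    for name in conventional
}

# Keep features whose correlation exceeds an illustrative threshold.
STABILITY_THRESHOLD = 0.9  # hypothetical value, not taken from the abstract
stable_features = [n for n, r in correlations.items() if r >= STABILITY_THRESHOLD]
print(stable_features)
```

Note that a high Pearson correlation tolerates a consistent scaling or offset between reconstructions. A stricter agreement measure would be needed if absolute feature values must match.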

|

||

2605 |

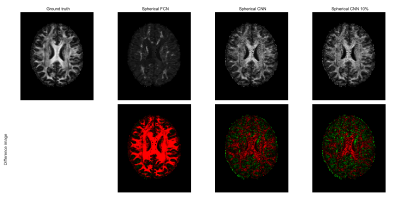

46 | Spherical-CNN based diffusion MRI parameter estimation is robust to gradient schemes and equivariant to rotation

Tobias Goodwin-Allcock1, Robert Gray2, Parashkev Nachev2, Jason McEwan3, and Hui Zhang1

1Department of Computer Science and Centre for Medical Image Computing, UCL, London, United Kingdom, 2Department of Brain Repair & Rehabilitation, Institute of Neurology, UCL, London, United Kingdom, 3Kagenova Limited, Guildford, United Kingdom

We demonstrate the advantages of spherical convolutional neural networks over conventional fully connected networks at estimating rotationally invariant microstructure indices. Fully-connected networks (FCN) have outperformed conventional model fitting for estimating microstructure indices, such as FA. However, these methods are not robust to changes in the diffusion-weighted image sampling scheme, nor are they rotationally equivariant. Recently, spherical-CNNs have been proposed as a solution to this problem. However, the advantages of spherical-CNNs have not been leveraged. We demonstrate both the robustness of spherical-CNNs to new gradient schemes and their rotational equivariance. This has the potential to decrease the number of training datapoints required.
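To illustrate what "rotationally invariant" means for an index such as FA, the sketch below computes FA directly from a diffusion tensor using the standard definition, FA = sqrt(3/2) · ‖D − MD·I‖ / ‖D‖ with Frobenius norms, and checks that rotating the tensor leaves it unchanged. This is not the authors' network. The tensor values, rotation angle, and helper names are assumptions chosen for the example.

```python
from math import cos, pi, sin, sqrt


def fractional_anisotropy(D):
    """FA of a symmetric 3x3 diffusion tensor, computed via Frobenius norms."""
    md = (D[0][0] + D[1][1] + D[2][2]) / 3.0  # mean diffusivity
    dev_sq = sum(
        (D[i][j] - (md if i == j else 0.0)) ** 2
        for i in range(3)
        for j in range(3)
    )
    norm_sq = sum(D[i][j] ** 2 for i in range(3) for j in range(3))
    return 0.0 if norm_sq == 0 else sqrt(1.5 * dev_sq / norm_sq)


def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]


def rotate_tensor(D, R):
    """Return R D R^T, the tensor expressed after rotating the frame by R."""
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    return matmul(matmul(R, D), Rt)


# Illustrative prolate tensor in mm^2/s (hypothetical values).
D = [[1.7e-3, 0.0, 0.0], [0.0, 0.3e-3, 0.0], [0.0, 0.0, 0.3e-3]]

# Rotation about the z axis by an arbitrary angle.
theta = pi / 5
Rz = [
    [cos(theta), -sin(theta), 0.0],
    [sin(theta), cos(theta), 0.0],
    [0.0, 0.0, 1.0],
]

fa_original = fractional_anisotropy(D)
fa_rotated = fractional_anisotropy(rotate_tensor(D, Rz))
print(abs(fa_original - fa_rotated) < 1e-9)
```

An estimator of FA should therefore return the same value when the subject, or equivalently the gradient scheme, is rotated. That is the property the abstract tests.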

|

||

2606 |

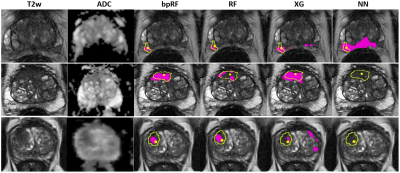

47 | Comparison of machine learning methods for detection of prostate cancer using bpMRI radiomics features

Ethan J Ulrich1, Jasser Dhaouadi1, Robben Schat2, Benjamin Spilseth2, and Randall Jones1

1Bot Image, Omaha, NE, United States, 2Radiology, University of Minnesota, Minneapolis, MN, United States

Multiple prostate cancer detection AI models—including random forest, neural network, XGBoost, and a novel boosted parallel random forest (bpRF)—are trained and tested using radiomics features from 958 bi-parametric MRI (bpMRI) studies from 5 different MRI platforms. After data preprocessing—consisting of prostate segmentation, registration, and intensity normalization—radiomic features are extracted from the images at the pixel level. The AI models are evaluated using 5-fold cross-validation for their ability to detect and classify cancerous prostate lesions. The free-response ROC (FROC) analysis demonstrates the superior performance of the bpRF model at detecting prostate cancer and reducing false positives.
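The 5-fold cross-validation mentioned above can be sketched as an index split. Splitting at the study level, rather than at the pixel level, is an assumption made here so that pixels from one study never appear in both the training and test sets; the abstract does not say how the folds were formed. The function name and seed are hypothetical.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Shuffle once with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# 958 studies, as in the abstract; each index stands for one study.
splits = list(k_fold_indices(958, k=5))
for train, test in splits:
    print(len(train), len(test))
```

Each study is used for testing exactly once, and the model evaluated on a fold never sees that fold during training.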

|

||

2607 |

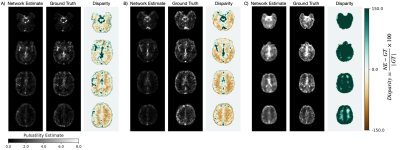

48 | Isolating cardiac-related pulsatility in blood oxygenation level-dependent MRI with deep learning

Jake Valsamis1, Nicholas Luciw1, Nandinee Haq1, Sarah Atwi1, Simon Duchesne2,3, William Cameron1, and Bradley J MacIntosh1,4

1Physical Sciences, Sunnybrook Research Institute, Toronto, ON, Canada, 2Radiology Department, Faculty of Medicine, Laval University, Québec, QC, Canada, 3Quebec City Mental Health Institute, Québec, QC, Canada, 4Department of Medical Biophysics, University of Toronto, Toronto, ON, Canada

Persistent exposure to highly pulsatile blood can damage the brain’s microvasculature. A convenient method for measuring cerebral pulsatility would allow investigation into its relationship with vascular dysfunction and cognitive decline. In this work, we propose a convolutional neural network (CNN) based deep learning solution to estimate cerebral pulsatility using only the frequency content from BOLD MRI scans. Various frequency component inputs were assessed, and echo time dependence was evaluated with a 5-fold cross-validation. Pulsatility was estimated from BOLD MRI data acquired on a different scanner to assess generalizability. The CNN reliably estimated pulsatility and was robust to various scan parameters.

|

||

2608 |

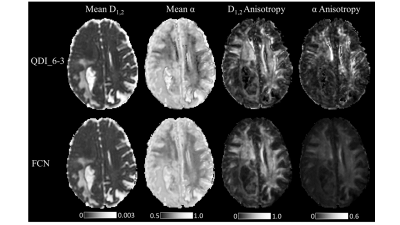

49 | Image enhancement of Quasi-Diffusion Imaging using a fully connected neural network

Ian Robert Storey1, Catherine Anne Spilling1,2, Xujiong Ye3, Thomas Richard Barrick1, and Franklyn Arron Howe1

1St George's, University of London, London, United Kingdom, 2King's College London, London, United Kingdom, 3University of Lincoln, Lincoln, United Kingdom

A fully connected neural network (FCN) was trained to map a short diffusion weighted image acquisition to high quality Quasi-Diffusion Imaging (QDI) parameter maps. The FCN produced denoised and enhanced QDI parameter maps compared to weighted least squares fitting of data to the QDI model. The FCN generalises to unseen pathology, such as grade IV glioma dMRI data, and can produce high quality QDI tensor maps from clinically feasible 2 minute data acquisitions. An FCN further enhances the ability of QDI to provide non-Gaussian diffusion imaging within clinically feasible acquisition times.

|

||

2609 |

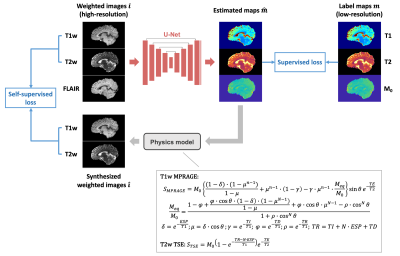

50 | Hybrid supervised and self-supervised deep learning for quantitative mapping from weighted images using low-resolution labels

Shihan Qiu1,2, Anthony G. Christodoulou1,2, Yibin Xie1, and Debiao Li1,2

1Biomedical Imaging Research Institute, Cedars-Sinai Medical Center, Los Angeles, CA, United States, 2Department of Bioengineering, UCLA, Los Angeles, CA, United States

Deep learning methods have been developed to estimate quantitative maps from conventional weighted images, which has the potential to improve the availability and clinical impact of quantitative MRI. However, high-resolution labels required for network training are not commonly available in practice. In this work, a hybrid supervised and physics-informed self-supervised loss function was proposed to train parameter estimation networks when only limited low-resolution labels are accessible. By taking advantage of high-resolution information from the input weighted images, the proposed method generated sharp quantitative maps and had improved performance over the supervised training method purely relying on the low-resolution labels.

|
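The idea of combining a supervised term on low-resolution labels with a physics-informed self-supervised term can be sketched on a 1D toy signal. Everything specific here is an assumption made for illustration and is not taken from the abstract: the mono-exponential T2 signal model S = S0·exp(−TE/T2), block-average downsampling, the mean-squared-error terms, and the weighting factor.

```python
from math import exp


def downsample(values, factor):
    """Average non-overlapping blocks of `factor` samples."""
    if len(values) % factor != 0:
        raise ValueError("length must be a multiple of the factor")
    return [
        sum(values[i:i + factor]) / factor
        for i in range(0, len(values), factor)
    ]


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def hybrid_loss(pred_t2, lowres_label, weighted_image, te,
                s0=1.0, factor=2, weight=0.5):
    """Supervised low-resolution term plus self-supervised signal-model term."""
    # Supervised: the downsampled prediction should match the low-res label.
    supervised = mse(downsample(pred_t2, factor), lowres_label)
    # Self-supervised: the weighted image synthesized from the predicted map
    # (assumed mono-exponential model) should match the high-res input image.
    synthesized = [s0 * exp(-te / t2) for t2 in pred_t2]
    self_supervised = mse(synthesized, weighted_image)
    return supervised + weight * self_supervised


# Toy example (hypothetical values): T2 in ms, TE in ms.
te = 80.0
true_t2 = [80.0, 100.0, 60.0, 40.0]
weighted = [exp(-te / t2) for t2 in true_t2]
lowres = downsample(true_t2, 2)

sharp_loss = hybrid_loss(true_t2, lowres, weighted, te)
# A blurred map matches the low-res label exactly but loses fine detail.
blurred_loss = hybrid_loss([90.0, 90.0, 50.0, 50.0], lowres, weighted, te)
print(sharp_loss < blurred_loss)
```

In this toy case the blurred map has zero supervised error, so only the self-supervised term distinguishes it from the sharp map. This mirrors the abstract's point that the input weighted images supply the high-resolution information.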

||
