Combined Educational & Scientific Session

Artificial Intelligence for Brain Tumors

ISMRM & ISMRT Annual Meeting & Exhibition • 03-08 June 2023 • Toronto, ON, Canada

| 13:30 | Role of AI in Brain Tumors: A Clinician Perspective | Javier Villanueva-Meyer |

| 13:50 | Role of AI in Brain Tumors: Technical Challenges & Future Potential | Anahita Fathi Kazerooni |

| 14:10 | 0528. |

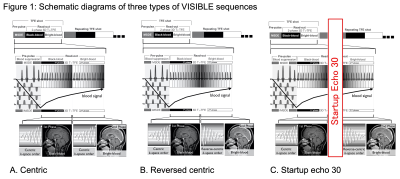

VISIBLE: Improvement in Vessel Visibility and Application of Machine Learning to Detect Brain Metastases

Kazufumi Kikuchi1, Osamu Togao2, Koji Yamashita3, Makoto Obara4, and Kousei Ishigami1

1Department of Clinical Radiology, Graduate School of Medical Sciences, Kyushu University, Fukuoka, Japan, 2Department of Molecular Imaging & Diagnosis, Graduate School of Medical Sciences, Kyushu University, Fukuoka, Japan, 3Department of Radiology Informatics & Network, Graduate School of Medical Sciences, Kyushu University, Fukuoka, Japan, 4Philips Japan, Tokyo, Japan

Keywords: Tumors, Machine Learning/Artificial Intelligence, Brain metastases

This study aimed to improve vessel visibility by modifying k-space filling and to verify the usefulness of volume isotropic simultaneous interleaved bright- and black-blood examination (VISIBLE) for detecting brain metastases with machine learning (ML). We tested three VISIBLE sequences with different k-space filling orders and counted the number of vessels in each. We also evaluated an ML model using VISIBLE images. The number of vessels was lower with the Centric and Reversed centric sequences than with MPRAGE, but comparable with the Startup echo 30 sequence. Our ML model achieved high sensitivity (97%), with no differences among the three sequences.

| 14:18 | 0519. |

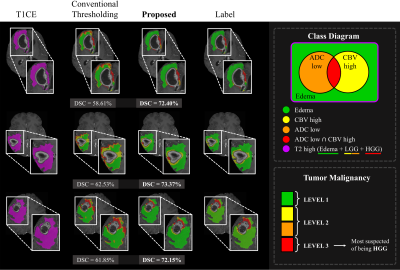

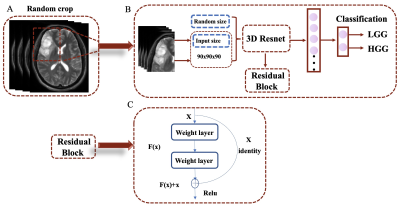

Two-Stage Deep Learning with Multi-Pathway Network for Brain Tumor Segmentation and Malignancy Identification From MR Images

Yoonseok Choi1, Mohammed A Al-masni2, Hyeok Park1, Jun-ho Kim1, Dong-Hyun Kim1, and Roh-Eul Yoo3

1Yonsei University, Seoul, Korea, Republic of, 2Sejong University, Seoul, Korea, Republic of, 3Seoul National University Hospital, Seoul, Korea, Republic of

Keywords: Tumors, Brain

Accurate segmentation of contrast-enhancing brain tumors plays an important role in surgical planning for high-grade gliomas. In addition, precise stratification of malignancy risk within the non-enhancing T2-hyperintense region helps tailor the radiation dose to that risk and prevents normal brain tissue from being unnecessarily exposed to radiation. In this work, we 1) segment brain tumors using deep learning and 2) provide more detailed segmentation results that show the malignancy risk within the T2-hyperintense region. We use a two-stage framework in which foreground cropping restricts the images to a region of interest, so that the model can focus only on the tumor.
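The abstract does not say how the foreground crop is computed. A minimal sketch, assuming the first stage yields a binary tumor mask and the crop is that mask's bounding box padded by a fixed margin (the function names and the `margin` value are hypothetical, not the authors' code):

```python
import numpy as np

def crop_to_foreground(volume, mask, margin=8):
    """Crop `volume` to the bounding box of nonzero voxels in `mask`, padded by `margin` voxels.

    Returns the cropped volume and the slices used, so that second-stage
    predictions can later be pasted back into the full-size grid.
    """
    coords = np.argwhere(mask > 0)
    if coords.size == 0:
        raise ValueError("mask has no foreground voxels")
    lo = np.maximum(coords.min(axis=0) - margin, 0)              # clip at the image start
    hi = np.minimum(coords.max(axis=0) + margin + 1, mask.shape)  # clip at the image end
    slices = tuple(slice(int(a), int(b)) for a, b in zip(lo, hi))
    return volume[slices], slices

def paste_back(pred_crop, slices, full_shape):
    """Place a second-stage prediction made on the crop back into a zero-filled full volume."""
    out = np.zeros(full_shape, dtype=pred_crop.dtype)
    out[slices] = pred_crop
    return out
```

Returning the slices alongside the crop keeps the second-stage output aligned with the original image, which is what allows a cropped model to produce a full-size segmentation.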

| 14:26 | 0520. |

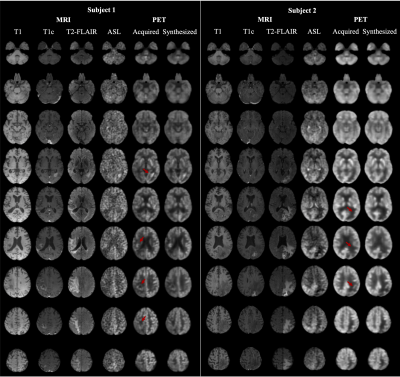

Predicting FDG PET from Multi-contrast MRIs using Deep Learning in Patients with Brain Neoplasms

Jiahong Ouyang1, Kevin T. Chen2, Jarrett Rosenberg1, and Greg Zaharchuk1

1Stanford University, Stanford, CA, United States, 2National Taiwan University, Taipei, Taiwan

Keywords: Machine Learning/Artificial Intelligence, PET/MR

PET is a widely used imaging technique, but it requires exposing subjects to radiation and is not available at most medical centers worldwide. Here, we propose synthesizing FDG-PET images from multi-contrast MR images using a U-Net-based network with attention modules and transformer blocks. Experiments on a dataset of 87 brain lesions in 59 patients demonstrated that the proposed method can generate high-quality PET images from MR images without the need for radiotracer injection. We also demonstrate methods for handling missing or corrupted sequences.
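The abstract mentions methods for missing or corrupted sequences without describing them. One common pattern, shown here only as an illustrative sketch and not as the authors' method, zero-fills absent contrasts, appends a per-contrast availability channel, and randomly drops contrasts during training so the network learns to cope with incomplete inputs. The contrast names, function names, and drop probability are assumptions; a corrupted sequence would simply be passed in as missing.

```python
import numpy as np

CONTRASTS = ("T1", "T1c", "T2", "FLAIR")  # hypothetical input contrast set

def build_input(images, contrasts=CONTRASTS):
    """Stack contrasts as channels; zero-fill missing ones and append availability channels.

    `images` maps contrast name -> array; at least one contrast must be present.
    """
    shape = next(iter(images.values())).shape
    chans, avail = [], []
    for name in contrasts:
        img = images.get(name)
        present = img is not None
        chans.append(img if present else np.zeros(shape, dtype=np.float32))
        avail.append(np.full(shape, float(present), dtype=np.float32))  # 1 = acquired, 0 = missing
    return np.stack(chans + avail, axis=0).astype(np.float32)

def drop_contrasts(images, p=0.25, rng=None):
    """Training-time augmentation: mark each contrast missing with probability p, keeping at least one."""
    rng = rng or np.random.default_rng()
    names = list(images)
    keep = [n for n in names if rng.random() >= p]
    if not keep:
        keep = [names[int(rng.integers(len(names)))]]
    return {n: images[n] for n in keep}
```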

| 14:34 | 0521. |

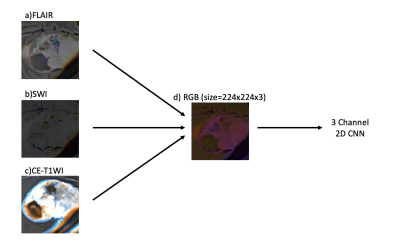

A Pretrained CNN Model Using Multiparametric MRI to Identify WHO Tumor Grade of Meningiomas

Sena Azamat1,2, Buse Buz-Yaluğ1, Alpay Ozcan3, Ayça Ersen Danyeli4,5,6, Necmettin Pamir5,7, Alp Dinçer4,8, Koray Ozduman4,7, and Esin Ozturk-Isik1,4

1Institute of Biomedical Engineering, Bogazici University, Istanbul, Turkey, 2Department of Radiology, Basaksehir Cam and Sakura City Hospital, Istanbul, Turkey, 3Electric and Electronic Engineering Department, Bogazici University, Istanbul, Turkey, 4Brain Tumor Research Group, Acibadem University, Istanbul, Turkey, 5Center for Neuroradiological Applications and Research, Acibadem University, Istanbul, Turkey, 6Department of Medical Pathology, Acibadem University, Istanbul, Turkey, 7Department of Neurosurgery, Acibadem University, Istanbul, Turkey, 8Department of Radiology, Acibadem University, Istanbul, Turkey

Keywords: Tumors, Machine Learning/Artificial Intelligence

Meningiomas are the most common primary extra-axial intracranial tumors in adults, and meningioma grade helps predict patient prognosis. Sixty-two patients with preoperative MRI were included in this IRB-approved study. Whole-tumor volumes were segmented on FLAIR images and then co-registered onto SWI. A pretrained convolutional neural network (CNN) was used to classify meningiomas as high- or low-grade based on SWI, CE-T1W, and FLAIR MRI. With data augmentation, the pretrained CNN identified meningioma grade with an accuracy of 80.2% (sensitivity = 82.6%, specificity = 78.1%).
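Accuracy, sensitivity, and specificity all come from the same confusion matrix. A minimal sketch of how they are computed, assuming high grade is coded as the positive class (the function name is hypothetical):

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity, and specificity from paired true/predicted labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)

    def ratio(num, den):
        return num / den if den else float("nan")  # undefined when a class is absent

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": ratio(tp, tp + fn),  # high-grade cases correctly identified
        "specificity": ratio(tn, tn + fp),  # low-grade cases correctly identified
    }
```

Because accuracy is the prevalence-weighted average of sensitivity and specificity, it always lies between the two, as it does for the values reported above.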

| 14:42 | 0522. |

The MRI-based 3D-ResNet-101 deep learning model for predicting preoperative grading of gliomas: a multicenter study

Darui Li1, Wanjun Hu1, Tiejun Gan1, Guangyao Liu1, Laiyang Ma1, Kai Ai2, and Jing Zhang1

1Department of Magnetic Resonance, Lanzhou University Second Hospital, Lanzhou, China, 2Philips Healthcare, Xi'an, China

Keywords: Tumors, Machine Learning/Artificial Intelligence, Deep learning

Accurate, non-invasive preoperative prediction of glioma grade remains challenging. To predict whether gliomas are high- or low-grade, we constructed a 3D-ResNet-101 deep learning model using multicenter data: 708 glioma patients from the Second Hospital of Lanzhou University and 211 patients from the TCIA database. The model achieved areas under the curve (AUCs) of 0.97 in the test cohort and 0.89 in the external test cohort. This method can be used for non-invasive prediction of glioma grade before surgery.
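An AUC can be read as the probability that a randomly chosen high-grade case receives a higher model score than a randomly chosen low-grade case. A minimal sketch of that pairwise (Mann-Whitney) computation, assuming label 1 marks high grade and counting ties as half a win (the function name is hypothetical):

```python
def auc_mann_whitney(scores, labels):
    """Area under the ROC curve via pairwise comparison of positive vs. negative scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties contribute half
    return wins / (len(pos) * len(neg))
```

The double loop is quadratic in cohort size; it is written this way for clarity rather than speed.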

| 14:50 | 0523. |

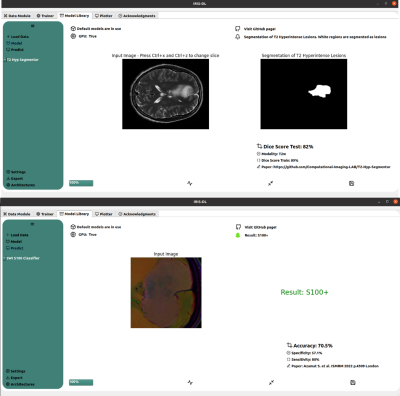

IRIS-DL: A Deep Learning Software Tool for Identifying Genetic Mutations in Gliomas and Meningiomas

Abdullah Bas1, Banu Sacli-Bilmez1, Buse Buz-Yalug1, Esra Sumer1, Sena Azamat1, Gokce Hale Hatay1, Ayca Ersen Danyeli2,3, Ozge Can2,4, Koray Ozduman2,5, Alp Dincer2,6, and Esin Ozturk-Isik1,2

1Institute of Biomedical Engineering, Bogazici University, Istanbul, Turkey, 2Brain Tumor Research Group, Acibadem University, Istanbul, Turkey, 3Department of Medical Pathology, Acibadem University, Istanbul, Turkey, 4Department of Biomedical Engineering, Acibadem University, Istanbul, Turkey, 5Department of Neurosurgery, Acibadem University, Istanbul, Turkey, 6Department of Radiology, Acıbadem University, Istanbul, Turkey

Keywords: Tumors, Machine Learning/Artificial Intelligence, Deep Learning

Intelligent Radiological Imaging Systems (IRIS)-DL is a deep learning software tool that includes libraries for segmenting tumor regions and identifying several genetic mutations in gliomas and meningiomas. The tool has three modules: “Model Library”, “Trainer”, and “Plotter”. The “Model Library” module lets users run pre-trained models on their local data. The “Trainer” module creates custom AI models (conventional machine learning, artificial neural networks, and deep learning) on the user's data. Finally, the “Plotter” module supports data visualization and exploratory data analysis.

| 14:58 | 0524. |

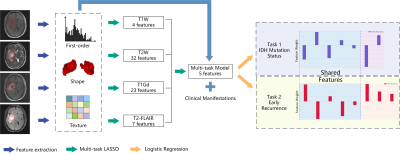

Multi-Task Radiomics Approach for Prediction of IDH Mutation Status and Early Recurrence of Gliomas from Preoperative MRI

Hongxi Yang1, Ankang Gao2, Yida Wang1, Yong Zhang2, Jingliang Cheng2, Yang Song3, and Guang Yang1

1Shanghai Key Laboratory of Magnetic Resonance, East China Normal University, Shanghai, China, 2Department of MRI, The First Affiliated Hospital of Zhengzhou University, Zhengzhou, China, 3MR Scientific Marketing, Siemens Healthcare, Shanghai, China

Keywords: Tumors, Brain

We retrospectively enrolled 243 patients to develop a multi-task radiomics approach that simultaneously predicts IDH mutation status and early recurrence in patients with WHO grade II-IV gliomas from preoperative multi-parametric MRI (mp-MRI). First, multi-task LASSO was used to identify features shared between the two tasks; these were then combined with task-specific features selected recursively to build the radiomics models. The models achieved test AUCs of 0.826 for IDH mutation status identification and 0.770 for early recurrence prediction. The shared features allowed the models to achieve satisfactory performance with a minimal number of features, avoiding overfitting and making the models more interpretable.
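Multi-task LASSO keeps or discards each feature jointly across tasks by penalizing the L2 norm of that feature's coefficient row, which is why the surviving features are shared by both tasks. A minimal NumPy sketch using proximal gradient descent, assuming standardized radiomics features and the two binary labels treated as regression targets (the same objective that scikit-learn's `MultiTaskLasso` minimizes); the function names are hypothetical and the recursive task-specific selection step is not shown:

```python
import numpy as np

def multitask_lasso(X, Y, alpha, n_iter=500):
    """Minimize (1/(2n))||Y - XW||_F^2 + alpha * sum_j ||W[j, :]||_2 by proximal gradient (ISTA).

    X: (n_samples, n_features), standardized; Y: (n_samples, n_tasks).
    """
    n, p = X.shape
    W = np.zeros((p, Y.shape[1]))
    step = n / np.linalg.norm(X, 2) ** 2  # 1 / Lipschitz constant of the smooth term's gradient
    for _ in range(n_iter):
        grad = X.T @ (X @ W - Y) / n
        Z = W - step * grad
        norms = np.linalg.norm(Z, axis=1, keepdims=True)
        # Group soft-thresholding: shrink each feature's row, zeroing it for all tasks at once
        W = np.maximum(0.0, 1.0 - step * alpha / np.maximum(norms, 1e-12)) * Z
    return W

def shared_features(W, tol=1e-8):
    """Indices of features with a nonzero coefficient row, i.e. selected for every task."""
    return np.flatnonzero(np.linalg.norm(W, axis=1) > tol)
```

Larger `alpha` values zero out more rows, trading fit for a smaller shared feature set.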

| 15:06 | 0525. |

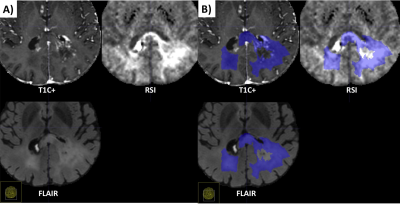

Differentiation of recurrent tumor from post-treatment changes in Glioblastoma patients using Deep Learning and Restriction Spectrum Imaging

Louis Gagnon1, Diviya Gupta1, Nathan S White2, Vaness Goodwill3, Carrie McDonald4, Thomas Beaumont5, Tyler M Seibert1,4,6, Jona Hattangadi-Gluth4, Santosh Kesari7, Jessica Schulte8, David Piccioni8, Anders M Dale1,8, Nikdokht Farid1, and Jeffrey D Rudie1

1Department of Radiology, University of California San Diego, La Jolla, CA, United States, 2Cortechs.ai, San Diego, CA, United States, 3Department of Pathology, University of California San Diego, La Jolla, CA, United States, 4Department of Radiation Medicine and Applied Sciences, University of California San Diego, La Jolla, CA, United States, 5Department of Neurological Surgery, University of California San Diego, La Jolla, CA, United States, 6Department of Bioengineering, University of California San Diego, La Jolla, CA, United States, 7Department of Translational Neurosciences, Pacific Neuroscience Institute and Saint John’s Cancer Institute at Providence Saint John’s Health Center, Santa Monica, CA, United States, 8Department of Neuroscience, University of California San Diego, La Jolla, CA, United States

Keywords: Tumors, Diffusion/other diffusion imaging techniques

Differentiating recurrent tumor from post-treatment changes in glioblastoma is challenging. Using restriction spectrum imaging (RSI) and deep learning, we accurately identified and segmented residual and recurrent enhancing and non-enhancing cellular tumor on post-treatment brain MRI. Including RSI in the deep learning model improved tumor segmentation because RSI can separate cellular tumor from peritumoral edema and treatment-related enhancement. The volume of cellular tumor was also predictive of survival. Our results suggest that combining deep learning and RSI may identify recurrent tumor in glioblastoma patients, which could improve targeted treatments and guide clinical decision-making.

| 15:14 | 0526. |

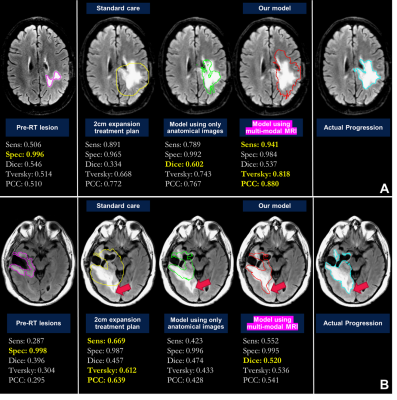

Defining radiation target volumes for glioblastoma from predictions of tumor recurrence with AI and diffusion & metabolic MRI

Nate Tran1,2,3, Jacob Ellison1,2,3, Tracy Luks1, Yan Li1,3, Angela Jakary1, Oluwaseun Adegbite1,2, Ozan Genc1,3, Bo Liu1,2, Hui Lin2,4, Javier Villanueva-Meyer1,3, Olivier Morin4, Steve Braunstein4, Nicholas Butowski5, Jennifer Clarke5, Susan M. Chang5, and Janine M. Lupo1,2,3

1Department of Radiology and Biomedical Imaging, University of California, San Francisco, San Francisco, CA, United States, 2UC Berkeley - UCSF Graduate Program in Bioengineering, University of California, San Francisco, San Francisco, CA, United States, 3Center for Intelligent Imaging, University of California, San Francisco, San Francisco, CA, United States, 4Department of Radiation Oncology, University of California, San Francisco, San Francisco, CA, United States, 5Department of Neurological Surgery, University of California, San Francisco, San Francisco, CA, United States

Keywords: Tumors, Radiotherapy

Using pre-radiotherapy anatomical, diffusion, and metabolic MRI from 42 patients with newly diagnosed GBM, we first used random forest models to identify voxels that later exhibit either contrast-enhancing or T2 lesion progression. We then applied convolutional encoder-decoder neural networks to pre-radiotherapy imaging to segment subsequent tumor progression. Compared with the standard uniform 2 cm expansion of the anatomical lesion used to define the radiation target volume, the resulting predicted region better covered the actual tumor progression while sparing normal brain. This shows that multi-parametric MRI with deep learning has the potential to assist in future radiotherapy treatment planning.
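For context, the standard target volume the abstract compares against is a uniform 2 cm expansion of the anatomical lesion. A minimal sketch of that expansion, together with one possible way (an assumption, not necessarily the authors' metric) to score how much later progression a target covers and how much non-progressing brain it includes; the function names are hypothetical:

```python
import numpy as np

def expand_mask(mask, margin_mm, spacing_mm, chunk=4096):
    """Return all voxels within `margin_mm` (Euclidean, in mm) of any lesion voxel.

    Brute-force nearest-lesion distance: adequate for small toy grids only.
    """
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        return np.zeros_like(mask)
    spacing = np.asarray(spacing_mm, dtype=float)
    lesion = np.argwhere(mask) * spacing                               # (m, ndim) in mm
    grid = np.indices(mask.shape).reshape(mask.ndim, -1).T * spacing  # (N, ndim) in mm
    out = np.zeros(mask.size, dtype=bool)
    for start in range(0, len(grid), chunk):                           # bound memory use
        block = grid[start:start + chunk]
        d2 = ((block[:, None, :] - lesion[None, :, :]) ** 2).sum(axis=-1)
        out[start:start + chunk] = d2.min(axis=1) <= margin_mm ** 2
    return out.reshape(mask.shape)

def coverage_and_normal_volume(target, progression, brain, voxel_ml):
    """Fraction of later progression inside `target`, and target volume (mL) in non-progressing brain."""
    target, progression, brain = (np.asarray(a, dtype=bool) for a in (target, progression, brain))
    coverage = (target & progression).sum() / max(int(progression.sum()), 1)
    normal_ml = (target & brain & ~progression).sum() * voxel_ml
    return float(coverage), float(normal_ml)
```

For the clinical 2 cm margin, `margin_mm=20.0`. On full-size volumes, `scipy.ndimage.distance_transform_edt(~mask, sampling=spacing_mm) <= margin_mm` gives the same expansion far more efficiently. A predicted target (for example, a thresholded network output) can be scored with the same coverage function for comparison.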

| 15:22 | 0527. |

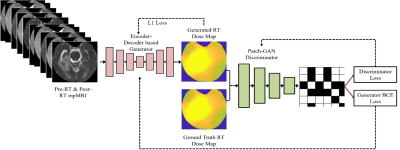

Predicting Post-Stereotactic Radiotherapy Magnetic Resonance Images: A Proof-of-Concept Study in Breast Cancer Metastases to the Brain

Shraddha Pandey1,2, Tugce Kutuk3, Matthew N Mills4, Mahmoud Abdalah5, Olya Stringfield5, Kujtim Latifi4, Wilfrido Moreno1, Kamran Ahmed4, and Natarajan Raghunand2

1Electrical Engineering, University of South Florida, Tampa, FL, United States, 2Department of Cancer Physiology, H. Lee Moffitt Cancer Center and Research Institute, Tampa, FL, United States, 3Department of Radiation Oncology, Baptist Health South Florida, Miami, FL, United States, 4Department of Radiation Oncology, H. Lee Moffitt Cancer Center and Research Institute, Tampa, FL, United States, 5Quantitative Imaging Shared Service, H. Lee Moffitt Cancer Center and Research Institute, Tampa, FL, United States

Keywords: Tumors, Radiotherapy, Image Prediction

Stereotactic radiosurgery (SRS) can provide effective local control of breast cancer metastases to the brain while limiting damage to surrounding healthy tissue. Knowledge-based algorithms that reduce the manual effort of radiation dose planning have been reported, but they do not currently provide voxel-level dose prescriptions optimized for tumor control and avoidance of radionecrosis and associated toxicity. Assuming that a voxelwise relationship exists between pre-SRS MR images, the radiotherapy (RT) dose map, and the resulting post-SRS MR images, we investigated a deep learning framework that predicts the post-SRS images from the pre-SRS images and the dose map.
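Under the stated voxelwise assumption, the simplest possible baseline is a linear model relating each voxel's pre-SRS intensity and delivered dose to its post-SRS intensity. The sketch below fits such a model by least squares; it is a swapped-in illustration of the voxelwise assumption, not the authors' deep learning framework, and the function names are hypothetical.

```python
import numpy as np

def fit_voxelwise_linear(pre, dose, post, mask=None):
    """Least-squares fit of post ≈ a*pre + b*dose + c, pooling all (masked) voxels."""
    sel = np.ones(pre.shape, dtype=bool) if mask is None else np.asarray(mask, dtype=bool)
    A = np.column_stack([pre[sel], dose[sel], np.ones(int(sel.sum()))])  # design matrix
    coef, *_ = np.linalg.lstsq(A, post[sel], rcond=None)
    return coef  # array([a, b, c])

def predict_voxelwise_linear(coef, pre, dose):
    """Apply the fitted voxelwise model to new pre-SRS images and dose maps."""
    a, b, c = coef
    return a * pre + b * dose + c
```

A deep network replaces this fixed linear map with a learned, spatially contextual one, but it consumes the same inputs: the pre-SRS images and the dose map, aligned voxel by voxel.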

The International Society for Magnetic Resonance in Medicine is accredited by the Accreditation Council for Continuing Medical Education to provide continuing medical education for physicians.