Digital Poster

ML/AI for Data Synthesis, Generative Models & Quantitative MRI I

ISMRM & ISMRT Annual Meeting & Exhibition • 03-08 June 2023 • Toronto, ON, Canada

| Computer # | |||

|---|---|---|---|

5026. |

81 |

Deep learning-based APT imaging using synthetically generated training data

Malvika Viswanathan1,

Leqi Yin2,

Yashwant Kurmi1,

and Zhongliang Zu3

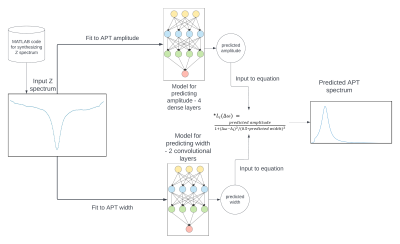

1Vanderbilt University Medical Center, Vanderbilt University Institute of Imaging Sciences, Nashville, TN, United States, 2Vanderbilt University, Nashville, TN, United States, 3Department of Radiology and Radiological Sciences, Vanderbilt University Institute of Imaging Sciences, Nashville, TN, United States Keywords: Machine Learning/Artificial Intelligence, CEST & MT Machine learning is increasingly applied to address challenges in specifically quantifying the APT effect. The models are usually trained on measured data, which, however, usually lack ground truth and are insufficient in quantity. Synthetic data generated from both measurements and simulations can provide training data that mimic tissues better than full simulations, cover all possible variations in sample parameters, and provide the ground truth. We evaluated the feasibility of using synthetic data to train models for predicting the APT effect. Results show that the machine learning-predicted APT is closer to the ground truth than the conventional multiple-pool Lorentzian fit. |

|

5027. |

82 |

Conditional VAE for Single-Voxel MRS Data Generation

Dennis van de Sande1,

Sina Amirrajab1,

Mitko Veta1,

and Marcel Breeuwer1,2

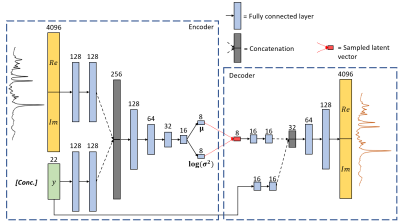

1Biomedical Engineering - Medical Image Analysis Group, Eindhoven University of Technology, Eindhoven, Netherlands, 2MR R&D - Clinical Science, Philips Healthcare, Best, Netherlands Keywords: Machine Learning/Artificial Intelligence, Spectroscopy, deep learning, generative modelling We propose a conditional VAE to synthesize single-voxel MRS data. This deep learning method can be used to enrich in-vivo datasets for other machine learning applications, without using any physics-based models. Our work is a proof-of-concept study which demonstrates the potential of a cVAE for MRS data generation by using a synthetic dataset of 8,000 spectra for training. We evaluate our model by performing a linear interpolation of the latent space, which shows that spectral properties are captured in the latent space, meaning that our model can learn spectral features from the data and can generate new samples. |

|
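The latent-space evaluation described in this synopsis can be illustrated with a minimal sketch of linear interpolation between two latent vectors. The function name `lerp_latent` and the two-dimensional latent vectors are hypothetical; the abstract does not specify the latent dimension, and the cVAE decoder that would turn each interpolated vector into a spectrum is not shown.

```python
def lerp_latent(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced from z_start to z_end (inclusive).

    Hypothetical helper: in a cVAE workflow each returned vector would be
    passed, together with the conditioning input, to the trained decoder.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    if len(z_start) != len(z_end):
        raise ValueError("latent vectors must have the same length")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # interpolation weight running from 0 to 1
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path


if __name__ == "__main__":
    for z in lerp_latent([0.0, 2.0], [1.0, 0.0], 5):
        print(z)
```

If decoded spectra change smoothly along such a path, that is usually read as evidence that the latent space encodes spectral properties rather than memorized samples.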

5028. |

83 |

Estimating uncertainty in diffusion MRI models using generative deep learning

Frank Zijlstra1,2 and

Peter T While1,2

1Department of Radiology and Nuclear Medicine, St. Olav's University Hospital, Trondheim, Norway, 2Department of Circulation and Medical Imaging, NTNU - Norwegian University of Science and Technology, Trondheim, Norway Keywords: Machine Learning/Artificial Intelligence, Diffusion/other diffusion imaging techniques, Model fitting, uncertainty, IVIM Uncertainty is an important aspect of fitting quantitative models to diffusion MRI data, which is often overlooked. This study presents a method for estimating uncertainty intrinsic to a model using a generative deep learning approach (Denoising Diffusion Probabilistic Models (DDPM)). We numerically validate that the approach provides accurate uncertainty estimates, and demonstrate its use in providing signal-specific uncertainty estimates. Furthermore, we show that DDPM can be used as a fitting method that estimates uncertainty, and show both ADC and IVIM fitting on an in vivo brain scan. This shows promise for DDPM, as both an investigative tool and fitting method. |

|
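For readers unfamiliar with the two models named in this synopsis, the sketch below writes out the commonly used mono-exponential ADC and bi-exponential IVIM signal equations. It assumes these standard textbook forms; the abstract does not state the exact parameterization the authors fitted, and the function and parameter names are illustrative only. The DDPM-based fitting itself is not reproduced here.

```python
import math


def adc_signal(b, s0, adc):
    """Mono-exponential diffusion signal: S(b) = S0 * exp(-b * ADC)."""
    return s0 * math.exp(-b * adc)


def ivim_signal(b, s0, f, d_star, d):
    """Bi-exponential IVIM signal.

    S(b) = S0 * (f * exp(-b * D*) + (1 - f) * exp(-b * D)),
    where f is the perfusion fraction, D* the pseudo-diffusion
    coefficient and D the tissue diffusion coefficient.
    """
    return s0 * (f * math.exp(-b * d_star) + (1.0 - f) * math.exp(-b * d))


if __name__ == "__main__":
    # Illustrative parameter values (b in s/mm^2, D and D* in mm^2/s).
    for b in (0, 50, 200, 800):
        print(b, ivim_signal(b, 1.0, 0.1, 0.02, 0.001))
```

With `f = 0` the IVIM expression reduces to the ADC model, which is one way to see why the two fits can be compared on the same data.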

5029. |

84 |

Synthesized 7T MRI from 3T MRI using generative adversarial network: validation in clinical brain imaging

Caohui Duan1,

Xiangbing Bian1,

Kun Cheng1,

Jinhao Lyu1,

Yongqin Xiong1,

Jianxun Qu2,

Xin Zhou3,

and Xin Lou1

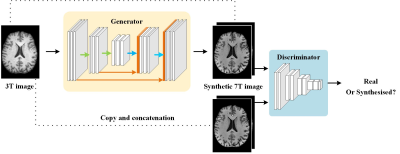

1Department of Radiology, Chinese PLA General Hospital, Beijing, China, 2MR Collaboration, Siemens Healthineers Ltd., Beijing, China, 3Key Laboratory of Magnetic Resonance in Biological Systems, State Key Laboratory of Magnetic Resonance and Atomic and Molecular Physics, National Center for Magnetic Resonance in Wuhan, Wuhan Institute of Physics and Mathematics, Innovation Academy for Precision Measurement Science and Technology, Chinese Academy of Sciences‒Wuhan National Laboratory for Optoelectronics, Wuhan, China Keywords: Machine Learning/Artificial Intelligence, Brain Ultra-high field 7T MRI provides exceptional tissue contrast and anatomical details but is often cost-prohibitive and not widely accessible in clinics. A generative adversarial network (SynGAN) was developed to generate synthetic 7T images from the widely used 3T images. The synthetic 7T images achieved improved tissue contrast and anatomical details compared to the 3T images. Meanwhile, the synthetic 7T images showed comparable diagnostic performance to the authentic 7T images for visualizing a wide range of pathology, including cerebral infarction, demyelination, and brain tumor. |

|

5030. |

85 |

Synthetising myelin water fraction from T1-weighted and T2-weighted data: an image-to-image translation approach

Matteo Mancini1,

Carolyn McNabb1,

Derek Jones1,

and Mara Cercignani1

1Cardiff University Brain Research Imaging Centre, Cardiff University, Cardiff, United Kingdom Keywords: Machine Learning/Artificial Intelligence, Brain Myelin biomarkers are a fundamental tool for both neuroscience research and clinical applications. Although several quantitative MRI methods are available for their estimation, in several cases qualitative approaches are the only viable solution. To get the best of both the quantitative and qualitative worlds, here we propose an image-to-image translation method to learn the mapping between common routine scans and a quantitative myelin metric. To achieve this goal, we trained a generative adversarial network on a relatively large dataset of healthy subjects. Both the qualitative and quantitative results show good agreement between the predicted and the ground-truth maps. |

|

5031. |

86 |

Resilience of synthetic CT DL network to varying ZTE-MRI input SNR

Sandeep Kaushik1,2,

Cristina Cozzini1,

Mikael Bylund3,

Steven Petit4,

Bjoern Menze2,

and Florian Wiesinger1

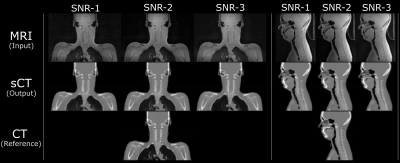

1GE Healthcare, Munich, Germany, 2Department of Quantitative Biomedicine, University of Zurich, Zurich, Switzerland, 3Umeå University, Umeå, Sweden, 4Erasmus MC Cancer Institute, Rotterdam, Netherlands Keywords: Machine Learning/Artificial Intelligence, Radiotherapy, MR-only RT, Synthetic CT, Multi-task CNN, PET/MR Many recent works have proposed methods to convert MRI into synthetic CT (sCT). While they have demonstrated a certain level of accuracy, not many have studied the robustness of those methods. In this work, we study the robustness of a multi-task deep learning (DL) model that computes sCT images from fast ZTE MR images under different levels of image noise. We evaluate its impact on radiation therapy planning. The proposed method demonstrates resilience against input noise variations. It makes way for a clinically acceptable dose calculation with a fast input image acquisition. |

|

5032. |

87 |

Deep learning-based synthesis of TSPO PET from T1-weighted MRI images only

Matteo Ferrante1,

Marianna Inglese2,

Ludovica Brusaferri3,

Marco L Loggia3,

and Nicola Toschi4,5

1Biomedicine and prevention, University of Rome Tor Vergata, Roma, Italy, 2Biomedicine and prevention, University of Rome Tor Vergata, Rome, Italy, 3Martinos Center For Biomedical Imaging, MGH and Harvard Medical School (USA), Boston, MA, United States, 4BioMedicine and prevention, University of Rome Tor Vergata, Rome, Italy, 5Department of Radiology, Athinoula A. Martinos Center for Biomedical Imaging and Harvard Medical School, Boston, MA, USA, Boston, MA, United States Keywords: Machine Learning/Artificial Intelligence, Neuroinflammation, PET, image synthesis Chronic pain-related biomarkers can be found using [11C]PBR28, a radiotracer that specifically binds the translocator protein (TSPO), whose expression is increased in activated glia and can be considered a biomarker for neuroinflammation. One of the main drawbacks of PET imaging is radiation exposure; we therefore attempted to develop a deep learning model able to synthesize PET images of the brain from T1w MRI only. Our model produces synthetic TSPO-PET images from T1w MRI which are statistically indistinguishable from the original PET images at both the voxel-wise and the ROI-wise level. |

|

5033. |

88 |

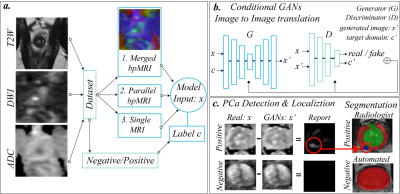

Improving Prostate Cancer Detection Using Bi-parametric MRI with Conditional Generative Adversarial Networks

Alexandros Patsanis1,

Mohammed R. S. Sunoqrot1,2,

Elise Sandsmark2,

Sverre Langørgen2,

Helena Bertilsson3,4,

Kirsten Margrete Selnæs1,2,

Hao Wang5,

Tone Frost Bathen1,2,

and Mattijs Elschot1,2

1Department of Circulation and Medical Imaging, Norwegian University of Science and Technology - NTNU, Trondheim, Norway, 2Department of Radiology and Nuclear Medicine, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway, 3Department of Clinical and Molecular Medicine, Norwegian University of Science and Technology - NTNU, Trondheim, Norway, 4Department of Urology, St. Olavs Hospital, Trondheim University Hospital, Trondheim, Norway, 5Department of Computer Science, Norwegian University of Science and Technology - NTNU, Gjøvik, Norway Keywords: Machine Learning/Artificial Intelligence, Data Analysis, Deep Learning This study investigated automated detection and localization of prostate cancer on biparametric MRI (bpMRI). Conditional Generative Adversarial Networks (GANs) were used for image-to-image translation. We used an in-house collected dataset of 811 patients with T2- and diffusion-weighted MR images for training, validation, and testing of two different bpMRI models in comparison to three single modality models (T2-weighted, ADC, high b-value diffusion). The bpMRI models outperformed T2-weighted and high b-value models, but not ADC. GANs show promise for detecting and localizing prostate cancer on MRI, but further research is needed to improve stability, performance and generalizability of the bpMRI models. |

|

5034. |

89 |

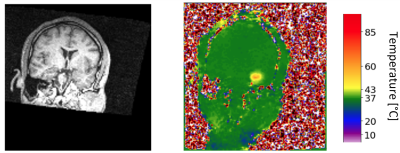

AI-based mapping from MRI to MR thermometry for MR-guided laser interstitial thermal therapy using a conditional generative adversarial network

Saba Sadatamin1,2,

Steven Robbins3,

Richard Tyc3,

Adam C. Waspe2,4,

Lueder A. Kahrs1,5,6,7,

and James M. Drake1,2,8

1Institute of Biomedical Engineering, University of Toronto, Toronto, ON, Canada, 2Posluns Centre for Image Guided Innovation & Therapeutic Intervention, Hospital of Sick Children, Toronto, ON, Canada, 3Monteris Medical, Winnipeg, MB, Canada, 4Department of Medical Imaging, University of Toronto, Toronto, ON, Canada, 5Medical Computer Vision and Robotics Lab, University of Toronto, Toronto, ON, Canada, 6Department of Mathematical & Computational Sciences, University of Toronto Mississauga, Toronto, ON, Canada, 7Department of Computer Science, University of Toronto, Toronto, ON, Canada, 8Department of Surgery, University of Toronto, Toronto, ON, Canada Keywords: Machine Learning/Artificial Intelligence, MR-Guided Interventions, Laser Interstitial Thermal Therapy MR-guided laser interstitial thermal therapy (MRgLITT) is a minimally invasive treatment for brain tumors where a surgeon inserts a laser fiber along a fixed trajectory. Repositioning the laser is invasive and predicting thermal spread close to heat sinks is difficult. To address this problem, MR thermometry prediction using artificial intelligence (AI) modeling will be developed to help the surgeon determine whether a selected laser position is ideal before the treatment starts. AI algorithms will be trained to model the nonlinear mapping from anatomical MRI planning images to MR thermometry. The AI model will help the surgeon choose a better fiber trajectory. |

|

5035. |

90 |

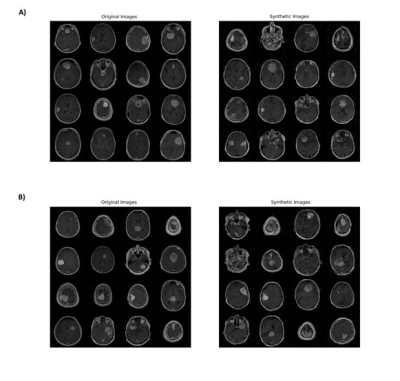

Prediction of NF2 Loss in Meningiomas Using T1-Weighted Contrast Enhanced MRI Generated by Deep Convolutional Generative Adversarial Networks

Sukru Samet Dindar1,

Buse Buz-Yalug2,

Kubra Tan3,

Ayca Ersen Danyeli4,5,

Ozge Can5,6,

Necmettin Pamir5,7,

Alp Dincer5,8,

Koray Ozduman5,7,

Yasemin P. Kahya1,

and Esin Ozturk-Isik2,5

1Electrical and Electronics Engineering, Bogazici University, Istanbul, Turkey, 2Institute of Biomedical Engineering, Bogazici University, Istanbul, Turkey, 3Health Institutes of Turkey, Istanbul, Turkey, 4Department of Medical Pathology, Acibadem University, Istanbul, Turkey, 5Brain Tumor Research Group, Acibadem University, Istanbul, Turkey, 6Department of Medical Engineering, Acibadem University, Istanbul, Turkey, 7Department of Neurosurgery, Acibadem University, Istanbul, Turkey, 8Department of Radiology, Acibadem University, Istanbul, Turkey Keywords: Machine Learning/Artificial Intelligence, Machine Learning/Artificial Intelligence Neurofibromatosis type 2 (NF2) gene mutations have been linked to tumorigenesis in meningiomas. This study aims to improve the prediction of NF2 loss in meningiomas using T1-weighted contrast-enhanced MRI augmented by a deep convolutional generative adversarial network (DCGAN). Synthetically generated MRI increased the training accuracy from 78.9% to 93% and test accuracy from 69.4% to 79.5% in this study. |

|

5036. |

91 |

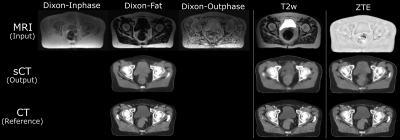

Synthetic CT generation from different MR contrast inputs and evaluation of its quantitative accuracy

Sandeep Kaushik1,2,

Cristina Cozzini1,

Jonathan J Wyatt3,

Hazel McCallum3,

Ross Maxwell4,

Bjoern Menze2,

and Florian Wiesinger1

1GE Healthcare, Munich, Germany, 2Department of Quantitative Biomedicine, University of Zurich, Zurich, Switzerland, 3Newcastle University and Northern Centre for Cancer Care, Newcastle upon Tyne, United Kingdom, 4Newcastle University, Newcastle upon Tyne, United Kingdom Keywords: Machine Learning/Artificial Intelligence, Radiotherapy, Synthetic CT, radiation therapy planning, Multi-task network, deep learning MRI to synthetic CT image conversion is a problem of interest for clinical applications such as MR-radiation therapy planning, PET/MR attenuation correction, and MR bone imaging. Many methods proposed for this purpose use different MR inputs. In this work, we compare the sCT generated from different 3D MR inputs, including Zero TE (ZTE), fast spin echo (CUBE), and fast spoiled gradient echo with Dixon-type fat-water separation (LAVA-Flex), using a multi-task deep learning (DL) model. We analyze the qualitative and quantitative accuracy of the generated sCT image from each input and highlight the aspects relevant for different clinical applications. |

|

5037. |

92 |

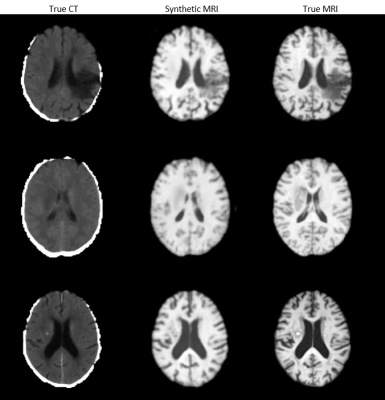

Synthesising MRIs from CTs to Improve Stroke Treatment Using Deep Learning

Grace Wen1,

Jake McNaughton1,

Ben Chong1,

Vickie Shim1,

Justin Fernandez1,

Samantha Holdsworth2,3,

and Alan Wang1,2,3

1Auckland Bioengineering Institute, University of Auckland, Auckland, New Zealand, 2Faculty of Medical and Health Sciences, University of Auckland, Auckland, New Zealand, 3Mātai Medical Research Institute, Tairāwhiti-Gisborne, New Zealand Keywords: Machine Learning/Artificial Intelligence, Stroke, Image Synthesis MRI holds an important role in diagnosing brain conditions; however, many patients do not receive an MRI before their diagnosis and onset of treatment. We propose to use deep learning to generate an MRI from a patient's CT and have implemented multiple models to compare their results. Using CT/MRI pairs from 181 stroke patients, we use multiple deep learning models to generate MRI from the CT images. The model produces high quality images and accurately translates lesions onto the target image. |

|

5038. |

93 |

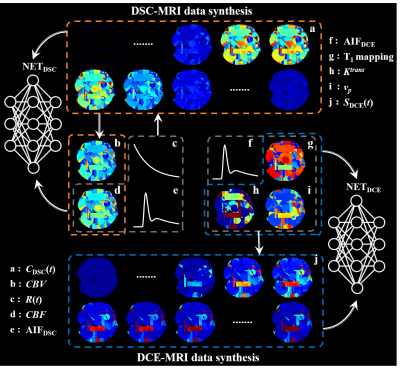

Synthetic data driven learning for full-automatic hemoperfusion parameter estimation

Lu Wang1,

Pujie Zhang1,

Zhen Xing2,

Congbo Cai1,

Zhong Chen1,

Dairong Cao2,

Zhigang Wu3,

and Shuhui Cai1

1Department of Electronic Science, Xiamen University, Xiamen, China, 2Department of Radiology, First Affiliated Hospital of Fujian Medical University, Fuzhou, China, 3MSC Clinical & Technical Solutions, Philips Healthcare, Shenzhen, China Keywords: Machine Learning/Artificial Intelligence, Brain, Hemoperfusion parameter estimation Hemoperfusion magnetic resonance (MR) imaging derived parameters characterize both endothelial hyperplasia and neovascularization that are associated with tumor aggressiveness and growth. However, hemoperfusion parameter estimation is still limited by low reliability, high bias, long processing time, and dependence on operator experience. In this study, a synthetic data driven learning method for hemoperfusion parameter estimation is proposed. Image analysis shows that the proposed method improves the reliability and precision of hemodynamic parameter estimation in a fully automatic and highly efficient way. |

|

5039. |

94 |

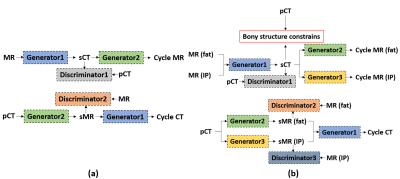

Bony structure enhanced synthetic CT generation using Dixon sequences for pelvis MR-only radiotherapy

Xiao Liang1,

Ti Bai1,

Andrew Godley1,

Chenyang Shen1,

Junjie Wu1,

Boyu Meng1,

Mu-Han Lin1,

Paul Medin1,

Yulong Yang1,

Steve Jiang1,

and Jie Deng1

1Radiation Oncology, University of Texas Southwestern Medical Center, Dallas, TX, United States Keywords: Machine Learning/Artificial Intelligence, Machine Learning/Artificial Intelligence Synthetic CT (sCT) images generated from MRI by unsupervised deep learning models tend to have large errors around bone areas. To generate better sCT image quality in bone areas, we propose to add bony structure constraints to the loss function of the unsupervised CycleGAN model, and modify the single-channel CycleGAN to a multi-channel CycleGAN that takes Dixon constructed MR images as inputs. The proposed model has the lowest mean absolute error compared with single-channel CycleGAN with different MRI images as input. We found that it can generate more accurate Hounsfield unit values and bone anatomy in sCT. |

|

5040. |

95 |

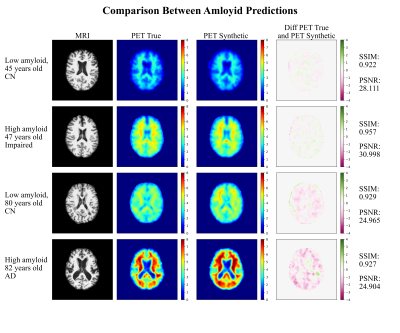

Amyloid-Beta Axial Plane PET Synthesis from Structural MRI: An Image Translation Approach for Screening Alzheimer’s Disease

Fernando Vega1,2,3,

Abdoljalil Addeh1,2,3,

Ahmed Elmenshawi2,

and M. Ethan MacDonald1,2,3,4

1Biomedical Engineering, Schulich School of Engineering, University of Calgary, Calgary, AB, Canada, 2Electrical & Software Engineering, Schulich School of Engineering, University of Calgary, Calgary, AB, Canada, 3Hotchkiss Brain Institute, Cumming School of Medicine, University of Calgary, Calgary, AB, Canada, 4Department of Radiology, Cumming School of Medicine, University of Calgary, Calgary, AB, Canada Keywords: Machine Learning/Artificial Intelligence, Alzheimer's Disease, MRI, PET, Image Translation In this work, an image translation model is implemented to produce synthetic amyloid-beta PET images from structural MRI that are quantitatively accurate. Image pairs of amyloid-beta PET and structural MRI were used to train the model. We found that the synthetic PET images could be produced with a high degree of similarity to the ground truth in terms of shape and contrast, with high overall SSIM and PSNR. This work demonstrates that structural-to-quantitative image translation is feasible and can enable access to amyloid-beta information from MRI only. |

|
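As a reminder of what one of the similarity metrics in this synopsis measures, here is a minimal sketch of PSNR computed from the mean squared error between a synthetic and a reference image. It assumes the usual definition, PSNR = 10·log10(MAX² / MSE), applied to flattened intensity lists; the function name `psnr` and the `max_value` parameter are illustrative, and the abstract does not say how the authors normalized intensities.

```python
import math


def psnr(predicted, reference, max_value):
    """Peak signal-to-noise ratio in dB between two equally sized intensity lists.

    max_value is the maximum possible intensity (an assumption the caller
    must supply, e.g. 1.0 for images scaled to [0, 1]).
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - r) ** 2 for p, r in zip(predicted, reference)) / len(predicted)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    print(psnr([0.0, 0.0], [0.0, 1.0], 1.0))
```

Higher values indicate a smaller pixel-wise error; unlike SSIM, PSNR does not account for local structure.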

5041. |

96 |

3D Lesion Generation Model considering Anatomic Localization to Improve Object Detection in Limited Lacune Data

Daniel Kim1,

Jae-Hun Lee1,

Mohammed A. Al-masni2,

Jun-ho Kim1,

Yoonseok Choi1,

Eun-Gyu Ha1,

SunYoung Jung3,

Young Noh4,

and Dong-Hyun Kim1

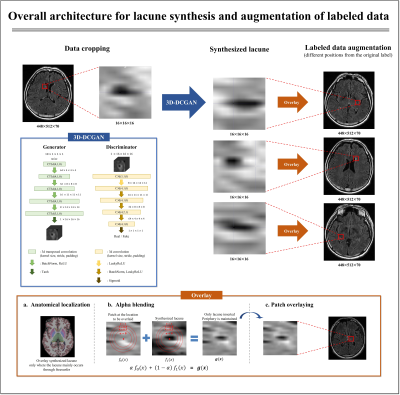

1Department of Electrical and Electronic Engineering, Yonsei Univ., Seoul, Korea, Republic of, 2Department of Artificial Intelligence, Sejong Univ., Seoul, Korea, Republic of, 3Department of Biomedical Engineering, Yonsei Univ., Wonju, Korea, Republic of, 4Department of Neurology, Gachon Univ., Incheon, Korea, Republic of Keywords: Machine Learning/Artificial Intelligence, Data Processing, Augmentation Recently, research on the detection of cerebral small vessel disease (CSVD) has been mainly implemented in two stages (1st: candidate detection, 2nd: false-positive reduction). Previous studies presented the difficulty of collecting labeled data as a limitation. Here, we synthesized lesions with a 3D-DCGAN and inserted them at different locations on the MR image, considering anatomical localization and using alpha blending, to augment the labeled data. Through this, the detection architecture was simplified to a single stage, and the precision and recall values were improved by an average of 0.2. |

|
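The alpha blending step mentioned in this synopsis can be sketched as a voxel-wise weighted sum of a synthetic lesion patch and the underlying image. This is a generic illustration of alpha blending on flattened voxel lists, not the authors' implementation; the function name `alpha_blend` and the per-voxel `alpha` mask are assumptions.

```python
def alpha_blend(background, lesion, alpha):
    """Blend a lesion patch into a background patch voxel by voxel.

    out = alpha * lesion + (1 - alpha) * background, with alpha in [0, 1].
    All three inputs are flattened patches of the same length.
    """
    if not (len(background) == len(lesion) == len(alpha)):
        raise ValueError("background, lesion and alpha must have the same length")
    if any(a < 0.0 or a > 1.0 for a in alpha):
        raise ValueError("alpha values must lie in [0, 1]")
    return [a * l + (1.0 - a) * b for b, l, a in zip(background, lesion, alpha)]


if __name__ == "__main__":
    # A soft alpha ramp avoids a hard edge at the patch boundary.
    print(alpha_blend([100.0, 100.0, 100.0], [20.0, 20.0, 20.0], [0.0, 0.5, 1.0]))
```

An alpha mask that falls off toward the patch border lets the inserted lesion merge with surrounding tissue instead of appearing as a pasted block.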

5042. |

97 |

Use of an Automated Approach for Generating vADC for a Large Patient Population Studied with 129Xe MRI

Ramtin Babaeipour1,

Maria Mihele2,

Keeirah Raguram2,

Matthew Fox2,3,

and Alexei Ouriadov1,2,3

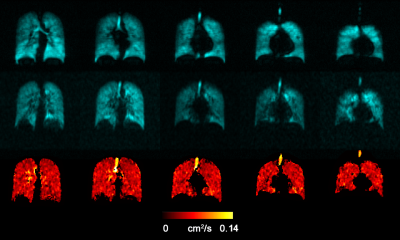

1School of Biomedical Engineering, The University of Western Ontario, London, ON, Canada, 2Department of Physics and Astronomy, The University of Western Ontario, London, ON, Canada, 3Lawson Health Research Institute, London, ON, Canada Keywords: Machine Learning/Artificial Intelligence, Segmentation, Deep learning, Transfer learning Hyperpolarized 129Xe lung MRI is an efficient technique used to investigate and assess pulmonary diseases. However, longitudinal observation of emphysema progression using hyperpolarized gas MRI-based ADC can be problematic, as disease progression can lead to increasing unventilated lung areas, which likely excludes the largest ADC estimates. One solution to this problem is to combine static-ventilation and ADC measurements following the idea of 3He MRI ventilatory ADC (vADC). We have demonstrated this method adapted for 129Xe MRI to help overcome the above-mentioned shortcomings and provide an accurate assessment of emphysema progression. |

|

The International Society for Magnetic Resonance in Medicine is accredited by the Accreditation Council for Continuing Medical Education to provide continuing medical education for physicians.