2025 ISMRM Review Instructions

for Registered Abstracts

Online Review & Scoring Deadline:

Please read all instructions carefully before you begin.

Thank you for agreeing to review abstracts for the 2025 ISMRM Annual Meeting. Your role as a reviewer is integral to the success of the scientific program, and your efforts are greatly appreciated. Your reviews will assist the Annual Meeting Program Committee (AMPC) as they construct the scientific program for the Annual Meeting. Please read the following instructions and check the acknowledgement before reviewing your assigned abstracts. Finally, please note that the content and scoring of all abstracts are strictly confidential.

You have agreed to review REGISTERED abstracts this year. Registered abstracts are abstracts whose research methods are submitted and assessed by reviewers before the results are known. The directions to authors submitting Registered abstracts are here: https://www.ismrm.org/25m/call/registered-abstract/. You may find it useful to read these before completing your review. Registered abstracts must meet two minimum requirements for acceptance:

- The abstract must include an explicit, testable hypothesis.

- The abstract's plan to gather and analyze data in time for the annual meeting must be feasible.

This year the scoring of these abstracts needs to fit within the same structure as the traditional ISMRM abstracts. Your comments will be very important for the AMPC as they evaluate the Registered abstracts. Please use the comments to add context to your scores.

Clinical Impact, Technical Impact, and Quality: We will score Registered abstracts in 3 areas using a 10-point scale. We realize that some abstracts may be stronger or weaker in different areas, and we would like you to reflect that in your scoring. Details on how these categories pertain to Registered abstracts are below. Note, these categories have changed from the original announcement. This was necessary to better align with traditional ISMRM abstracts. Transparency, the use of reproducible research and open science practices, should be addressed in the Quality score.

Comments and Normalization: We ask that you provide brief comments whenever you can. Taking 30 seconds to write a comment is very helpful to the program committee. We will normalize your scores so that all reviewers’ scores impact the program.

Group Sizes and Match: As we did last year, we have more categories and review groups in an effort to better match reviewer preferences with abstract primary and secondary categories. Because Registered abstracts are submitted across a broad set of categories, you will receive abstracts from different category groups. Please try to score consistently across topic areas, and avoid scoring one category higher than another.

Journal Recommendation: In an attempt to streamline submission, review, and publication of strong abstracts to our journals, we encourage reviewers to recommend compelling abstracts to be submitted to the society’s journals. For Registered abstracts, please skip this section.

TECHNICAL NOTE

We have found that Google Chrome on some Apple computers sometimes cannot render certain LaTeX elements. For example, Mathcal font symbols cannot be rendered, as illustrated here.

To test your browser, click this link and wait for the font to load: https://proofwiki.org/wiki/Symbols:%5Cmathcal

We have found this to be an issue specifically with Chrome running on Apple hardware and software, including M1 and M2 Macs and macOS Ventura and later. Chrome on other types of computers appears to work normally. Please be aware of this issue during the abstract review process, as many equations may not display correctly on some reviewers’ screens.

If your browser cannot display the Mathcal font on this website, you will only see boxes as shown here.

FULL REVIEW INSTRUCTIONS

A. Getting Started

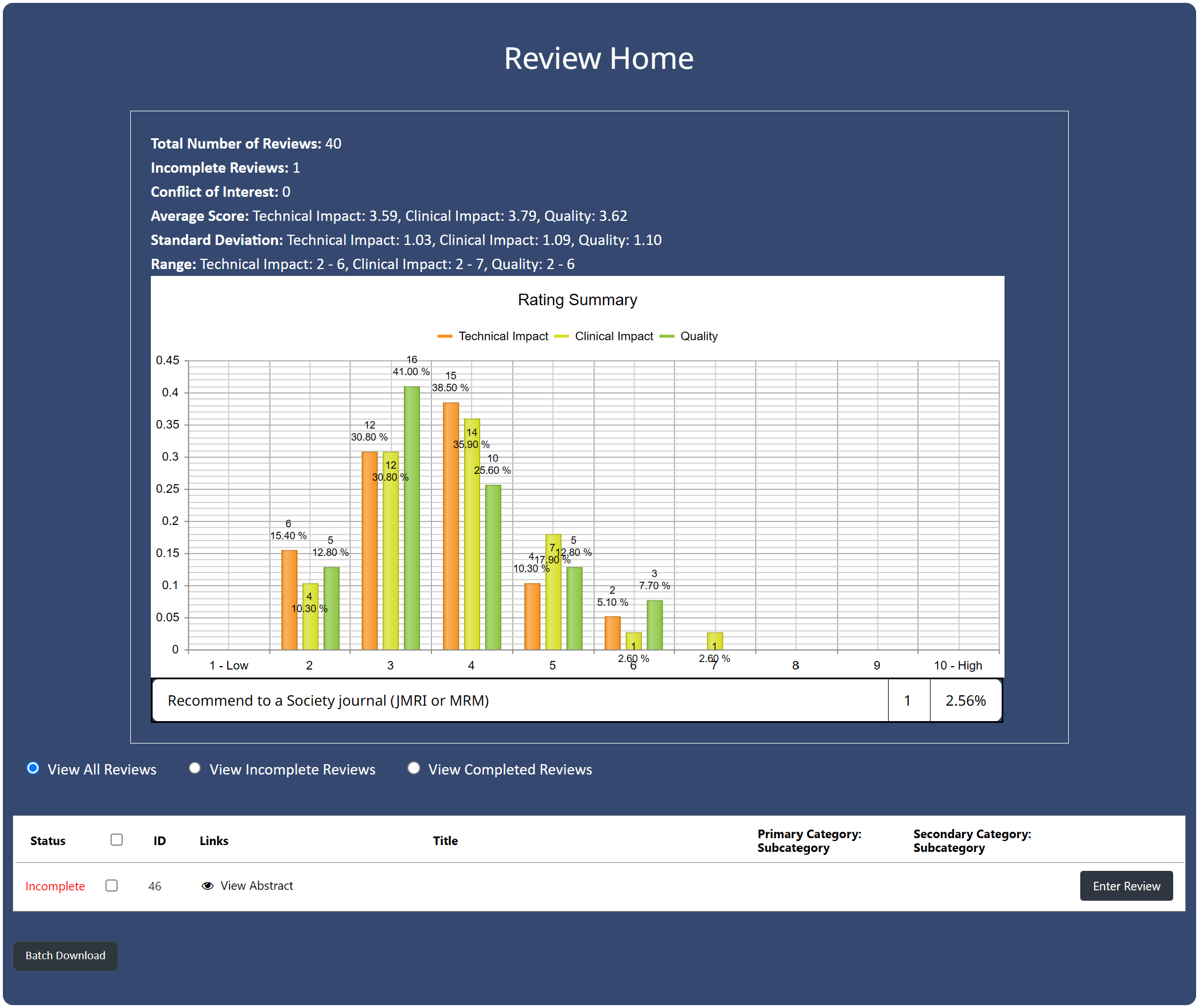

After you log in, you will see the Rating Summary graph followed by a list of abstracts assigned to you. The Rating Summary graph provides a summary of your abstract scores, including your average score, standard deviation, and range of scores. This tool is provided to help you achieve a diverse range of scores. This screen will remain visible throughout the review process.
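As a rough illustration of the statistics the Rating Summary reports, the sketch below computes an average, standard deviation, and range for a set of scores. The function name `rating_summary` and the example scores are hypothetical, and the review system may compute these values differently (for example, it may use a population rather than a sample standard deviation).

```python
from statistics import mean, stdev


def rating_summary(scores):
    """Return the mean, sample standard deviation, and range of a reviewer's scores.

    Hypothetical helper for illustration only; not the review system's code.
    """
    return {
        "mean": mean(scores),
        # A standard deviation needs at least two scores.
        "sd": stdev(scores) if len(scores) > 1 else 0.0,
        "min": min(scores),
        "max": max(scores),
    }


# Made-up scores on the 1-10 scale.
summary = rating_summary([3, 5, 6, 8, 8])
print(summary)
```

A small standard deviation or a narrow range suggests the scores are clustered, which is the situation the Rating Summary is meant to help you notice.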

Below the Rating Summary graph, you will see the list of abstracts that are assigned to you. You may start reviewing the first abstract (press “Enter Review”) or you may batch download the entire stack of your assigned abstracts if you wish to read them offline. If you would like to batch download all of your abstracts, please check the box to the right of “Status.” This will select all of your abstracts.

If you choose to batch download, a window with all of the abstracts listed sequentially will pop up. Please be sure that the download has completed as this may take some time. You may save these as a PDF file, print them, or save as an HTML file. With the HTML format, you can zoom in and out of the documents.

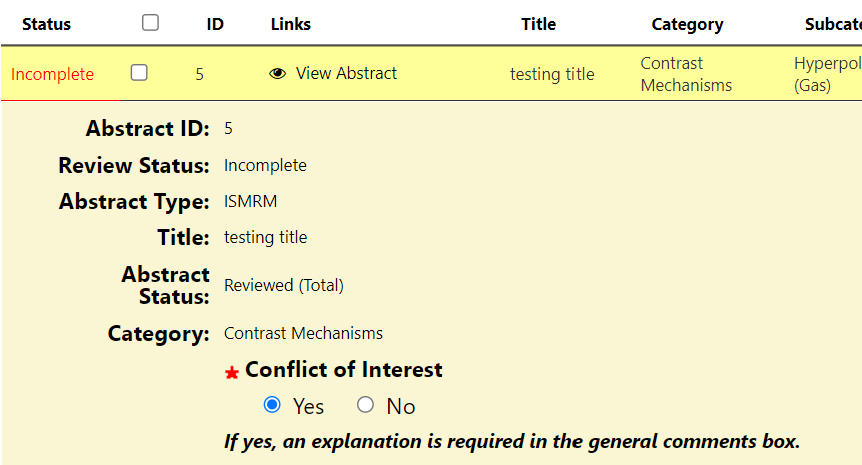

To start the review of an individual abstract, click on the button on the right indicated by “Enter Review.” This will open the individual review window below. Use the View Abstract button to bring up a pop-up window with the individual abstract. Clicking on the figure thumbnails will allow you to view the individual figures in detail.

B. Review and Scoring Guidelines

Please read the following carefully. The following four sections require your input:

- Conflict of Interest (required): Please indicate if you have a conflict. A conflict exists when you are a co-author, the work is from your institution/employer or a close collaborator, you hold patents directly related to the research, or there is any other reason generally considered a conflict. Blinded review can make it difficult to identify all conflicts based on authorship, but we ask you to do your best. To indicate a conflict, click “yes” and then the Submit button. Your review for this abstract is then complete.

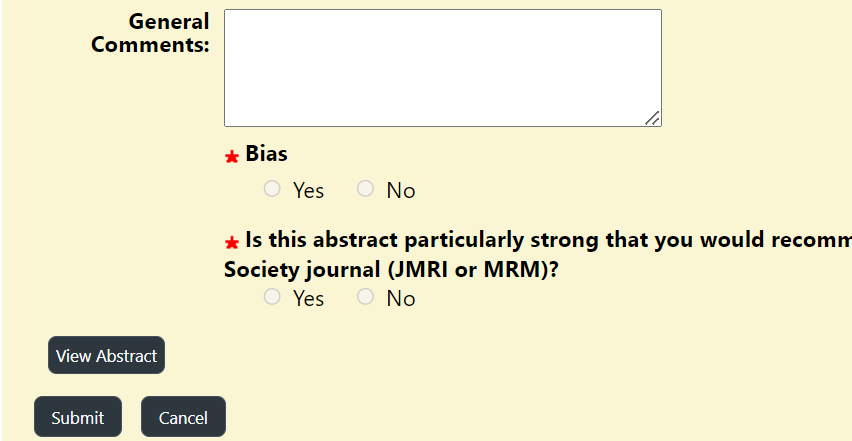

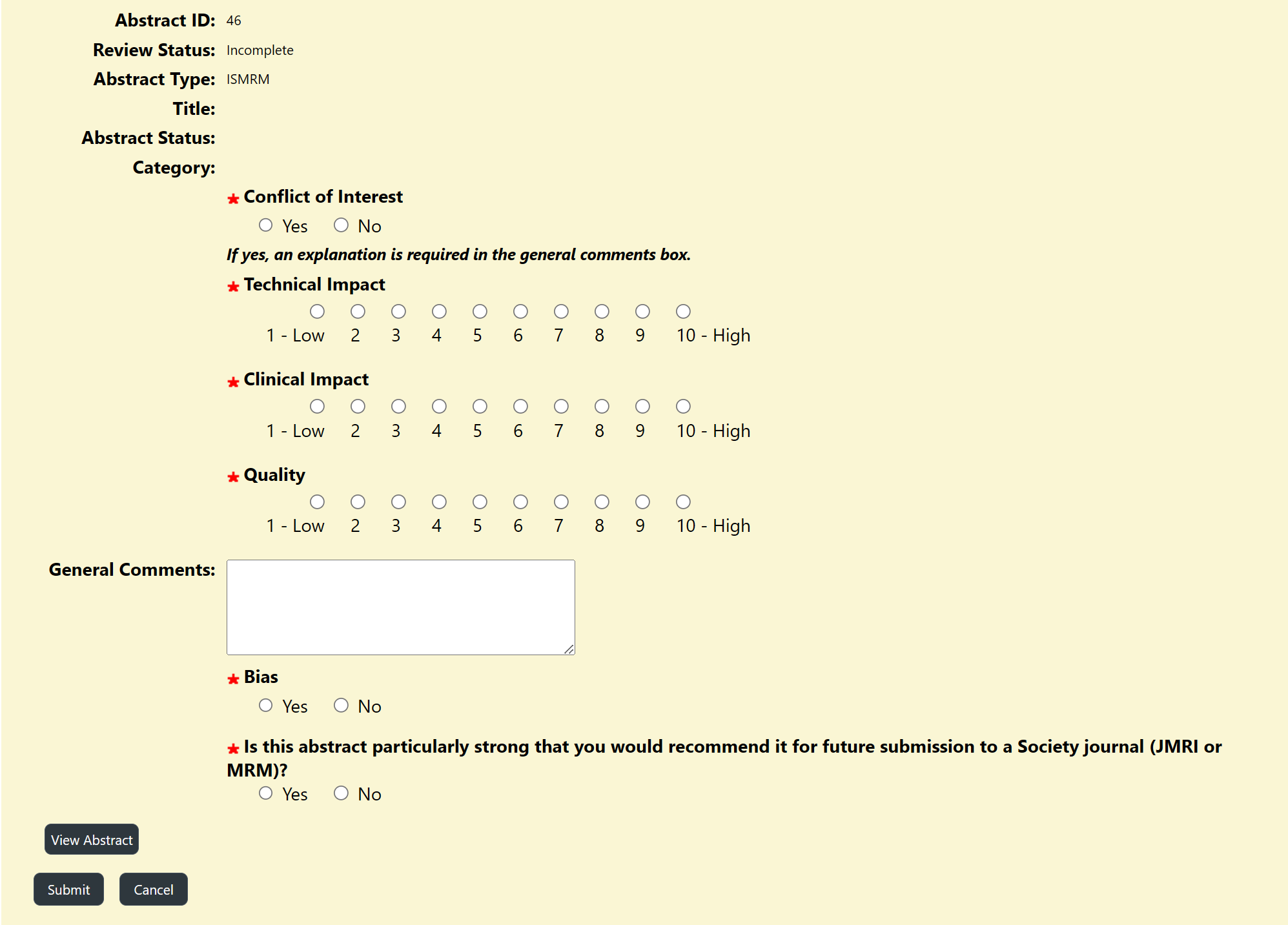

- Scores (required): The purpose of the scores is to assist the AMPC in making the program sessions, which is a complicated task that reflects the strong interdisciplinary nature of the society. We ask you to score in 3 areas for each abstract.

For Registered abstracts, impact does not come from impressive results (as the results are not yet known); rather, it is evaluated on the importance of answering the research question. For example, if the answer to “is method A more motion robust than method B?” would allow for clinical translation of the more motion robust method, that could warrant a high impact score. Conversely, an abstract fine-tuning a parameter for a method that is far from clinical translation will have low clinical impact. Impact can also come from the ability to definitively answer a question. For example, a large, well-powered study on the effectiveness of a method would result in a high impact score.

- Technical Impact – is the degree to which the work will influence the field, including how likely the work is to change scientific discovery or further technique development and capability.

- Clinical Impact – is the overall contribution to clinical work, and how it may change clinical practice.

- Quality – reflects the quality and transparency of the research (including study design, appropriateness of the research goal/question, rigor of the data analysis, as well as use of good reproducible research and open science practices) AND the clarity with which the work is presented. Note that work may be high Quality with medium scores for Technical Impact and Clinical Impact for different reasons, or the reverse.

These individual scores are helpful to the program committee in constructing the scientific sessions.

Please use the entire 1-10 scoring range as much as possible. Note that reviewers are intentionally blinded to author preference for poster vs. oral presentation. Please use the above scoring system for all abstracts assigned to you. We will normalize all reviewers’ scores to the same mean and standard deviation, in order for all reviewers to have influence.
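To illustrate why normalization lets every reviewer influence the program, here is a minimal sketch of rescaling each reviewer's scores to a shared mean and standard deviation. The exact procedure the program committee uses is not specified in these instructions; the function name `normalize_scores`, the target mean of 5.5, and the target standard deviation of 2.0 are assumptions chosen only for illustration.

```python
from statistics import mean, pstdev


def normalize_scores(scores, target_mean=5.5, target_sd=2.0):
    """Rescale one reviewer's scores to a shared mean and standard deviation.

    Hypothetical sketch: target_mean and target_sd are assumed values,
    not figures taken from the review instructions.
    """
    mu = mean(scores)
    sigma = pstdev(scores)
    if sigma == 0:
        # Identical scores carry no ranking information, so every
        # abstract lands on the shared mean.
        return [target_mean for _ in scores]
    return [target_mean + target_sd * (s - mu) / sigma for s in scores]


# A lenient and a strict reviewer who rank four abstracts in the same order.
lenient_norm = normalize_scores([7, 8, 9, 10])
strict_norm = normalize_scores([1, 2, 3, 4])
print(lenient_norm)
print(strict_norm)
```

Under these assumptions the two reviewers end up with the same normalized scores, because only the relative spacing of each reviewer's scores survives the rescaling. This is also why a reviewer who gives nearly identical scores to every abstract conveys little information, and why using the full 1-10 range is helpful.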

Comments: While comments are always appreciated, there are some important cases where we ask you to comment:

- If you believe the abstract is a duplicate of another abstract or a published paper, please score the abstract, but identify the duplicate in the comments.

- Registered abstracts must include an explicit, testable hypothesis. Comments on the hypothesis (or lack thereof) are appreciated.

- Transparency is a key requirement for reproducibility. In this context, transparency means that the experiments and data analyses are well-defined with all relevant conditions and variables clearly listed. Transparency reduces the researcher degrees of freedom after data has been collected. Transparency can also mean that reasons for design choices are justified (e.g., based on pilot data or previous literature).

- The Registered abstract must show feasibility to gather and analyze data in time for the annual meeting.

C. General Principles of Reviewing Abstracts

Authors invest an enormous amount of time, effort, and resources to generate their research. As reviewers, you have a responsibility to take this process seriously, and you are serving as representatives of the ISMRM. By agreeing to review abstracts, you are agreeing to abide by the following principles:

- Confidentiality: The content of every abstract is strictly confidential until it is published in the Proceedings of the Annual Meeting. Strict adherence to confidentiality has many implications, including grant funding, intellectual property, and publications. Do not share any aspect of your assigned abstracts or your scoring with anyone.

- Conflict of Interest: Please do not score any abstracts with which you have a conflict of interest (see above).

- Reviewer Conflicts: Reviewers are required to disclose all relevant financial relationships involving any commercial interest.

- Reviewer Bias: Please remember to evaluate the science only. We do not reveal authors, institutions, or acknowledgements as a way to help you, the reviewer, focus on the science and avoid unconscious bias. However, for some submissions, you may recognize the authors or institution based on your familiarity with specialized research equipment or continuation of prior work. We ask that you set that aside and evaluate the science alone.

- Standards Involving Recommendations for Clinical Care:

  - All recommendations involving clinical medicine must be based on evidence that is accepted within the profession of medicine as adequate justification for their indications and contraindications in the care of patients.

  - Basic science / engineering research referred to, reported or used in support or justification of any patient care recommendation must conform to generally accepted standards of experimental design, data collection and analysis.

  - Please note any deviations from these principles in the Comments section.

- Quality of Submitted Abstracts: Accepted abstracts will be published and referenced. For this reason, abstracts should be of high quality. Poorly prepared abstracts with spelling errors, confusing formatting, and poor grammar should be scored accordingly.

- Duplication of Abstracts: Clear duplication or strongly overlapping abstracts may be grounds for rejection of one or both abstracts. Please score duplicated abstracts as if they were independent, but please note any duplication (provide abstract numbers) in the comment section. Also, abstracts that are essentially the same as previously published journal articles should be identified. Please note this kind of duplication in the comment section as well, including a brief citation if possible.

- Abstract Categories: If you think an abstract is in the wrong primary category, please use the Comments field to suggest an alternate category if you can. Do not score an abstract poorly because the primary category is a poor fit – in some cases there is no good category match.

- Abstracts Outside of Your Field of Expertise: It is possible (and understandable) that some of your assigned abstracts are beyond your ability to provide an informed evaluation. You must use your judgment here – if possible please review the abstract. However, if you feel unqualified to assess an abstract fairly, please check “yes” under Conflict of Interest and make a note in the comment section that the abstract is beyond your expertise.

- Blinded Review: Abstracts are blinded with respect to authors and institutions. If authors inadvertently identify themselves by name or affiliation, you may choose to review or not review, whichever you consider most appropriate. If inadvertent disclosure occurs, please note this in the Comments section and inform the ISMRM Office as quickly as possible, so this disclosure can be corrected. (If authors cite their own work, you need not comment on that.)

Staff Assistance

Rhiannon Pinson, Director of Education, rhiannon@ismrm.org

Anne-Marie Kahrovic, Executive Director, anne-marie@ismrm.org

Sally Moran, Director of IT & Web, sally@ismrm.org

Thank you for your efforts to help make the 2025 ISMRM Annual Meeting a success!