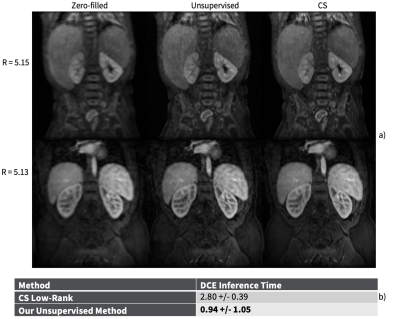

-

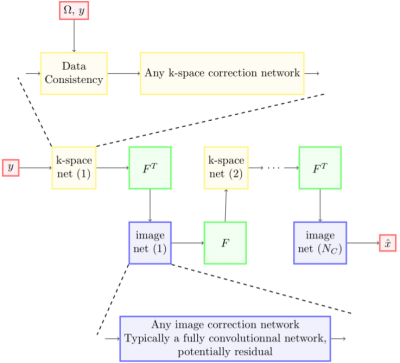

Using Untrained Convolutional Neural Networks to Accelerate MRI in 2D and 3D

Dave Van Veen1,2, Arjun Desai1, Reinhard Heckel3,4, and Akshay S. Chaudhari1

1Stanford University, Stanford, CA, United States, 2University of Texas at Austin, Austin, TX, United States, 3Rice University, Houston, TX, United States, 4Technical University of Munich, Munich, Germany

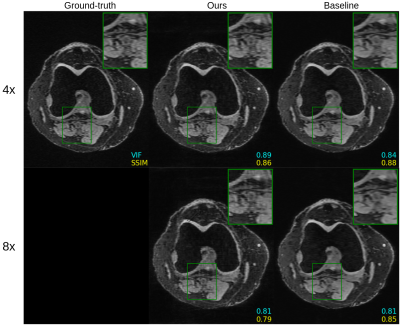

We find that untrained convolutional neural networks are comparable to supervised methods for accelerating MRI scans in both 2D and 3D. Further, we demonstrate a method for regularizing the network feature maps using undersampled k-space measurements.

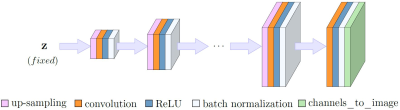

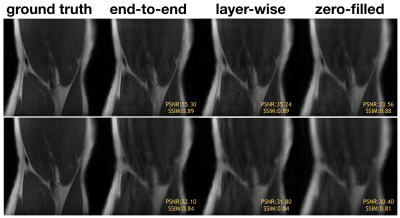

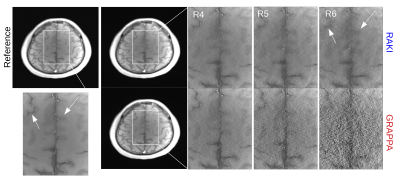

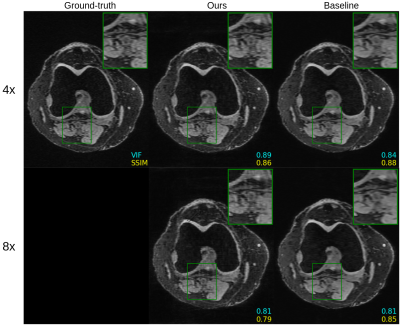

Figure 4. Comparison of untrained method against fully supervised baseline on a 3D qDESS axial slice. The k-space was downsampled using a 2D Poisson disc at acceleration factors of 4x and 8x for the top and bottom rows, respectively. The image quality of the reconstruction with the same undersampling factors is higher for 3D qDESS than 2D fastMRI data, likely because the undersampling aliasing is spread across two dimensions ($$$k_y$$$ and $$$k_z$$$).

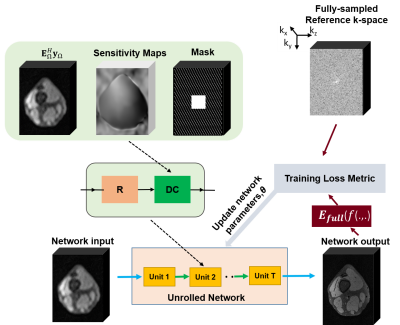

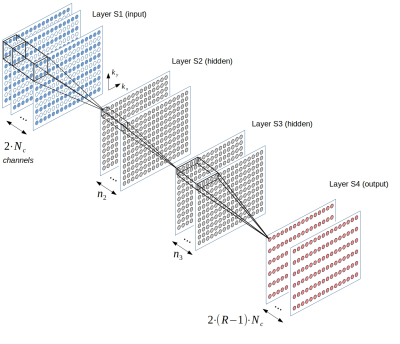

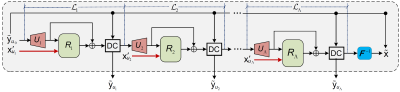

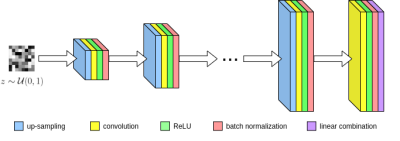

Figure 1. Network architecture for our untrained method.

-

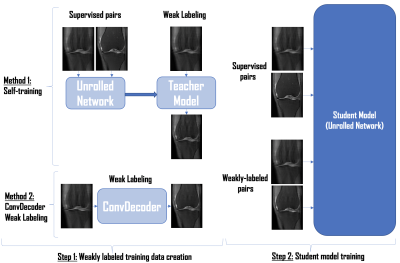

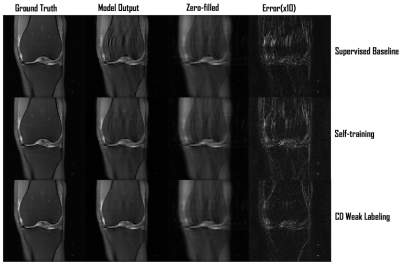

Zero-shot Learning for Unsupervised Reconstruction of Accelerated MRI Acquisitions

Yilmaz Korkmaz1,2, Salman Ul Hassan Dar1,2, Mahmut Yurt1,2, and Tolga Çukur1,2,3

1Department of Electrical and Electronics Engineering, Bilkent University, Ankara, Turkey, 2National Magnetic Resonance Research Center, Bilkent University, Ankara, Turkey, 3Neuroscience Program, Aysel Sabuncu Brain Research Center, Bilkent University, Ankara, Turkey

We propose a zero-shot learning approach for unsupervised reconstruction of accelerated MRI without any prior information about the reconstruction task. Our approach efficiently recovers undersampled acquisitions, irrespective of the contrast, acceleration rate or undersampling pattern.

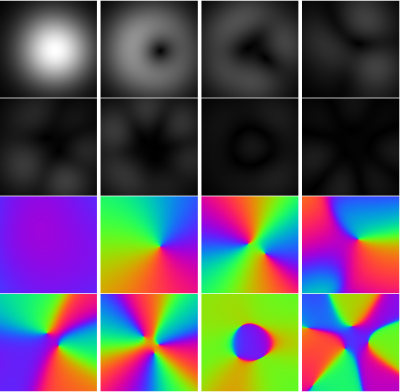

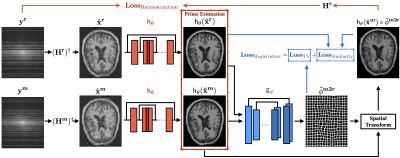

Figure 1: (a) Pretraining of the style-generative model, which comprises a fully connected mapper that generates intermediate latent vectors w, a synthesizer that generates images, a discriminator for adversarial training, and noise n. w and n are defined for each synthesizer block separately, where the blocks cover resolutions from 4x4 to 256x256 pixels. (b) Testing phase of ZSL-Net. w* and n* correspond to the optimized latent vector and noise components for the synthesizer. Optimization is performed to minimize the partial k-space loss between masked Fourier coefficients of reconstructed and undersampled images.
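The partial k-space loss described in this caption can be illustrated with a minimal NumPy sketch. The function name `partial_kspace_loss`, the L1-type penalty, and the random sampling mask are assumptions made for illustration; the abstract does not state which norm or mask ZSL-Net uses.

```python
import numpy as np

def partial_kspace_loss(recon_image, measured_kspace, mask):
    """Mean absolute difference between Fourier coefficients at sampled locations.

    The L1-type penalty is an assumption; the caption only says the loss
    compares masked Fourier coefficients.
    """
    recon_kspace = np.fft.fft2(recon_image, norm="ortho")
    diff = mask * (recon_kspace - measured_kspace)  # unsampled locations are ignored
    return np.abs(diff).sum() / max(int(mask.sum()), 1)

rng = np.random.default_rng(0)
reference = rng.standard_normal((8, 8))   # stand-in for the unknown true image
mask = rng.random((8, 8)) < 0.3           # hypothetical random sampling mask
mask[0, 0] = True                         # always keep the DC coefficient
measured = mask * np.fft.fft2(reference, norm="ortho")

# An image that agrees with the measurements has (numerically) zero loss,
# while an all-zero image does not.
loss_at_reference = partial_kspace_loss(reference, measured, mask)
loss_at_zero = partial_kspace_loss(np.zeros((8, 8)), measured, mask)
```

Because only sampled coefficients enter the loss, many images reach zero loss; in the abstract the pretrained synthesizer is what constrains the solution among them.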

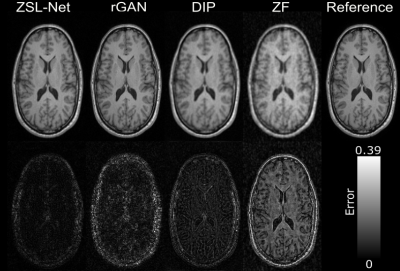

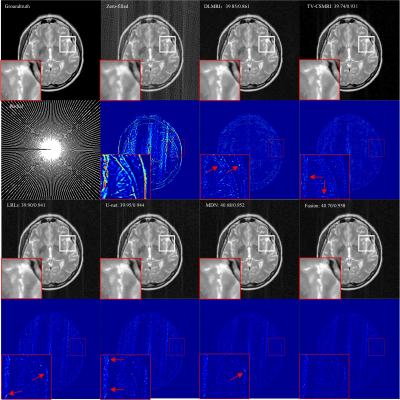

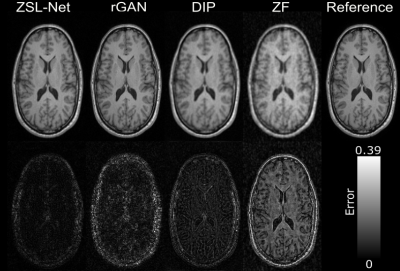

Figure 2: Demonstrations of the proposed and competing methods on IXI for T1-contrast image reconstruction when the acceleration rate R is 8. Reconstructed images are shown along with the error maps, which are absolute differences between reconstructed and reference images. The error map for ZSL-Net appears darker than those of the competing methods, and most of the error is concentrated on the skull.

-

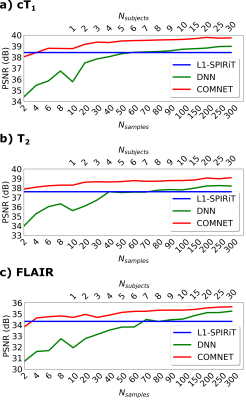

Multi-Mask Self-Supervised Deep Learning for Highly Accelerated Physics-Guided MRI Reconstruction

Burhaneddin Yaman1,2, Seyed Amir Hossein Hosseini1,2, Steen Moeller2, Jutta Ellermann2, Kâmil Uğurbil2, and Mehmet Akçakaya1,2

1University of Minnesota, Minneapolis, MN, United States, 2Center for Magnetic Resonance Research, Minneapolis, MN, United States

The proposed multi-mask self-supervised physics-guided learning technique significantly improves upon its previously proposed single-mask counterpart for highly accelerated MRI reconstruction.

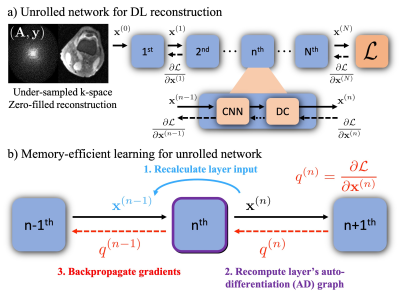

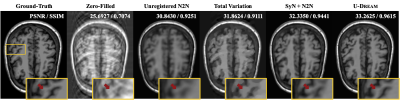

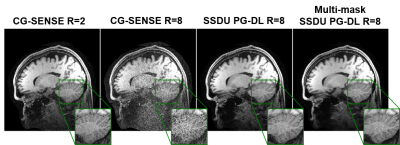

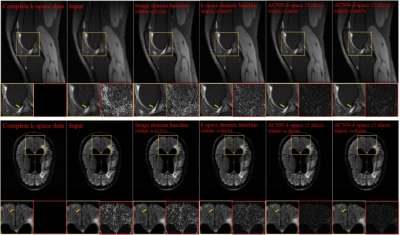

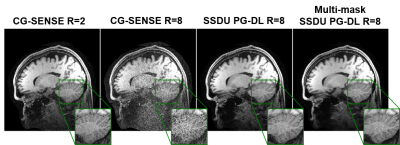

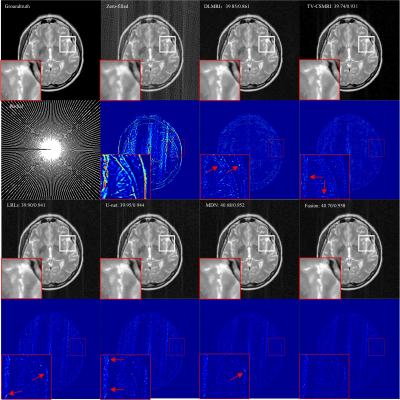

Figure 3. A representative test brain MRI slice showing reconstruction results using CG-SENSE, SSDU PG-DL and proposed multi-mask SSDU PG-DL at R=8, as well as CG-SENSE at acquisition acceleration R=2. CG-SENSE suffers from major noise amplification at R=8, whereas SSDU PG-DL at R=8 achieves similar reconstruction quality to CG-SENSE at R=2. The proposed multi-mask SSDU PG-DL further improves the reconstruction quality compared to SSDU PG-DL.

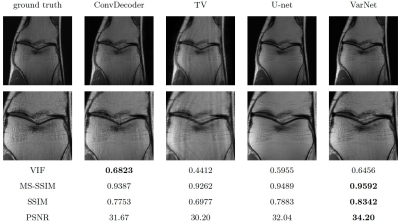

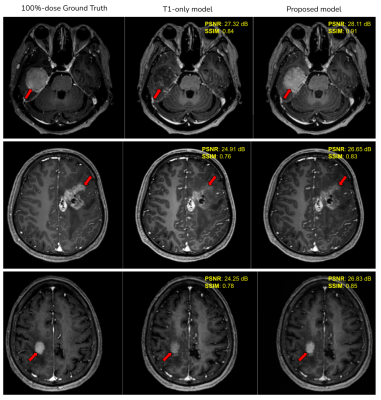

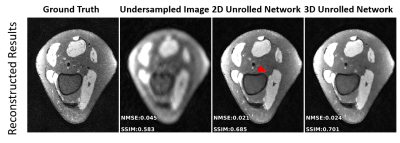

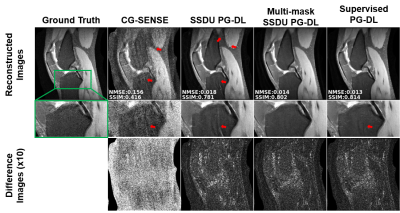

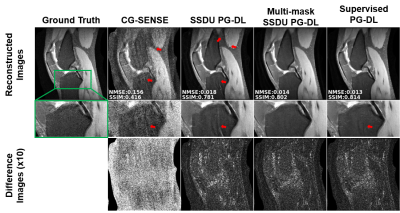

Figure 2. a) Reconstruction results on a representative test slice at R = 8 using CG-SENSE, supervised PG-DL, SSDU PG-DL and proposed multi-mask SSDU PG-DL. CG-SENSE suffers from major noise amplification and artifacts. SSDU PG-DL also shows residual artifacts (red arrows) at this high acceleration rate. The proposed multi-mask SSDU PG-DL further suppresses these artifacts and achieves artifact-free reconstruction, removing artifacts that are still visible in supervised PG-DL.

-

PIC-GAN: A Parallel Imaging Coupled Generative Adversarial Network for Accelerated Multi-Channel MRI Reconstruction

Jun Lyu1, Chengyan Wang2, and Guang Yang3,4

1School of Computer and Control Engineering, Yantai University, Yantai, China, 2Human Phenome Institute, Fudan University, Shanghai, China, 3Cardiovascular Research Centre, Royal Brompton Hospital, London, United Kingdom, 4National Heart and Lung Institute, Imperial College London, London, United Kingdom

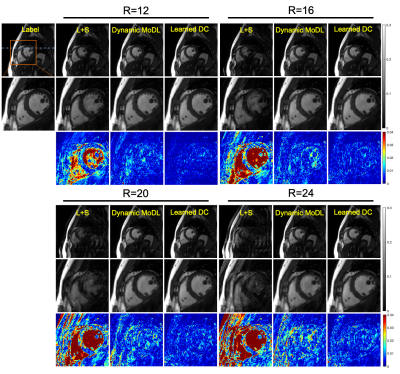

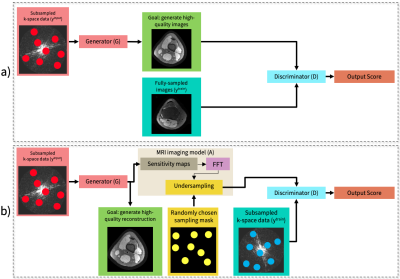

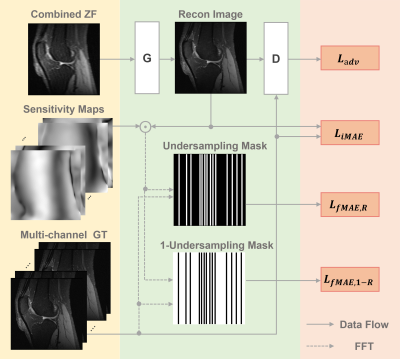

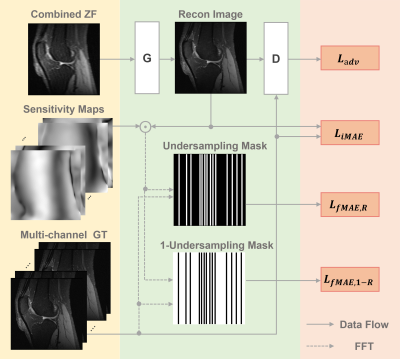

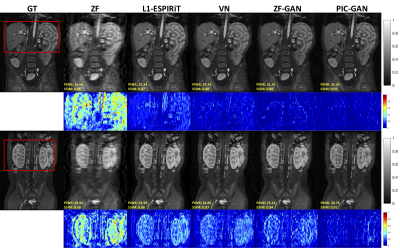

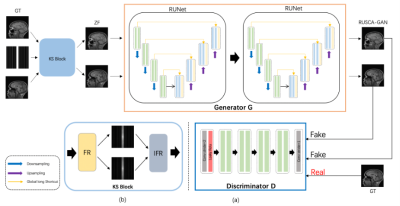

We demonstrate the feasibility of combining parallel imaging (PI) with a generative adversarial network (GAN) for accelerated multi-channel MRI reconstruction. In our proposed PIC-GAN framework, we used a progressive refinement method in both frequency and image domains.

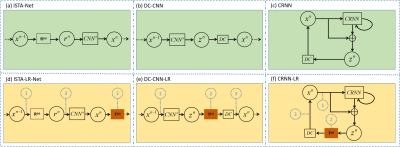

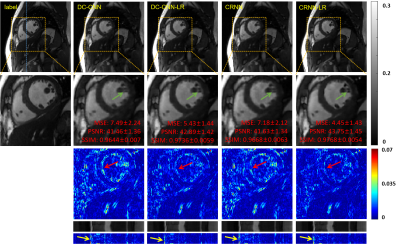

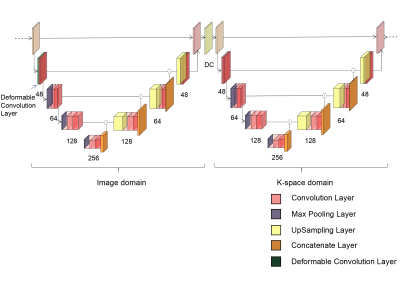

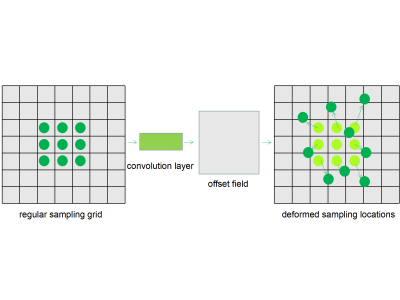

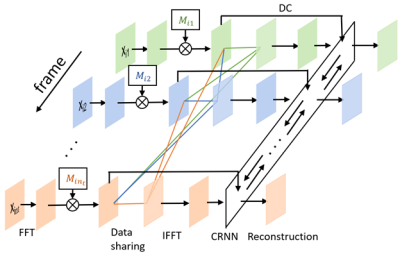

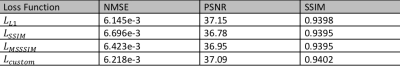

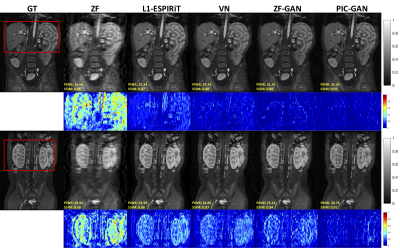

Figure 1. Schema of the proposed parallel imaging and generative adversarial network (PIC-GAN) reconstruction network.

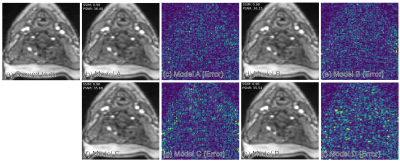

Figure 2. Representative reconstructed abdominal images with acceleration factor AF = 6. The 1st and 2nd rows depict reconstruction results for regular Cartesian sampling; the 3rd and 4th rows depict the same for variable density random sampling. The PIC-GAN reconstruction shows reduced artifacts compared to other methods.

-

Adaptive convolutional neural networks for accelerating magnetic resonance imaging via k-space data interpolation

Tianming Du1, Yuemeng Li1, Honggang Zhang2, Stephen Pickup1, Rong Zhou1, Hee Kwon Song1, and Yong Fan1

1Radiology, University of Pennsylvania, Philadelphia, PA, United States, 2Beijing University of Posts and Telecommunications, Beijing, China

A deep learning model with adaptive convolutional neural networks is developed for k-space data interpolation. Ablation and experimental results show that our method achieves better image reconstruction than existing state-of-the-art techniques.

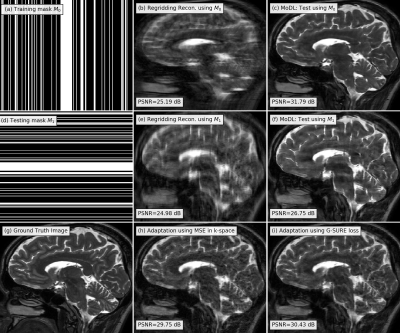

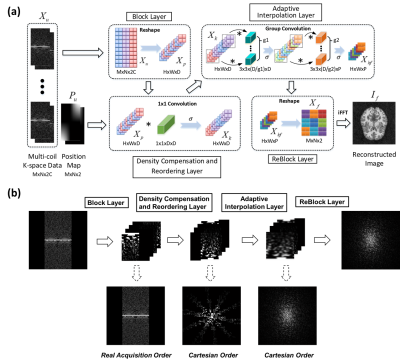

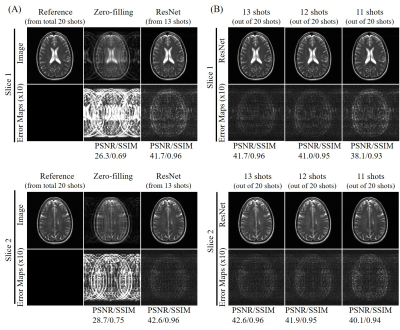

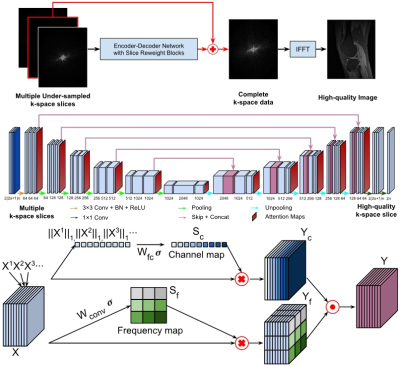

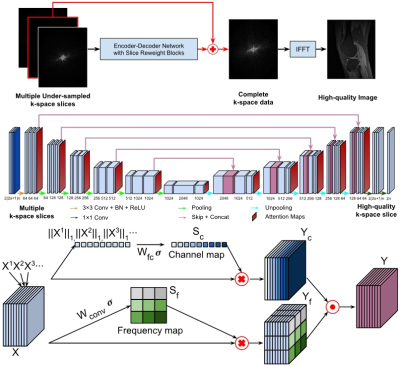

Figure-1. A residual Encoder-Decoder network of CNNs (top and middle), enhanced by frequency-attention and channel-attention layers (bottom), for image reconstruction from undersampled k-space data.

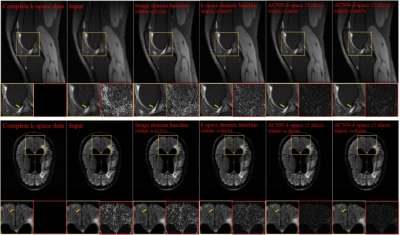

Figure-2. Visualization of representative cases of the Stanford knee dataset (top row) and the fastMRI brain dataset (bottom row), including images reconstructed from the fully sampled data and from under-sampled data without CNN processing. The difference images were amplified 5 times for the Stanford cases and 10 times for the fastMRI dataset. Yellow and red boxes indicate the zoomed-in and difference images, respectively.

-

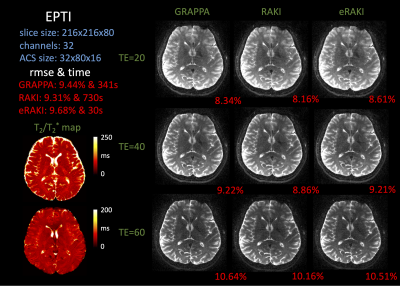

Accelerated Magnetic Resonance Spectroscopy with Model-inspired Deep Learning

Zi Wang1, Yihui Huang1, Zhangren Tu2, Di Guo2, Vladislav Orekhov3, and Xiaobo Qu1

1Department of Electronic Science, National Institute for Data Science in Health and Medicine, Xiamen University, Xiamen, China, 2School of Computer and Information Engineering, Xiamen University of Technology, Xiamen, China, 3Department of Chemistry and Molecular Biology, University of Gothenburg, Gothenburg, Sweden

This work is a proof of concept of the significance of merging optimization with deep learning for reliable, high-quality, and ultra-fast accelerated NMR spectroscopy, and it provides a relatively explicit understanding of the complex mapping in deep learning.

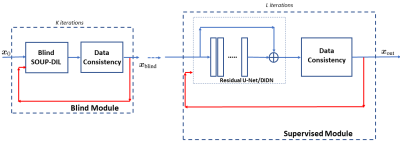

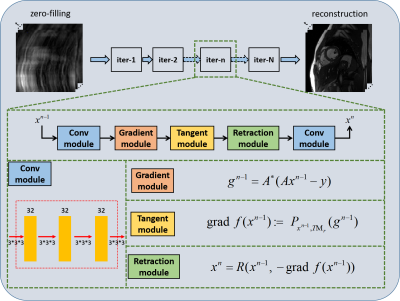

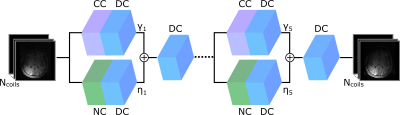

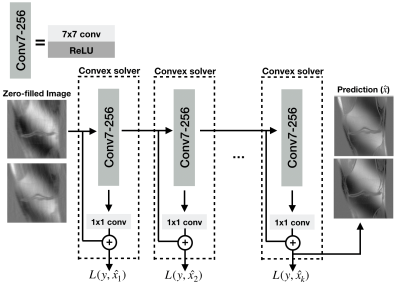

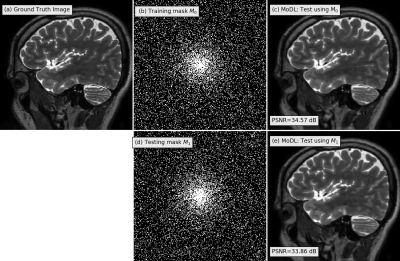

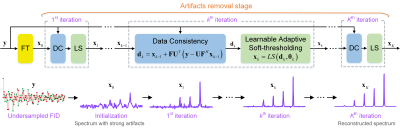

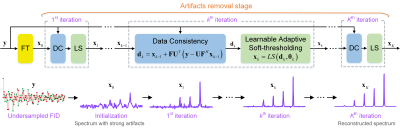

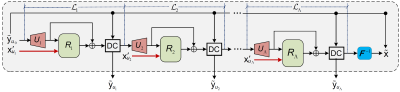

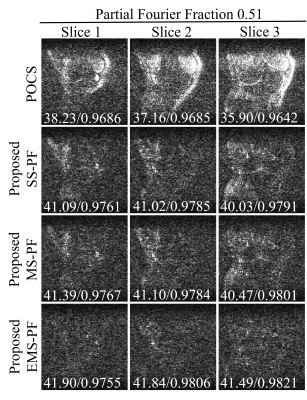

Figure 1. MoDern: The proposed Model-inspired Deep Learning framework for NMR spectra reconstruction. The recursive MoDern framework alternates between the data consistency (DC), which is the same as Eq. (1a), and the learnable adaptive soft-thresholding (LS) inspired by Eq. (1b). As the iterations increase, artifacts are gradually removed, and finally a high-quality reconstructed spectrum can be obtained. Note: “FT” is the Fourier transform. A data consistency step followed by a learnable adaptive soft-thresholding step constitutes an iteration.
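The alternation between data consistency and soft-thresholding described in this caption can be sketched in NumPy as follows. This is not the authors' network: Eq. (1a) and Eq. (1b) are not reproduced in this listing, and the learnable adaptive threshold is replaced here by a hand-picked geometrically decaying threshold. The names `soft_threshold`, `reconstruct_spectrum`, `rel_thr`, and `decay` are hypothetical.

```python
import numpy as np

def soft_threshold(x, thr):
    """Shrink the magnitude of each (complex) entry by thr, keeping its phase."""
    mag = np.abs(x)
    scale = np.maximum(mag - thr, 0.0) / np.maximum(mag, 1e-12)
    return x * scale

def reconstruct_spectrum(measured_fid, mask, n_iter=10, rel_thr=0.5, decay=0.7):
    """Alternate data consistency (time domain) and thresholding (spectrum).

    measured_fid holds the sampled time-domain points and zeros elsewhere.
    The fixed decay schedule stands in for the learned threshold (assumption).
    """
    spectrum = np.fft.fft(measured_fid, norm="ortho")    # zero-filled start
    thr0 = rel_thr * np.abs(spectrum).max()
    for k in range(n_iter):
        fid = np.fft.ifft(spectrum, norm="ortho")
        fid = np.where(mask, measured_fid, fid)          # data consistency
        spectrum = np.fft.fft(fid, norm="ortho")
        spectrum = soft_threshold(spectrum, thr0 * decay**k)
    return spectrum

# Toy example: a sparse synthetic spectrum sampled at about half of the time points.
rng = np.random.default_rng(0)
true_spectrum = np.zeros(64, dtype=complex)
true_spectrum[[5, 20, 41]] = [4.0, 2.0, 3.0]
fid = np.fft.ifft(true_spectrum, norm="ortho")
mask = rng.random(64) < 0.5
measured = np.where(mask, fid, 0.0)
recon = reconstruct_spectrum(measured, mask)
```

In the sketch, each pass through the loop is one iteration in the caption's sense: a data consistency step followed by a thresholding step.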

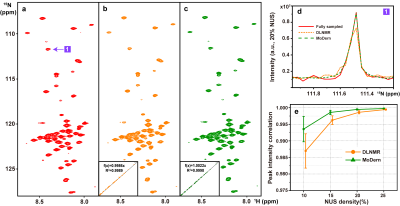

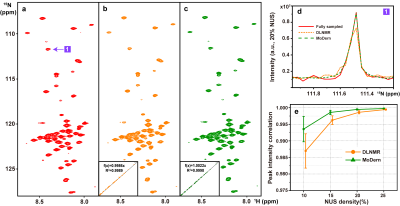

Figure 2. 2D 1H-15N HSQC spectrum of the cytosolic domain of CD79b protein. (a) The fully sampled spectrum. (b)-(c) are reconstructed spectra using DLNMR and MoDern from 20% data, respectively. (d) shows zoomed-out 1D 15N traces. (e) is the peak intensity correlation obtained with the two methods under different NUS levels. The insets of (b)-(c) show the corresponding peak intensity correlation between the fully sampled spectrum and the reconstructed spectrum. Note: The averages and standard deviations of the correlations in (e) are computed over 100 NUS trials.

-

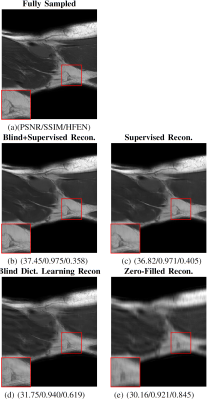

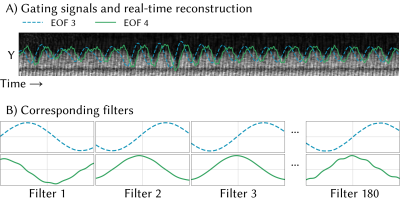

DL2 - Deep Learning + Dictionary Learning-based Regularization for Accelerated 2D Dynamic Cardiac MR Image Reconstruction

Andreas Kofler1, Tobias Schaeffter1,2,3, and Christoph Kolbitsch1,2

1Physikalisch-Technische Bundesanstalt, Berlin and Braunschweig, Berlin, Germany, 2School of Imaging Sciences and Biomedical Engineering, King's College London, London, United Kingdom, 3Department of Biomedical Engineering, Technical University of Berlin, Berlin, Germany

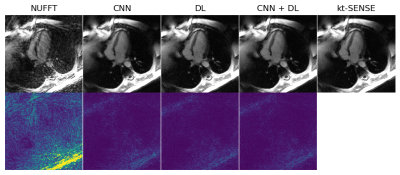

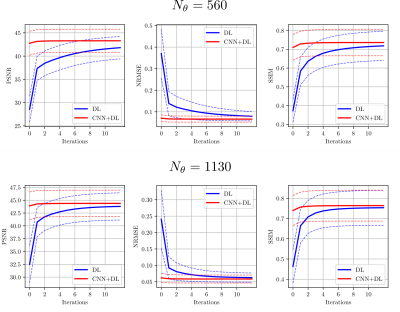

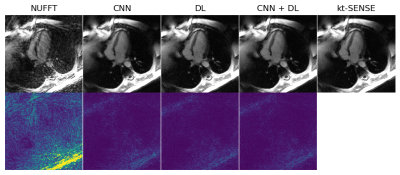

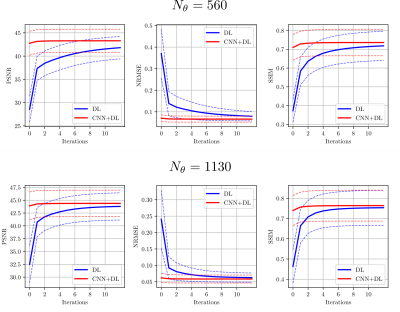

Here, we consider an image reconstruction method for dynamic cardiac MR which combines Convolutional Neural Networks (CNNs) with Dictionary Learning (DL) and Sparse Coding (SC). The combination of CNNs with DL+SC improves the results obtained with CNNs and DL+SC used separately.

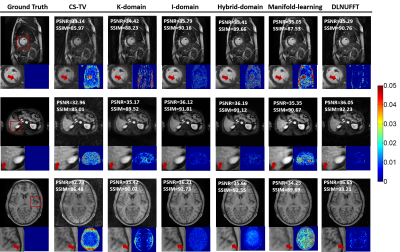

Figure 4: An example of results and corresponding point-wise error-images obtained for an acceleration factor of $$$R=18$$$. From left to right: The initial NUFFT-reconstruction obtained from $$$N_{\theta}=560$$$ radial spokes, the CNN-regularized solution10, the DL+SC-regularized solution7, the proposed method and the $$$kt$$$-SENSE reconstruction obtained from $$$N_{\theta}=3400$$$ radial spokes from which the $$$k$$$-space data was retrospectively simulated.

Figure 2: Convergence results for the proposed method (red) compared to DL and SC (blue) for the two acceleration factors $$$R=18$$$ and $$$R=9$$$ given by $$$N_{\theta}=560$$$ and $$$N_{\theta}=1130$$$ radial spokes, respectively. The solid lines correspond to the mean value of the respective measure obtained over the entire test set. The dashed lines correspond to the mean $$$\pm$$$ the standard deviation obtained over the test set.

Note that the values differ from the ones shown in the Table because here, no masks were used to restrict the calculations to a region of interest.

-

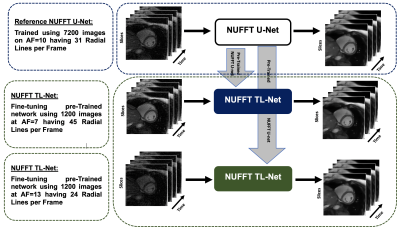

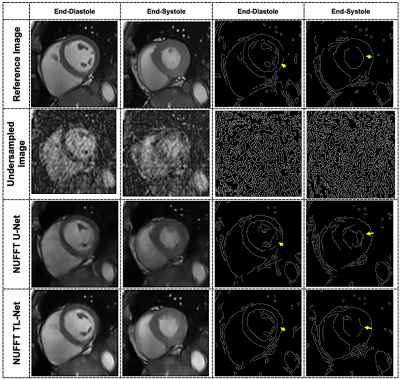

Cardiac Functional Analysis with Cine MRI via Deep Learning Reconstruction

Eric Z. Chen1, Xiao Chen1, Jingyuan Lyu2, Qi Liu2, Zhongqi Zhang3, Yu Ding2, Shuheng Zhang3, Terrence Chen1, Jian Xu2, and Shanhui Sun1

1United Imaging Intelligence, Cambridge, MA, United States, 2UIH America, Inc., Houston, TX, United States, 3United Imaging Healthcare, Shanghai, China

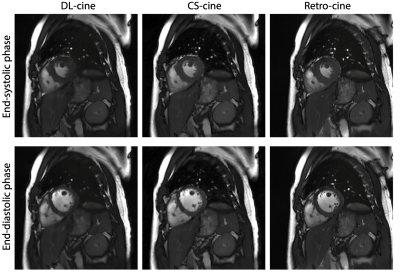

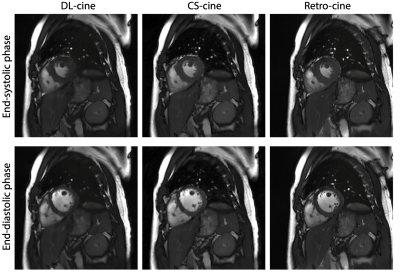

This is the first work to evaluate cine MRI with deep learning reconstruction for cardiac functional analysis. The cardiac functional values obtained from cine MRI with deep learning reconstruction are consistent with values from clinical standard retro-cine MRI.

Figure 2. Cardiac functional analysis based on DL-cine, CS-cine and retro-cine. Differences with statistical significance (p<0.05) are indicated by a star.

Figure 1. Examples of reconstructed images from DL-cine, CS-cine and retro-cine MRI. Data were acquired from the same subject in three different scans.

-

Deep Laplacian Pyramid Networks for Fast MRI Reconstruction with Multiscale T1 Priors

Xiaoxin Li1,2, Xinjie Lou1, Junwei Yang3, Yong Chen4, and Dinggang Shen2,5

1College of Computer Science and Technology, Zhejiang University of Technology, Hangzhou, China, 2School of Biomedical Engineering, ShanghaiTech University, Shanghai, China, 3Department of Computer Science and Technology, University of Cambridge, Cambridge, United Kingdom, 4Case Western Reserve University, Cleveland, OH, United States, 5Shanghai United Imaging Intelligence Co., Ltd., Shanghai, China

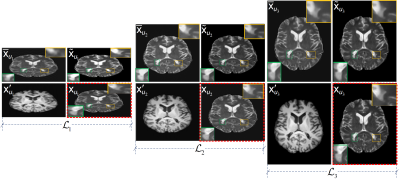

To accelerate multimodal Magnetic Resonance Imaging (MRI) acquisitions, we propose a deep Laplacian pyramid MRI reconstruction framework (LapMRI), which performs progressive upsampling while integrating multiscale T1 priors.

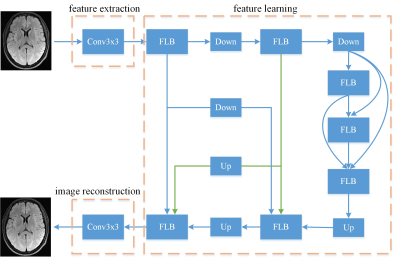

Figure 1: Schematic overview of the proposed LapMRI framework.

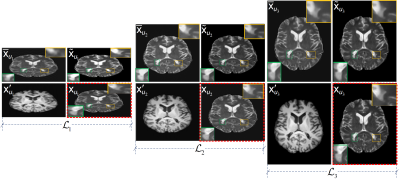

Figure 2: The inputs and outputs of LapMRI(D5C5, Λ=3) at three pyramid levels. For each pyramid level, the two images in the left column are the inputs, and the two images in the right column are the output and the respective ground-truth image, respectively. For visual understanding, the output of each pyramid level is displayed in the image domain, while the respective ground-truth image is framed with a red dotted box.

-

Deep learning-based reconstruction of highly-accelerated 3D MRI MPRAGE images

Sangtae Ahn1, Uri Wollner2, Graeme McKinnon3, Rafi Brada2, John Huston4, J. Kevin DeMarco5, Robert Y. Shih5,6, Joshua D. Trzasko4, Dan Rettmann7, Isabelle Heukensfeldt Jansen1, Christopher J. Hardy1, and Thomas K. F. Foo1

1GE Research, Niskayuna, NY, United States, 2GE Research, Herzliya, Israel, 3GE Healthcare, Waukesha, WI, United States, 4Mayo Clinic College of Medicine, Rochester, MN, United States, 5Walter Reed National Military Medical Center, Bethesda, MD, United States, 6Uniformed Services University of the Health Sciences, Bethesda, MD, United States, 7GE Healthcare, Rochester, MN, United States

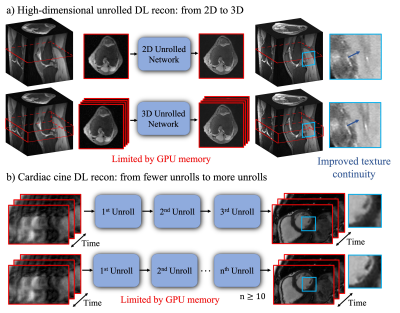

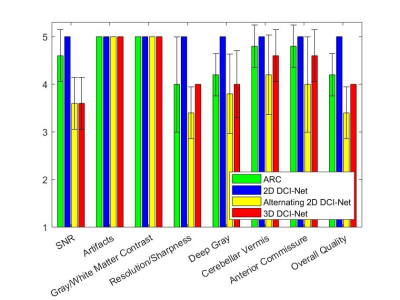

Our deep-learning reconstruction, DCI-Net, can accelerate 3D T1-weighted MPRAGE scans by an additional factor of 5 compared to conventional two-fold accelerated parallel acquisition, while maintaining comparable diagnostic image quality.

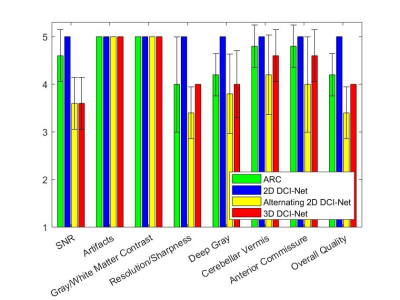

Figure 2. Image quality scores averaged over 5 subjects for ARC (net R=2.1) and 3 variants of DCI-Net (net R=10) in 8 scoring categories. The error bars denote the sample standard deviation.

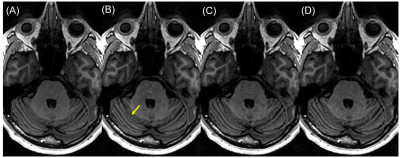

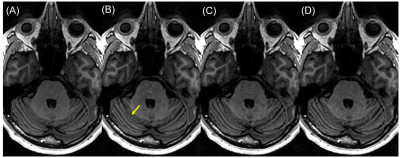

Figure 3. Example image slices from subject D, showing the cerebellar vermis indicated by the yellow arrow, reconstructed by (A) ARC (net R=2.1), (B) 2D DCI-Net (net R=10), (C) alternating 2D DCI-Net (net R=10), and (D) 3D DCI-Net (net R=10).

-

Improved CNN-based Image reconstruction using regularly under-sampled signal obtained in phase scrambling Fourier transform imaging

Satoshi ITO1 and Shun UEMATSU1

1Utsunomiya University, Utsunomiya, Japan

A CNN-based image reconstruction using phase scrambling Fourier transform imaging was proposed and demonstrated. The proposed method improved the preservation of structure and image contrast compared to the standard Fourier transform based CS-CNN.

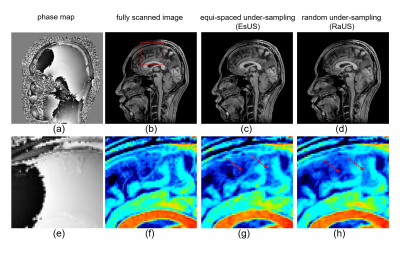

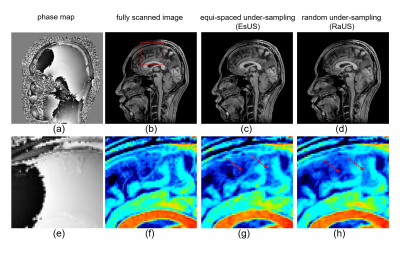

Figure 4. Results of reconstructing images with spatially varying phase. Figures (a) and (b) show the phase map and magnitude of the fully scanned image. Figures (c) and (d) are reconstructed images with EsUS and RaUS, respectively. Enlarged images corresponding to (a) through (d) are shown in (e) through (h).

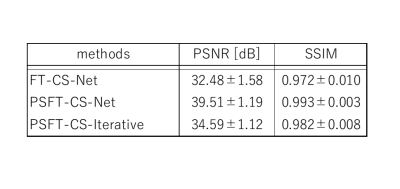

Figure 2. Comparison of PSNR and SSIM between PSFT-CS-Net and FT-CS-Net for real-value images. PSNR results using the sampling pattern of Fig.1 (a) are shown. CNN reconstruction using PSFT or FT imaging was compared with conventional iterative reconstruction.

-

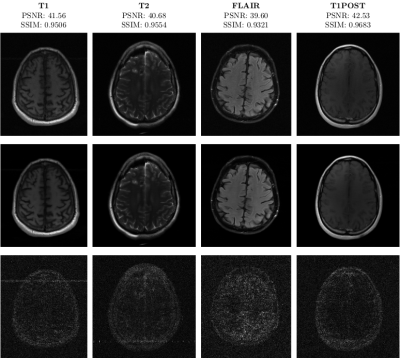

Progressive Volumetrization for Data-Efficient Image Recovery in Accelerated Multi-Contrast MRI

Mahmut Yurt1,2, Muzaffer Ozbey1,2, Salman Ul Hassan Dar1,2, Berk Tinaz1,2,3, Kader Karlı Oğuz2,4, and Tolga Çukur1,2,5

1Department of Electrical and Electronics Engineering, Bilkent University, Ankara, Turkey, 2National Magnetic Resonance Research Center, Bilkent University, Ankara, Turkey, 3Department of Electrical and Computer Engineering, University of Southern California, Los Angeles, CA, United States, 4Department of Radiology, Hacettepe University, Ankara, Turkey, 5Neuroscience Program, Aysel Sabuncu Brain Research Center, Bilkent University, Ankara, Turkey

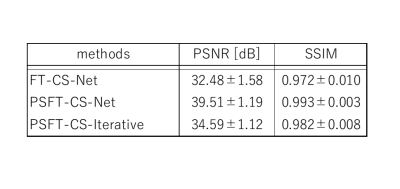

We propose a progressively volumetrized generative model for efficient context learning in 3D multi-contrast MRI accelerated across contrast sets or k-space coefficients. The proposed method decomposes volumetric recovery tasks into a sequence of less complex cross-sectional subtasks.

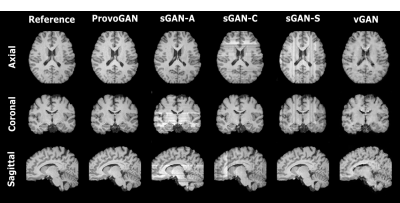

Fig. 1: ProvoGAN performs a series of cross-sectional subtasks optimally-ordered across individual rectilinear orientations (Axial$$$\rightarrow$$$Sagittal$$$\rightarrow$$$Coronal illustrated here) to handle the aimed volumetric recovery task. Within a given subtask, source-contrast volume is divided into cross-sections across the longitudinal dimension, and a cross-sectional mapping is learned to recover target cross-sections from source cross-sections, where the previous subtask's (if available) output is further incorporated to leverage contextual priors.

Fig. 2: ProvoGAN is demonstrated on IXI for T1-weighted image synthesis. Representative results from ProvoGAN, sGAN models (sGAN-A is trained axially, sGAN-C coronally, and sGAN-S sagittally), and vGAN are displayed for all rectilinear orientations (first row: axial, second row: coronal, third row: sagittal) together with reference images.

-

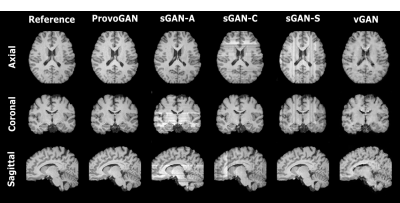

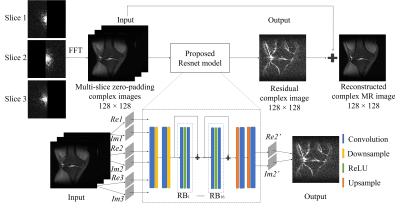

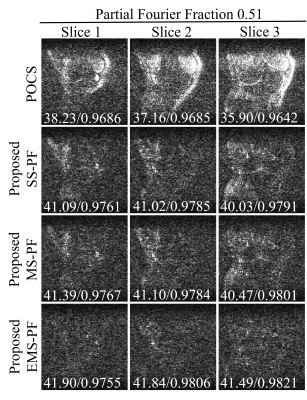

Enhanced Multi-Slice Partial Fourier MRI Reconstruction Using Residual Network

Linshan Xie1,2, Yilong Liu1,2, Linfang Xiao1,2, Peibei Cao1,2, Alex T. L. Leong1,2, and Ed X. Wu1,2

1Laboratory of Biomedical Imaging and Signal Processing, The University of Hong Kong, Hong Kong, China, 2Department of Electrical and Electronic Engineering, The University of Hong Kong, Hong Kong, China

A residual network based reconstruction method is proposed for multi-slice partial Fourier acquisition, where adjacent slices are sampled in a complementary way. It enables highly partial Fourier imaging without loss of image details or significant noise amplification.

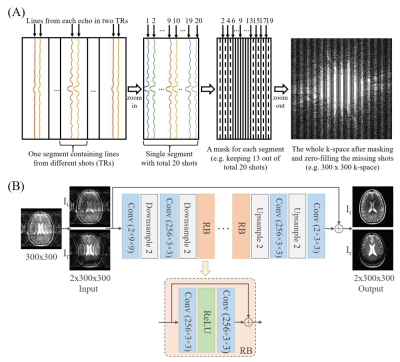

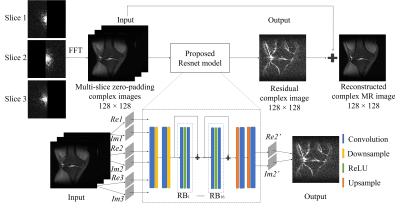

Figure 1 The flowchart of the proposed EMS-PF reconstruction method, with adjacent slices having complementary sampling patterns. Re and Im denote the real and imaginary parts of the reconstructed slice (Slice 2) and its adjacent slices (Slices 1 and 3); Re2’ and Im2’ denote the real and imaginary parts of the predicted residual image. The ResNet model has 16 RBs, each of which contains 2 convolutional layers followed by a Rectified Linear Unit (ReLU) activation function. Each convolutional layer includes 64 convolutional kernels of size 3×3.
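As a rough size check of the architecture in this caption, the arithmetic below counts the parameters in the 16 RBs. It assumes that every convolutional layer inside an RB has 64 input channels and a bias term, and it ignores any input or output layers; none of these details are stated in the caption.

```python
# Rough parameter count for the RBs described in the caption.
# Assumptions (not stated in the source): 64 input channels per layer,
# one bias per kernel, and no layers other than the RB convolutions.
kernel_h, kernel_w = 3, 3
channels = 64          # kernels per convolutional layer (from the caption)
n_blocks = 16          # number of RBs (from the caption)
convs_per_block = 2    # convolutional layers per RB (from the caption)

params_per_conv = kernel_h * kernel_w * channels * channels + channels
total_params = params_per_conv * convs_per_block * n_blocks

print(params_per_conv, total_params)  # prints "36928 1181696"
```

Under these assumptions the RBs hold roughly 1.2 million parameters.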

Figure 3 Error maps of the reconstructed images in Figure 2 with enhanced brightness (×10) and corresponding peak signal-to-noise ratio (PSNR) / structural similarity (SSIM) at PF fraction=0.51. The proposed EMS-PF clearly outperformed the other methods in terms of reduced residual error.

-

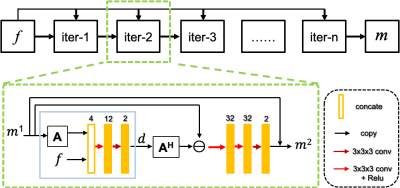

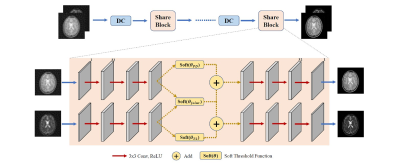

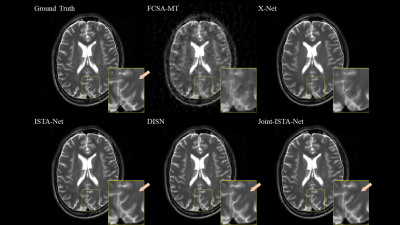

Joint-ISTA-Net: A model-based deep learning network for multi-contrast CS-MRI reconstruction

Yuan Lian1, Xinyu Ye1, Yajing Zhang2, and Hua Guo1

1Center for Biomedical Imaging Research, Department of Biomedical Engineering, School of Medicine, Tsinghua University, Beijing, China, 2MR Clinical Science, Philips Healthcare, Suzhou, China

We design a deep learning model, Joint-ISTA-Net, which exploits the group sparsity of multi-contrast MR images to improve the reconstruction quality of compressed sensing.

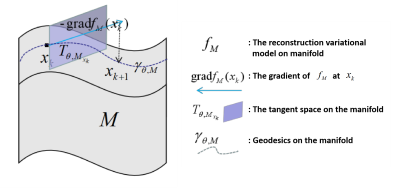

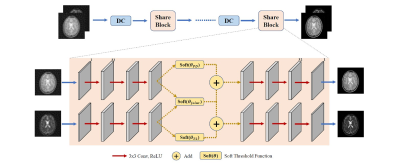

Fig 1. Structure of the proposed Joint-ISTA-Net and an iterative Share Block. In the Share Block, three 3x3 CNN layers with ReLU stand for the forward sparse transformation. Images in the transform domain pass through both individual and joint soft threshold functions to be denoised, and are then decoded back to the image domain. Here the joint soft threshold function is implemented to exploit the group sparsity property of multi-contrast MR images.
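The difference between individual and joint soft thresholding can be sketched with the standard shrinkage operators for the L1 and group (L2,1) norms. This is an illustration under assumptions: the abstract does not give the exact form or the learned thresholds used in Joint-ISTA-Net, and the function names, the fixed threshold, and the real-valued toy input are hypothetical.

```python
import numpy as np

def individual_soft_threshold(x, thr):
    """Shrink every real-valued coefficient independently (L1 shrinkage)."""
    return np.sign(x) * np.maximum(np.abs(x) - thr, 0.0)

def joint_soft_threshold(x, thr):
    """Shrink coefficients jointly across contrasts (group / L2,1 shrinkage).

    x has shape (n_contrasts, ...); each group is the set of coefficients
    at the same position in every contrast.
    """
    group_norm = np.sqrt((np.abs(x) ** 2).sum(axis=0, keepdims=True))
    scale = np.maximum(1.0 - thr / np.maximum(group_norm, 1e-12), 0.0)
    return x * scale

# Two contrasts, two transform-domain positions.
coeffs = np.array([[3.0, 0.1],
                   [4.0, 0.1]])
individual = individual_soft_threshold(coeffs, 1.0)  # [[2.0, 0.0], [3.0, 0.0]]
joint = joint_soft_threshold(coeffs, 1.0)            # [[2.4, 0.0], [3.2, 0.0]]
```

The joint operator keeps or removes a position for all contrasts together, which is how a shared support across contrasts can be exploited.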

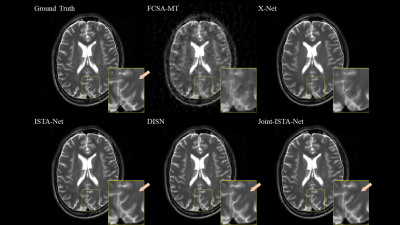

Fig 2. Comparison of reconstruction methods at R=10. The proposed Joint-ISTA-Net preserves features better and provides sharper edges.

-

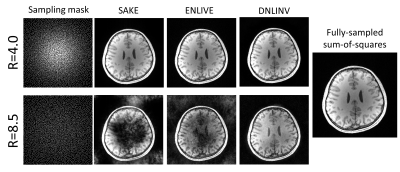

Training- and Database-free Deep Non-Linear Inversion (DNLINV) for Highly Accelerated Parallel Imaging and Calibrationless PI&CS MR Imaging

Andrew Palmera Leynes1,2 and Peder E.Z. Larson1,2

1Department of Radiology and Biomedical Imaging, University of California San Francisco, San Francisco, CA, United States, 2UC Berkeley - UC San Francisco Joint Graduate Program in Bioengineering, Berkeley and San Francisco, CA, United States

We introduce Deep Non-Linear Inversion (DNLINV), a deep image reconstruction approach that may be used with any hardware and acquisition configuration. We demonstrate DNLINV on different anatomies and sampling patterns and show high quality reconstructions at higher acceleration factors.

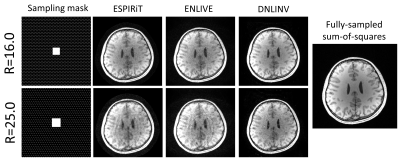

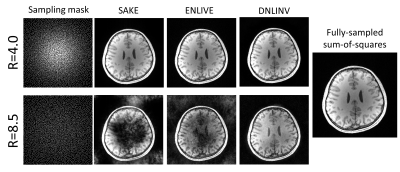

Figure 4. Calibrationless parallel imaging and compressed sensing on a T1-weighted brain image. All methods were able to successfully reconstruct the image at R=4.0. However, at R=8.5, only DNLINV was able to reconstruct the image without any loss of structure. Furthermore, DNLINV reconstructions have higher apparent SNR.

Figure 5. Autocalibrating parallel imaging with CAIPI sampling on a T1-weighted brain image. All methods were able to successfully reconstruct the image at R=16.0, with DNLINV having the highest apparent SNR. At R=25.0, residual aliasing artifacts remain on ESPIRiT and ENLIVE, while these are largely suppressed in DNLINV.

-

A Modified Generative Adversarial Network using Spatial and Channel-wise Attention for Compressed Sensing MRI Reconstruction

Guangyuan Li1, Chengyan Wang2, Weibo Chen3, and Jun Lyu1

1School of Computer and Control Engineering, Yantai University, Yantai, China, 2Human Phenome Institute, Fudan University, Shanghai, China, 3Philips Healthcare, Shanghai, China

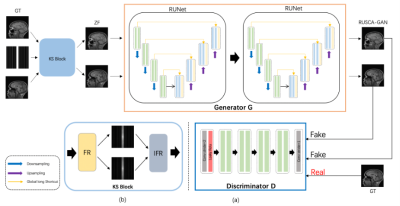

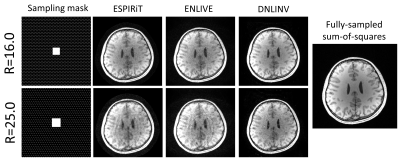

To improve CS-MRI reconstruction under high undersampling, we propose a modified GAN architecture for accelerating CS-MRI reconstruction, namely RSCA-GAN, which adds spatial and channel-wise attention to Generative Adversarial Networks.

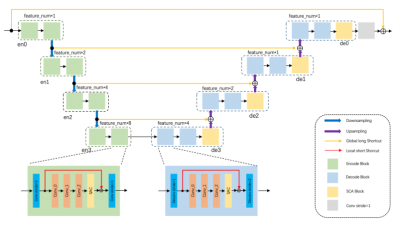

Fig. 1. (a) Framework of the proposed method. The generator of the network is composed of two connected residual autoencoder U-nets. The discriminator is composed of 6 layers. (b) Composition of KS-Block.

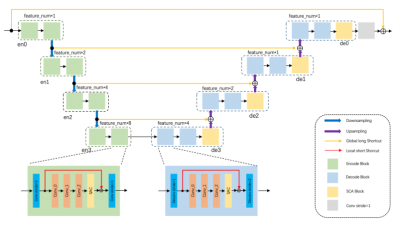

Fig. 2. The architecture of Residual SCAU-Net. The encode block is indicated in green, and the decode block is indicated in blue. A 4D tensor is used as input, and 2D convolutions with a filter_size of 3x3 and a stride of 2 are used. The number of feature maps is defined as feature_num=64. The residual block, shown in orange, is used to increase the depth of the network. The SCA Block, shown in yellow, is composed of spatial attention and channel attention.

-

Compressed sensing MRI via a fusion model based on image and gradient priors

Yuxiang Dai1, Chengyan Wang2, and He Wang1

1Institute of Science and Technology for Brain-Inspired Intelligence, Fudan University, Shanghai, China, 2Human Phenome Institute, Fudan University, Shanghai, China

We proposed a fusion model based on the optimization method to integrate the image and gradient-based priors into CS-MRI for better reconstruction results via convolutional neural network models. In addition, the proposed fusion model exhibited effective reconstruction performance in MRA.

Figure 1 The framework of the proposed fusion model, in which the upper network is MDN and the lower network is SRLN. $$$N_f$$$ represents the number of convolutional kernels, and DF represents the dilation factor of the convolutional kernel. $$$c_n$$$ and $$$res_n$$$ represent the n-th convolution layer and residual learning, respectively.

Figure 2 Reconstruction results for 30% radial sampling. The first and third rows include the ground truth and reconstruction results of CS-MRI methods. The second and fourth rows include the radial sampling mask and errors. Values of PSNR and SSIM are shown in the upper left corner.

-

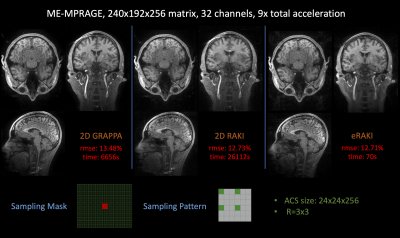

Kernel-based Fast EPTI Reconstruction with Neural Network

Muheng Li1, Jie Xiang2, Fuyixue Wang3,4, Zijing Dong3,5, and Kui Ying2

1Department of Automation, Tsinghua University, Beijing, China, 2Department of Engineering Physics, Tsinghua University, Beijing, China, 3A. A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Charlestown, MA, United States, 4Harvard-MIT Health Sciences and Technology, MIT, Cambridge, MA, United States, 5Department of Electrical Engineering and Computer Science, MIT, Cambridge, MA, United States

Through image reconstruction tests on human brain data set acquired by EPTI, we demonstrated the high efficiency of the kernel-based reconstruction with neural network by shortening the reconstruction time of 216×216×48×32 k-data from over 10 minutes to about 20 seconds.

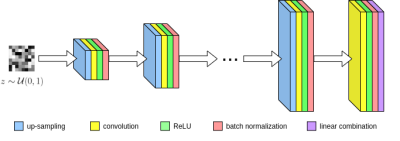

Figure 2. Process of restoring the missing k-data in the specified kernel. The acquired data in this kernel and all the data in the target region are each extracted as a 1D vector. The mapping function can be fitted based on the fully sampled calibration data.
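Fitting a mapping from the acquired data in a kernel to the data in the target region can be sketched with a linear least-squares fit. This swaps in a plain linear model in place of the neural network used in the abstract, and the vector lengths, variable names, and synthetic calibration data are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_calib, n_acquired, n_target = 200, 6, 4   # hypothetical sizes

def complex_normal(shape):
    """Synthetic complex-valued data standing in for k-space samples."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

# Synthetic calibration set: each row pairs the acquired-data vector of one
# kernel position with the target-region vector at the same position.
true_weights = complex_normal((n_acquired, n_target))
calib_acquired = complex_normal((n_calib, n_acquired))
calib_target = calib_acquired @ true_weights

# Fit the mapping on the fully sampled calibration data (linear stand-in
# for the neural network of the abstract).
weights, *_ = np.linalg.lstsq(calib_acquired, calib_target, rcond=None)

# Apply the fitted mapping to a kernel taken from undersampled data.
new_acquired = complex_normal((1, n_acquired))
restored_target = new_acquired @ weights
```

Replacing the linear fit with a small fully connected network trained on the same calibration pairs would be closer in spirit to the kernel-based neural network reconstruction described in the synopsis.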

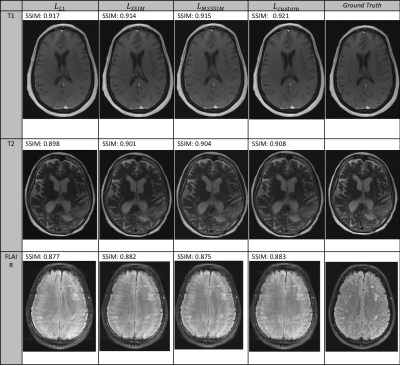

Figure 4. Reconstructed images with different (a) loss functions: MSE, MAE, Huber; (b) numbers of nodes in each hidden layer; (c) multi-contrast and reference images by the conventional linear algorithm.

-

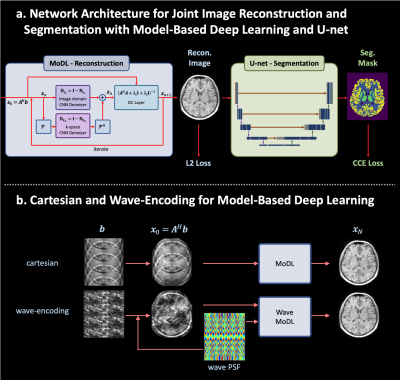

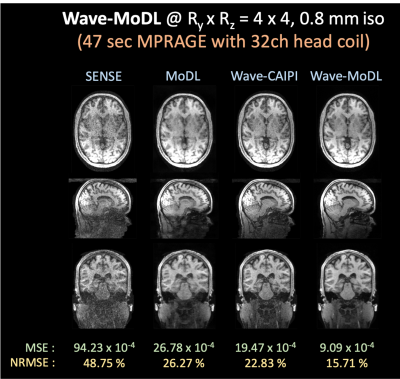

Wave-Encoded Model-Based Deep Learning with Joint Reconstruction and Segmentation

Jaejin Cho1,2, Qiyuan Tian1,2, Robert Frost1,2, Itthi Chatnuntawech3, and Berkin Bilgic1,2,4

1Athinoula A. Martinos Center for Biomedical Imaging, Charlestown, MA, United States, 2Harvard Medical School, Cambridge, MA, United States, 3National Nanotechnology Center, Pathum Thani, Thailand, 4Harvard/MIT Health Sciences and Technology, Cambridge, MA, United States

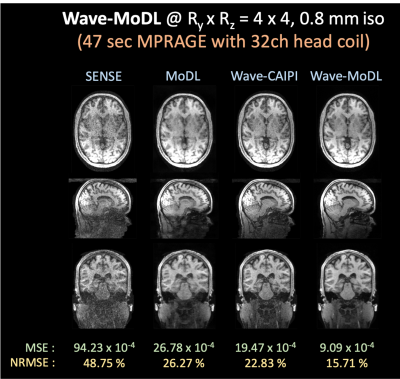

Simultaneously training wave-encoded model-based deep learning (wave-MoDL) with hybrid k- and image-space priors and a U-net enables high-fidelity image reconstruction and segmentation performance at high acceleration rates.

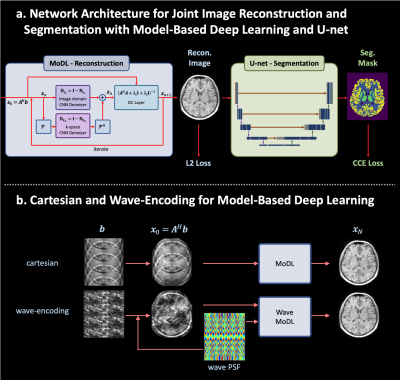

Figure 1. a. The proposed network architecture for joint MRI reconstruction and segmentation with model-based deep learning and U-net. b. The reconstruction scheme using model-based deep learning for Cartesian and wave-encodings.

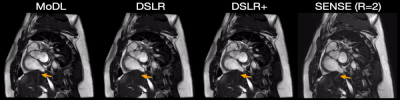

Figure 5. Reconstructed images using SENSE, MoDL, wave-CAIPI and wave-MoDL at RyxRz=4x4 on 32-channel HCP data.