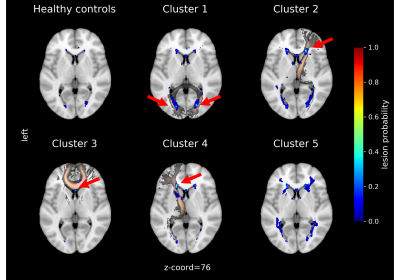

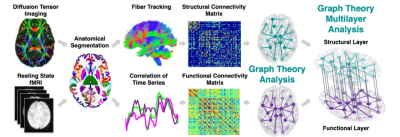

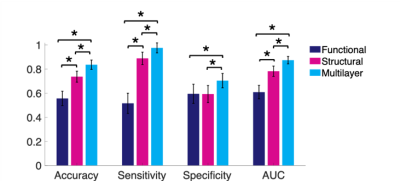

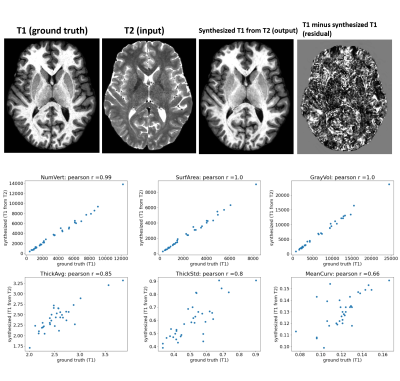

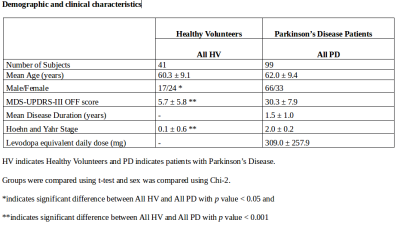

-

Stratifying ischaemic stroke patients across 3 treatment windows using T2 relaxation times, ordinal regression and cumulative probabilities

Bryony L. McGarry1,2, Elizabeth Hunter1, Robin A. Damion2, Michael J. Knight2, Philip L. Clatworthy3, George Harston4, Keith W. Muir5, Risto A. Kauppinen6, and John D. Kelleher1

1PRECISE4Q Predictive Modelling in Stroke, Information Communications and Entertainment Institute, Technological University Dublin, Dublin, Ireland, 2School of Psychological Science, University of Bristol, Bristol, United Kingdom, 3Stroke Neurology, North Bristol NHS Trust, Bristol, United Kingdom, 4Acute Stroke Programme, Radcliffe Department of Medicine, University of Oxford, Oxford, United Kingdom, 5Institute of Neuroscience and Psychology, University of Glasgow, Glasgow, United Kingdom, 6Faculty of Engineering, University of Bristol, Bristol, United Kingdom

Using ordinal logistic regression, T2 relaxation times can be used to calculate the probabilities of an acute ischaemic stroke patient with unknown onset time being within treatment time-windows for intravenous thrombolysis, intra-arterial thrombolysis and mechanical thrombectomy.
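The cumulative probabilities in the summary above come from a proportional-odds (cumulative logit) model, in which logit P(Y ≤ k | x) = θ_k − βx for ordered categories k. A minimal Python sketch of that calculation follows. The cut-points `thresholds`, the slope `beta` and the single standardised T2 ratio input are hypothetical placeholders, not the coefficients fitted in this study.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def cumulative_probs(x: float, thresholds: Sequence[float], beta: float) -> List[float]:
    """P(Y <= k | x) for each ordered cut-point theta_k (thresholds must increase)."""
    return [sigmoid(theta - beta * x) for theta in thresholds]


def category_probs(x: float, thresholds: Sequence[float], beta: float) -> List[float]:
    """P(Y = k | x) for every category, obtained by differencing cumulative probabilities."""
    cum = cumulative_probs(x, thresholds, beta) + [1.0]  # the last category closes at 1
    probs, prev = [], 0.0
    for c in cum:
        probs.append(c - prev)
        prev = c
    return probs


# Hypothetical example: three ordered windows, standardised T2 ratio of 0.5.
print(category_probs(0.5, thresholds=[1.0, 2.0], beta=1.5))
```

With a positive `beta`, a larger T2 ratio lowers every P(Y ≤ k) and shifts probability toward the later windows, which is the direction one would expect if T2 ratios rise with time from onset.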

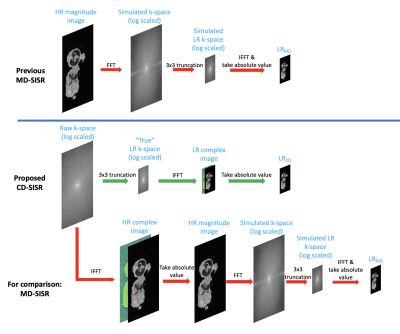

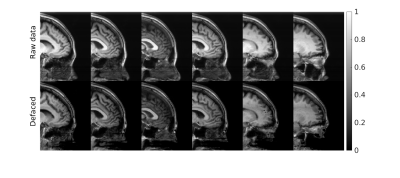

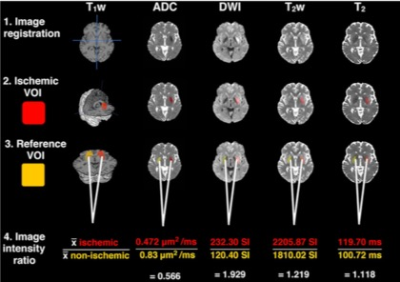

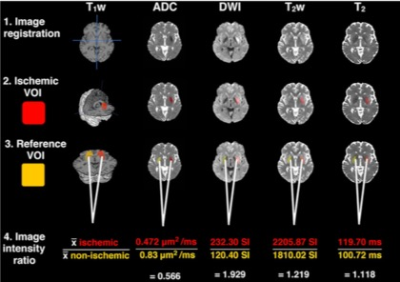

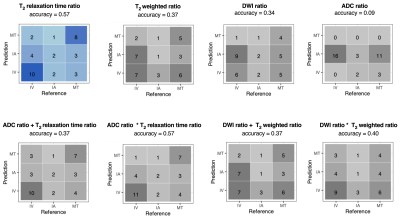

1. All images were resampled to 1 mm isotropic resolution and co-registered to the MNI-registered T1W image.
2. Ischaemic VOIs were created using previously described ADC and T2 limits to reduce CSF contribution [2,10].
3. Non-ischaemic VOIs were created by reflecting the ischaemic VOI across the vertical axis and applying the same ADC and T2 limits.
4. Image intensity ratios were computed by dividing the mean value within the ischaemic VOI by the mean value within the non-ischaemic VOI.

SI = signal intensity.
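Steps 3 and 4 above can be sketched with NumPy as follows. This assumes the images are already in a common space where the brain midline sits at the centre of the left-right array axis, so flipping the array reflects about the midline. It also takes a precomputed boolean `limits_mask` as a hypothetical stand-in for the ADC and T2 limits. Neither detail is specified in the abstract.

```python
import numpy as np


def mirror_voi(mask: np.ndarray, lr_axis: int = 0) -> np.ndarray:
    """Reflect a boolean VOI across the array centre along the left-right axis."""
    return np.flip(mask, axis=lr_axis)


def intensity_ratio(image: np.ndarray, ischaemic: np.ndarray,
                    limits_mask: np.ndarray, lr_axis: int = 0) -> float:
    """Mean signal in the ischaemic VOI divided by mean signal in its mirrored VOI.

    `limits_mask` marks voxels that pass the (hypothetical) ADC/T2 limits and is
    applied to both VOIs to reduce CSF contribution.
    """
    isch_voi = ischaemic & limits_mask
    contra_voi = mirror_voi(ischaemic, lr_axis) & limits_mask
    return float(image[isch_voi].mean() / image[contra_voi].mean())
```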

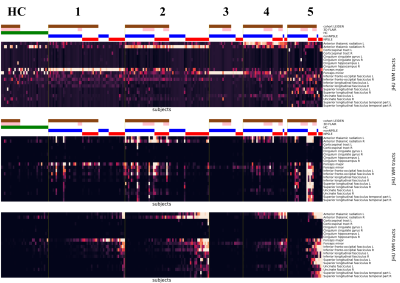

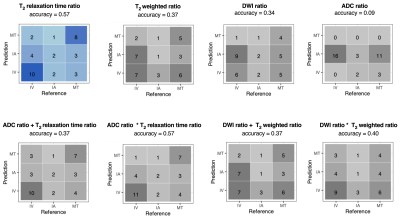

Accuracy and confusion matrices for cumulative ordinal regression models. Darker shades indicate a higher number of correct predictions. The standardised T2 relaxation time ratio was the most accurate at identifying patients within each treatment window. All models identified patients within the middle IA treatment window. In this figure, + indicates a linear combination of input features, and * indicates the inclusion of an interaction term.

-

Distribution indices of QSM values in M1 enable to classify ALS patients and healthy controls

Mauro Costagli1,2, Graziella Donatelli3,4, Paolo Cecchi3,4, Gabriele Siciliano4,5, and Mirco Cosottini3,4,5

1University of Genova, Genova, Italy, 2IRCCS Stella Maris, Pisa, Italy, 3IMAGO 7 Research Foundation, Pisa, Italy, 4Azienda Ospedaliero Universitaria Pisana, Pisa, Italy, 5University of Pisa, Pisa, Italy

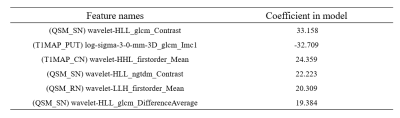

The joint use of different distribution indices of M1 QSM values in a Support Vector Machine enables discrimination between patients with ALS and controls with high diagnostic accuracy.

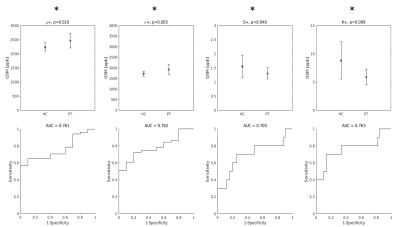

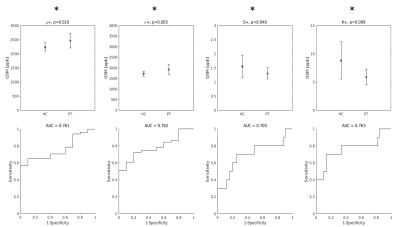

Top row: group differences in the distribution indices of M1 QSM positive values. Asterisks indicate that all differences were statistically significant. Bottom row: diagnostic accuracy of each feature.

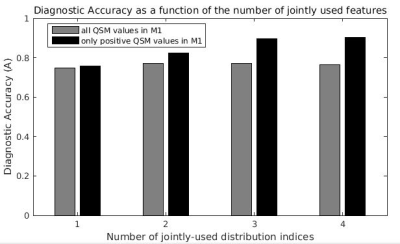

Maximum diagnostic accuracy of SVM classifiers as a function of the number of QSM distribution indices jointly considered. For example, the last black bar on the right indicates the maximum diagnostic accuracy (A = 0.90) obtained with the joint use of all four distribution indices of QSM positive values in M1.
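The search behind this figure, which reports the best accuracy for each number of jointly used indices, can be sketched as an exhaustive loop over feature subsets. In this Python sketch, `evaluate` is a hypothetical callback that would train and cross-validate a classifier (for example an SVM) on the given subset and return its accuracy. The toy scorer at the end only demonstrates the call and is not real data.

```python
from itertools import combinations
from typing import Callable, Dict, Sequence, Tuple

Subset = Tuple[str, ...]


def max_accuracy_by_subset_size(
    features: Sequence[str],
    evaluate: Callable[[Subset], float],
) -> Dict[int, Tuple[float, Subset]]:
    """For each subset size k, return (best accuracy, subset that achieved it)."""
    best: Dict[int, Tuple[float, Subset]] = {}
    for k in range(1, len(features) + 1):
        # Score every k-feature combination and keep the highest-scoring one.
        best[k] = max((evaluate(s), s) for s in combinations(features, k))
    return best


# Toy scorer for illustration only: accuracy grows with the number of indices.
toy = lambda subset: 0.5 + 0.1 * len(subset)
print(max_accuracy_by_subset_size(["idx1", "idx2", "idx3", "idx4"], toy))
```

With four indices there are only 15 non-empty subsets, so exhaustive search is cheap; the count grows as 2^n − 1 for n indices.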

-

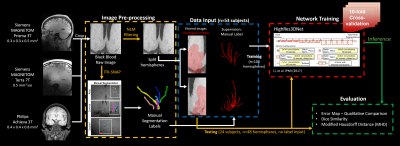

4D flow MRI hemodynamic quantification of pediatric patients with multi-site, multi-vendor, and multi-channel machine learning segmentation

Takashi Fujiwara1, Haben Berhane2,3, Michael Baran Scott3, Zachary King2, Michal Schafer4, Brian Fonseca4, Joshua Robinson3, Cynthia Rigsby2,3, Lorna Browne4, Michael Markl3, and Alex Barker1,5

1Department of Radiology, Children's Hospital Colorado, University of Colorado Anschutz Medical Campus, Aurora, CO, United States, 2Lurie Children's Hospital of Chicago, Chicago, IL, United States, 3Northwestern University, Evanston, IL, United States, 4Children's Hospital Colorado, University of Colorado Anschutz Medical Campus, Aurora, CO, United States, 5Department of Bioengineering, University of Colorado Anschutz Medical Campus, Aurora, CO, United States

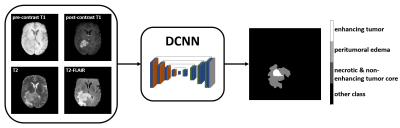

We found that multi-site, multi-vendor 4D flow MRI training datasets improved the segmentation of large arteries in cases with challenging anatomy, improving flow quantification in difficult cases as well as overall performance.

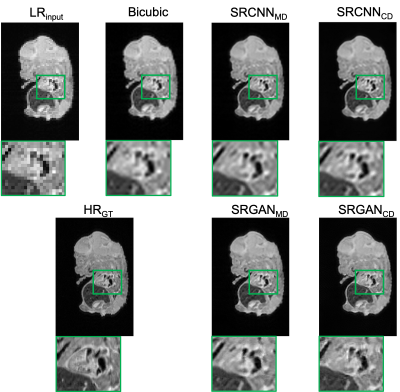

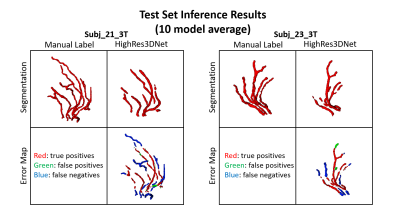

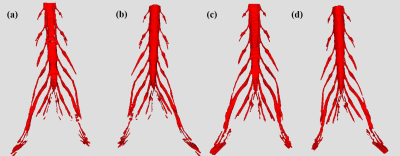

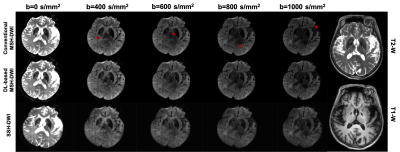

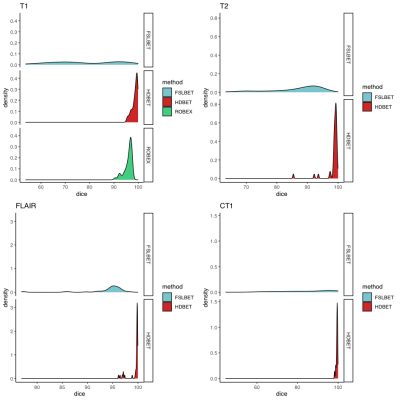

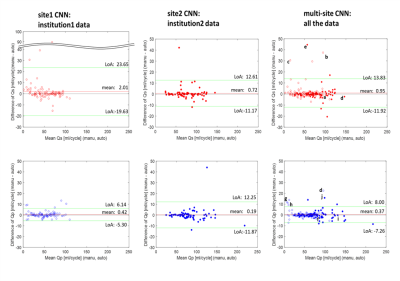

Fig. 3 Examples of successful and failed (difference ≥ 10 ml/cycle) hemodynamic measurements in multi-site training. Segmentations of the aorta (red) and pulmonary arteries (PA, blue) from both the single-site and multi-site CNNs are presented with Dice scores. The letters correspond to those in Fig. 2. ToF, tetralogy of Fallot; TR, tricuspid regurgitation; HLHS, hypoplastic left heart syndrome.
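The Dice scores quoted in Fig. 3 measure the overlap between two segmentations, 2|A ∩ B| / (|A| + |B|). A short NumPy sketch for binary masks follows. Returning 1.0 when both masks are empty is a convention chosen here, not something stated in the abstract.

```python
import numpy as np


def dice(a, b) -> float:
    """Dice similarity coefficient between two binary masks of equal shape."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement (a convention)
    return float(2.0 * np.logical_and(a, b).sum() / total)


# Example: one shared voxel out of two labelled voxels in each mask.
print(dice([1, 1, 0, 0], [1, 0, 1, 0]))  # 0.5
```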

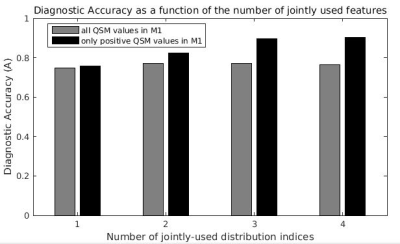

Fig. 2 Bland-Altman plots for net flow in the ascending aorta (Qs, upper row) and main pulmonary trunk (Qp, lower row) quantified by the site1 CNN, site2 CNN, and multi-site CNN. Institution1 data are plotted as open circles, while institution2 data are shown as solid circles. Limits of agreement and mean differences are presented as green and red lines. The letter labels indicate successful and failed (difference ≥ 10 ml/cycle) examples of flow quantification and correspond to the segmentations shown in Fig. 3.
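The quantities in these Bland-Altman plots can be computed as below. This Python sketch assumes paired net-flow values in ml/cycle from a CNN-based and a reference segmentation, and it uses the conventional mean ± 1.96 SD limits of agreement. It flags cases whose absolute difference reaches the 10 ml/cycle threshold used in the caption. The function name and inputs are illustrative.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def bland_altman(cnn: Sequence[float], reference: Sequence[float],
                 fail_threshold: float = 10.0) -> Tuple[float, Tuple[float, float], List[int]]:
    """Return (mean difference, 95% limits of agreement, indices of failed cases)."""
    diffs = [c - r for c, r in zip(cnn, reference)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)  # sample SD of the paired differences
    failed = [i for i, d in enumerate(diffs) if abs(d) >= fail_threshold]
    return bias, (bias - half_width, bias + half_width), failed


# Illustrative values only (ml/cycle).
print(bland_altman([50.0, 60.0, 72.0], [50.0, 58.0, 60.0]))
```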

-

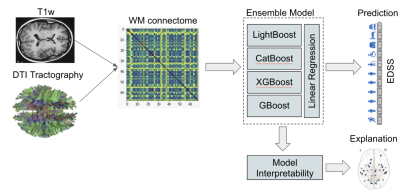

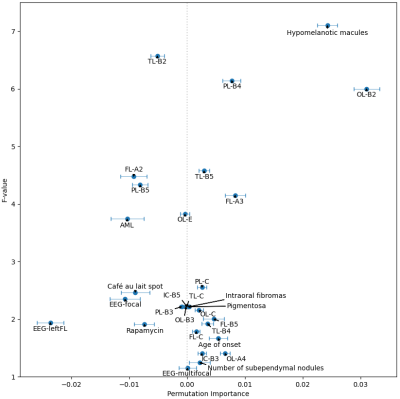

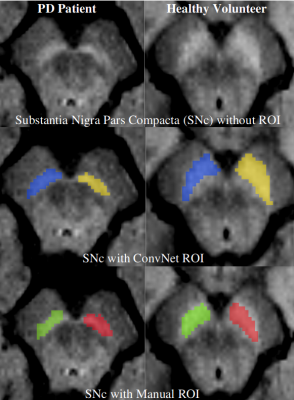

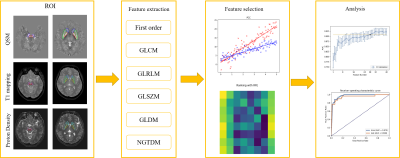

Delineating parkinsonian disorders using T1-weighted MRI based radiomics

Priyanka Tupe Waghmare1, Archith Rajan2, Shweta Prasad3, Jitender Saini4, Pramod Kumar Pal5, and Madhura Ingalhalikar6

1E&TC, Symbiosis Institute of Technology, Pune, India, 2Symbiosis Centre for Medical Image Analysis, Pune, India, 3Department of Clinical Neurosciences and Neurology, National Institute of Mental Health & Neurosciences, Bangalore, India, 4Department of Neuroimaging & Interventional Radiology, National Institute of Mental Health & Neurosciences, Bangalore, India, 5Department of Neurology, National Institute of Mental Health & Neurosciences, Bangalore, India, 6Symbiosis Center for Medical Image Analysis and Symbiosis Institute of Technology, Pune, India

This study establishes the utility of radiomics to differentiate Parkinson’s disease (PD) and atypical Parkinsonian syndromes (APS) using routine T1-weighted images. PD and APS were classified with an accuracy of 92% using random forest classifiers.

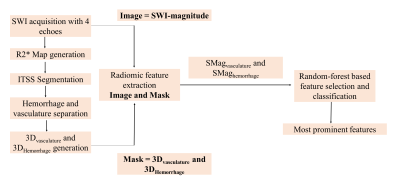

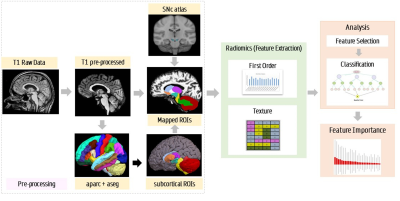

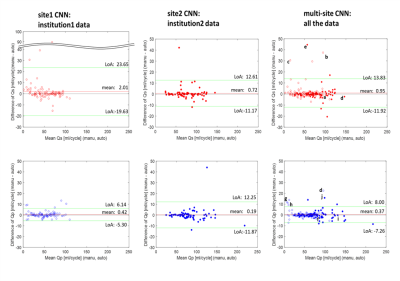

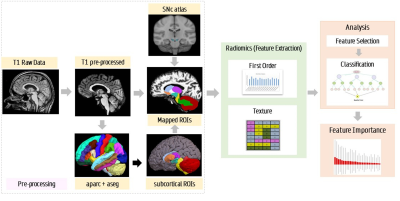

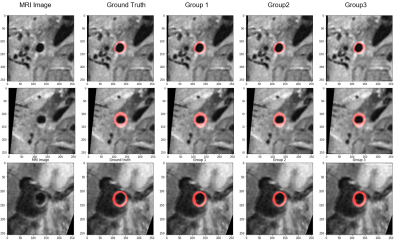

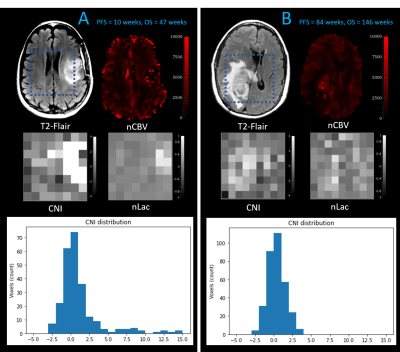

Pipeline for radiomics analysis and feature extraction

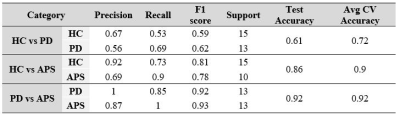

Classification results based on T1 radiomics

-

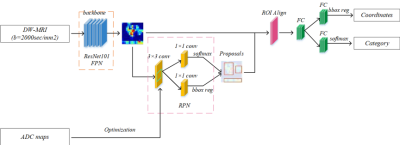

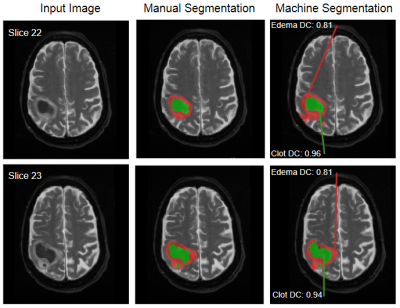

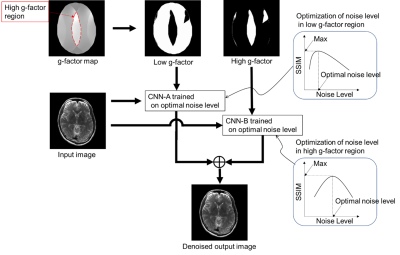

Automatic segmentation of arterial vessel wall on undersampled MR image using deep learning

Shuai Shen1,2,3,4, Xiong Yang5, Jin Fang6, Guihua Jiang6, Shuheng Zhang5, Yanqun Teng5, Xiaomin Ren5, Lele Zhao5, Jiayu Zhu5, Qiang He5, Hairong Zheng1,3,4, Xin Liu1,3,4, and Na Zhang1,3,4

1Paul C. Lauterbur Research Center for Biomedical Imaging, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 2College of Software, Xinjiang University, Urumqi, China, 3Key Laboratory for Magnetic Resonance and Multimodality Imaging of Guangdong Province, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 4CAS Key Laboratory of Health Informatics, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 5Shanghai United Imaging Healthcare Co., Ltd., Shanghai, China, 6Department of Radiology, Guangdong Second Provincial General Hospital, Guangdong, China

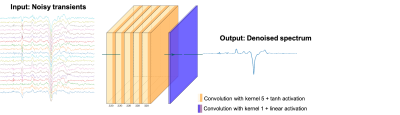

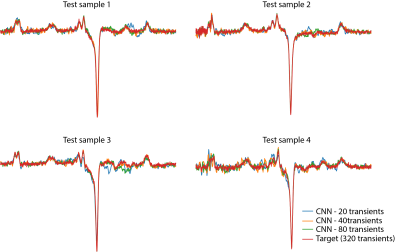

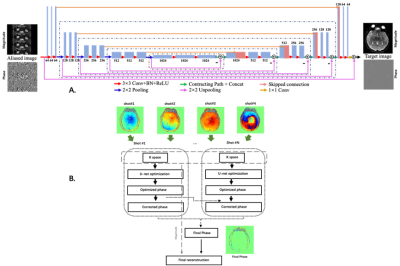

In this study, we developed and evaluated a U-net neural network architecture to segment the arterial vessel wall on the originally acquired MR vessel wall images and on the corresponding images reconstructed from undersampled k-space data. The segmentation results obtained for the different groups were similar.
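Retrospective undersampling of the kind described here can be simulated by masking phase-encode lines of the fully sampled k-space. The NumPy sketch below assumes a 2D magnitude image, a random Cartesian line mask with a fully sampled centre, and a plain zero-filled inverse FFT. The abstract does not state its sampling pattern or reconstruction method, so these are illustrative choices.

```python
import numpy as np


def undersample_zero_filled(image: np.ndarray, acceleration: int = 4,
                            center_lines: int = 8, seed: int = 0):
    """Keep about 1/acceleration of the phase-encode rows and return
    (zero-filled magnitude reconstruction, boolean row mask)."""
    rng = np.random.default_rng(seed)
    ny = image.shape[0]
    kspace = np.fft.fftshift(np.fft.fft2(image))  # move low frequencies to the centre

    mask = np.zeros(ny, dtype=bool)
    c = ny // 2
    mask[c - center_lines // 2: c + center_lines // 2] = True  # fully sampled centre

    # Randomly add outer lines until the target number of lines is reached.
    n_extra = max(ny // acceleration - int(mask.sum()), 0)
    outer = np.flatnonzero(~mask)
    mask[rng.choice(outer, size=n_extra, replace=False)] = True

    recon = np.fft.ifft2(np.fft.ifftshift(kspace * mask[:, None]))
    return np.abs(recon), mask
```

With `acceleration=1` every line is kept and the reconstruction returns the original magnitude image, which is a quick sanity check before comparing segmentations across undersampling rates.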

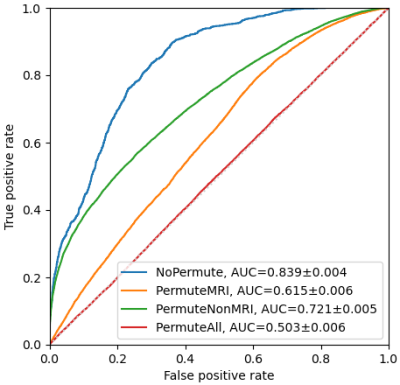

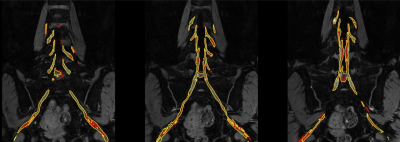

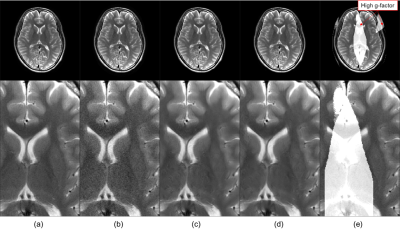

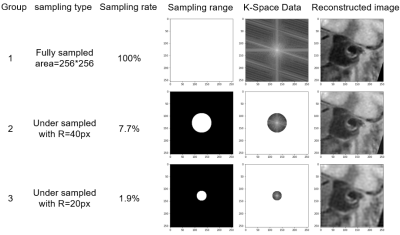

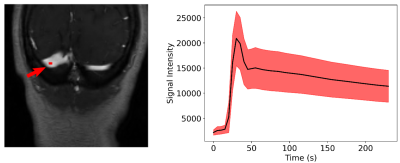

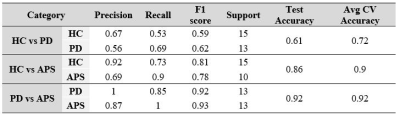

Figure 1: Representative original images and undersampled images at different undersampling rates.

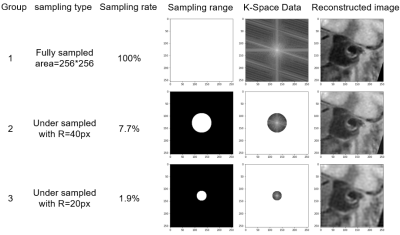

Figure 2: Representative segmentation results from the three experimental groups.

-

Automatic Vascular Function Estimation using Deep Learning for Dynamic Contrast-enhanced Magnetic Resonance Imaging

Wallace Souza Loos1,2, Roberto Souza2,3, Linda Andersen1,2, R. Marc Lebel2,4, and Richard Frayne1,2

1Radiology and Clinical Neuroscience, Hotchkiss Brain Institute, University of Calgary, Calgary, AB, Canada, 2Seaman Family MR Research Centre, Foothills Medical Centre, Calgary, AB, Canada, 3Electrical and Computer Engineering, Hotchkiss Brain Institute, University of Calgary, Calgary, AB, Canada, 4General Electric Healthcare, Calgary, AB, Canada

A deep learning approach was used to estimate a vascular function (VF) from dynamic contrast-enhanced magnetic resonance images. Our model generalized well to unseen data and achieved good overall performance without requiring manual intervention or major preprocessing steps.

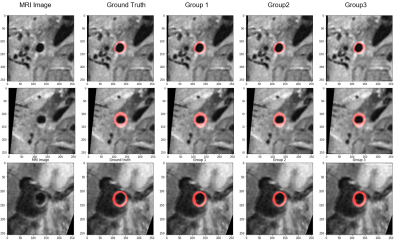

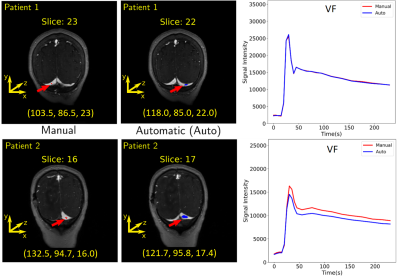

Estimation of the region and the VF for two patients. The first column shows where the manual region was drawn; the coordinates of its center of mass (in voxels) are given below each image. The second column shows the predicted region. Different regions over the transverse sinus can yield similar vascular functions, as illustrated in the plots in the third column. Plots: red = manual VF, blue = predicted VF.

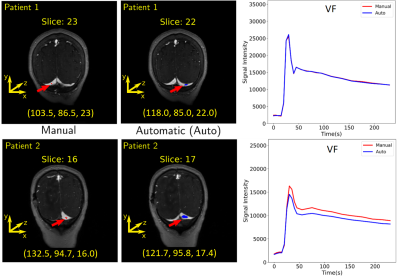

A region of interest is selected manually over the transverse sinus (left) to estimate the VF (right). To compute the VF, the region was propagated across the dynamic T1-weighted images and the mean pixel intensity within it was computed at each time point. The mean (black line) and standard deviation (red shaded region) of the 155 VF curves are shown in the right image.
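The VF computation described in this caption can be sketched in NumPy: the same boolean ROI is applied to every frame of the dynamic series and averaged, and a set of curves is then summarised by its point-wise mean and standard deviation. The array layout (time first) and the use of the sample standard deviation (`ddof=1`) are assumptions.

```python
import numpy as np


def vascular_function(dynamic: np.ndarray, roi: np.ndarray) -> np.ndarray:
    """Mean intensity inside `roi` at each time point.

    dynamic: array of shape (T, ...) holding the dynamic T1-weighted images.
    roi:     boolean array with shape dynamic.shape[1:], reused for every frame.
    """
    return dynamic[:, roi].mean(axis=1)


def summarize_curves(curves) -> tuple:
    """Point-wise mean and sample standard deviation across a set of VF curves."""
    curves = np.asarray(curves, dtype=float)
    return curves.mean(axis=0), curves.std(axis=0, ddof=1)
```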

-

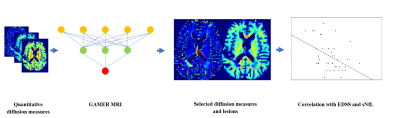

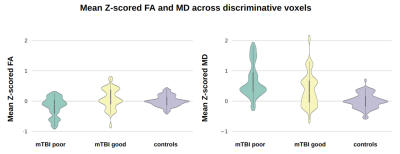

Pattern-based features extraction algorithm in the diagnosis of neurodegenerative diseases from diffusion MRI

Sung-han Lin1, Chih-Chien Tsai1, Yi-Chun Chen2,3, and Jiun-Jie Wang1

1Department of Medical Imaging and Radiological Sciences, Chang-Gung University, TaoYuan, Taiwan, 2Department of Neurology, Chang Gung Memorial Hospital Linkou Medical Center, TaoYuan, Taiwan, 3College of Medicine, Chang Gung University, TaoYuan, Taiwan

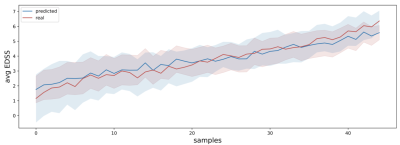

The current study developed a novel feature extraction algorithm based on the pathological changes of the disease and on the spatial information of the disease-affected pattern and its surrounding regions. The newly extracted features showed improved diagnostic accuracy, especially for MCI patients.

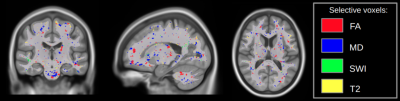

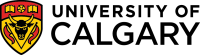

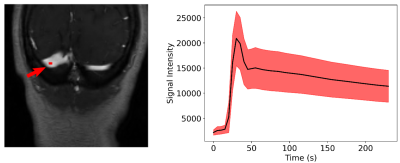

Figure 1. Flowchart of feature extraction. For each of the four DTI-derived indices in each subject, the feature extraction procedure is divided into the primary features from each anatomical region in the brain (panel A) and the secondary feature set (panel B), which was derived from the disease-affected pattern. The secondary features were calculated as the product of the value difference and the distance between two primary features. Only features that were involved in the disease-affected pattern and passed the neighborhood selection were selected.

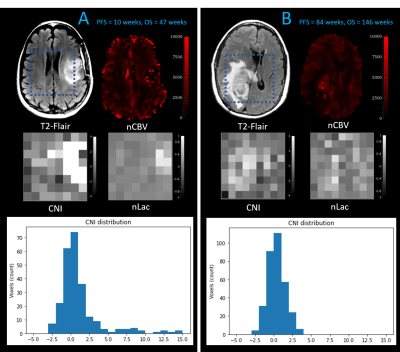

Figure 3. The selected secondary feature sets for the four DTI-derived indices: mean diffusivity (21 links), fractional anisotropy (37 links), axial diffusivity (31 links), and radial diffusivity (13 links). Blue nodes indicate the anatomical regions in the AAL template. Links between nodes indicate the secondary features; thicker links indicate greater significance among classes.

-

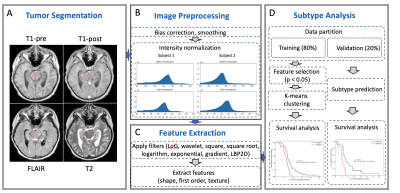

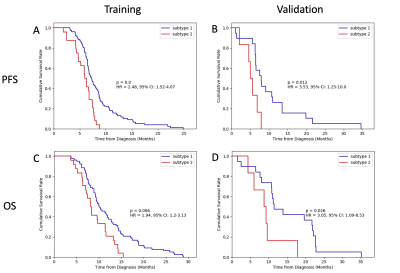

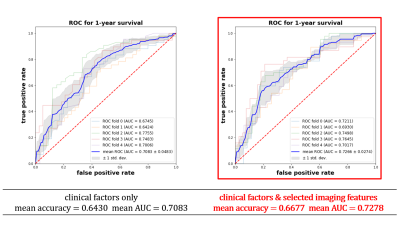

Early prediction of progression free survival and overall survival of patients with glioblastoma using machine learning and multiparametric MRI

Nate Tran1,2, Tracy Luks1, Devika Nair1, Angela Jakary1, Yan Li1, Janine Lupo1, Javier Villanueva-Meyer1, Nicholas Butowski3, Jennifer Clarke3, and Susan Chang3

1Department of Radiology & Biomedical Imaging, University of California, San Francisco, San Francisco, CA, United States, 2UCSF/UC Berkeley Graduate Program in Bioengineering, San Francisco, CA, United States, 3Department of Neurological Surgery, University of California, San Francisco, San Francisco, CA, United States

We trained and tested random forest models using metabolic, perfusion, and diffusion images from both pre-RT and mid-RT scans, and found that not confining these metrics to the anatomical lesion boundaries improved outcome prediction.

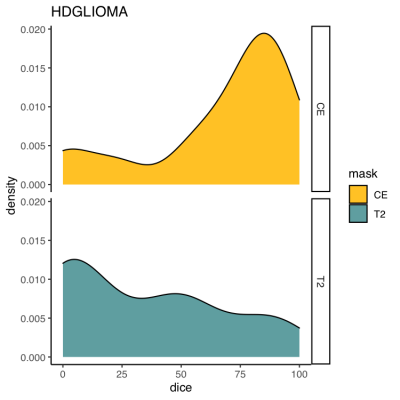

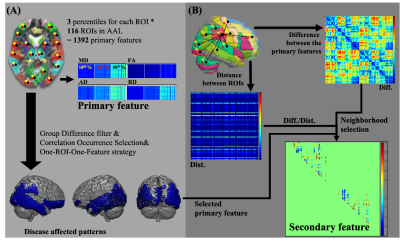

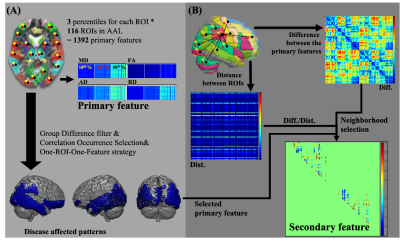

Figure 1: Patient B has a CEL volume of 28.4 cm³, progressed at 84 weeks, and died at 146 weeks. Although Patient A has smaller CEL and T2L volumes (CEL = 10.9 cm³), they progressed much sooner, at 10 weeks, and died at only 47 weeks.

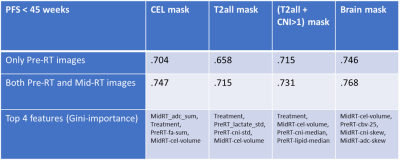

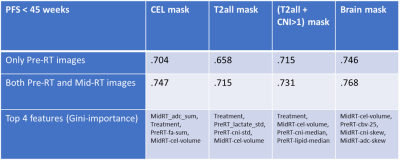

Table 1: Performance of the random forest model in predicting whether OS < 45 weeks for each mask, using pre-RT images only and using both pre-RT and mid-RT images.

-

Exploring Brain Regions Involved in Working Memory using Interpretable Deep Learning

Mario Serrano-Sosa1, Jared Van Snellenberg2, and Chuan Huang2,3

1Biomedical Engineering, Stony Brook University, Stony Brook, NY, United States, 2Psychiatry, Renaissance School of Medicine at Stony Brook University, Stony Brook, NY, United States, 3Radiology, Renaissance School of Medicine at Stony Brook University, Stony Brook, NY, United States

We have developed an interpretable deep learning algorithm that predicts working memory scores from 2-back fMRI data and produces averaged saliency maps highlighting the regions most predictive of working memory scores.

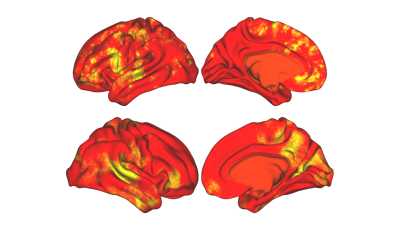

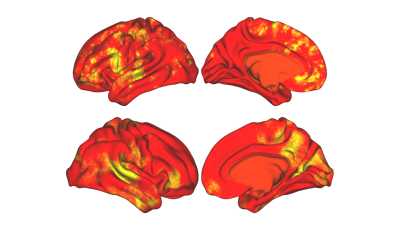

Figure 3: Averaged saliency maps obtained after training and optimizing the CNN to predict WM subconstruct scores.

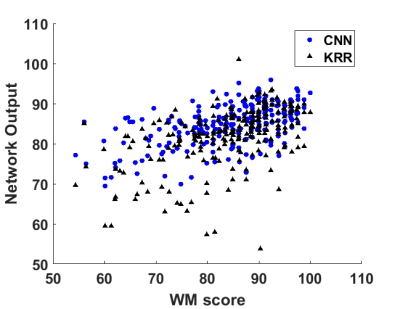

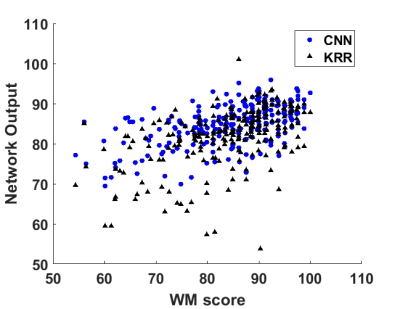

Figure 2: Network outputs of the CNN and KRR versus ground-truth WM scores. Blue dots are CNN outputs and black triangles are KRR outputs.
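KRR here is presumably kernel ridge regression, a common baseline for predicting scores from imaging features. A NumPy sketch with an RBF kernel follows. The kernel choice, `lam` and `gamma` are hypothetical, and the input rows stand for whatever feature vectors (for example flattened fMRI contrast maps) the baseline used, which the abstract does not specify.

```python
import numpy as np


def rbf_kernel(A: np.ndarray, B: np.ndarray, gamma: float) -> np.ndarray:
    """Gaussian kernel exp(-gamma * ||a - b||^2) between the rows of A and B."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))  # clip tiny negative rounding errors


def krr_fit_predict(X_train, y_train, X_test, lam: float = 1.0, gamma: float = 0.1):
    """Solve (K + lam*I) alpha = y on the training set, then predict K_test @ alpha."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    K = rbf_kernel(X_train, X_train, gamma)
    alpha = np.linalg.solve(K + lam * np.eye(len(X_train)), np.asarray(y_train, dtype=float))
    return rbf_kernel(X_test, X_train, gamma) @ alpha
```

Because the fit reduces to one linear solve, this kind of baseline is cheap to tune, which is one reason it is often set against a CNN as in Figure 2.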

-

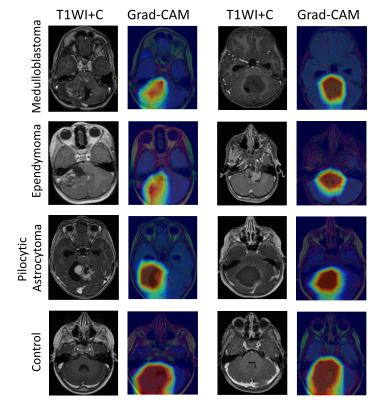

Multi-layer backpropagation of classification information with Grad-CAM to enhance the interpretation of deep learning models

Daphne Hong1 and Yunyan Zhang1

1University of Calgary, Calgary, AB, Canada

Using Grad-CAM, it is feasible to backpropagate classification information into arbitrary layers of convolutional neural networks trained on standard brain MRI. Backpropagation into lower-level layers showed greater localization, and backpropagation into higher-level layers showed greater generalization.
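Grad-CAM weights each feature map of the chosen layer by the spatial average of the class-score gradient and keeps the positive part of the weighted sum. The NumPy sketch below starts from activations and gradients that have already been extracted for one image at one layer (for example `block5_conv3`), in channels-last layout. Obtaining them from a trained network, and upsampling the map to the MRI slice size, are left out.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap from one layer's feature maps.

    activations: (H, W, K) feature maps A^k for a single input image.
    gradients:   (H, W, K) gradients of the class score with respect to A^k.
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(0, 1))                     # alpha_k, one per channel
    cam = np.tensordot(activations, weights, axes=([2], [0]))  # sum_k alpha_k * A^k
    cam = np.maximum(cam, 0.0)                                 # ReLU: keep positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

Comparing layers, as in the heatmaps described here, only requires taking the activations and gradients from a different layer; the weighting step is unchanged.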

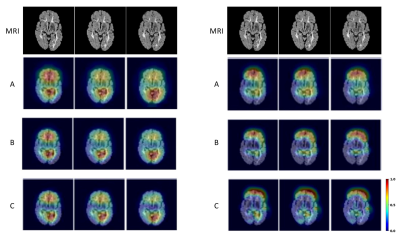

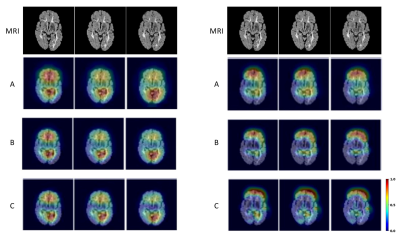

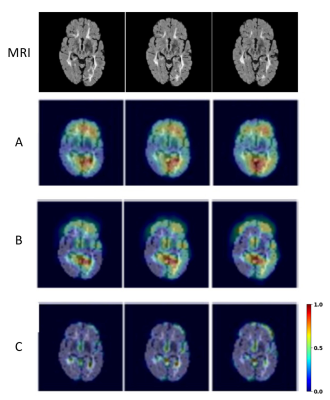

Heatmaps from MRI of an SPMS patient. Shown are FLAIR image slices 57-59 (left to right, top row) out of 135, and corresponding Grad-CAM heatmaps from VGG16 (left) and VGG19 with GAP (right). The CNN layers highlighted in VGG16 with GAP are: A) last convolutional layer – ‘block5_conv3’, B) second last convolutional layer – ‘block5_conv2’, and C) first convolutional layer of the last convolutional block – ‘block5_conv1’; and in VGG19 with GAP: A) last max pooling layer – ‘block5_pool’, B) last convolutional layer – ‘block5_conv4’, and C) second last convolutional layer – ‘block5_conv3’.

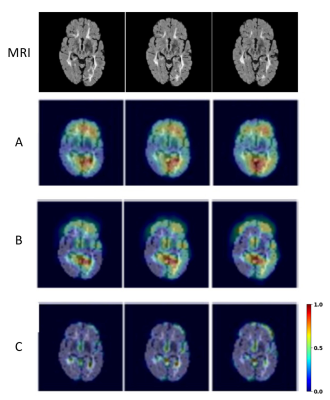

Further heatmaps generated from the brain MRI of the same SPMS patient using VGG19 with GAP. Shown are also MRI slices at 57-59 (left to right, top row) out of 135. The CNN layers highlighted are: A) third last convolutional layer – ‘block5_conv2’, B) first convolutional layer of last convolutional block – ‘block5_conv1’, and C) last convolutional layer of second last convolutional block – ‘block4_conv4’.

-

Improved Outcome prediction in mild Traumatic Brain Injury using Latent Feature Extraction from Volumetric MRI

Sanjay Purushotham1, Ashwathy Samivel Sureshkumar1, Li Jiang2, Shiyu Tang2, Steven Roys2, Chandler Sours Rhodes2,3, Rao P. Gullapalli2, and Jiachen Zhuo2

1Department of Information System, University of Maryland, Baltimore County, Baltimore, MD, United States, 2Diagnostic Radiology and Nuclear Medicine, University of Maryland School of Medicine, Baltimore, MD, United States, 3National Intrepid Center of Excellence, Walter Reed National Military Medical Center, Bethesda, MD, United States

Mild traumatic brain injury (mTBI) accounts for over 70% of all TBI cases, and some patients experience persistent post-concussive symptoms. Here we present a novel method for latent feature extraction from acute volumetric MRI and show how it improved our prediction of 18-month symptoms in patients.

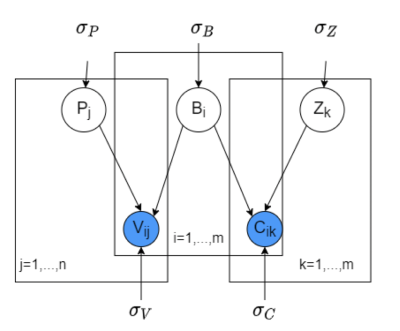

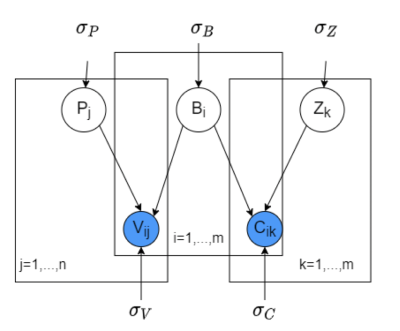

Figure 1: Graphical model for the brain region volumetric matrix factorization. C = {Cik} is the brain region adjacency matrix; B and Z are the latent brain region and factor feature matrices, with Bi and Zk representing brain-region-specific and factor-specific latent feature vectors. Pj represents the latent vector for patient j. Rij represents the volumetric observation (value) of brain region i for patient j.

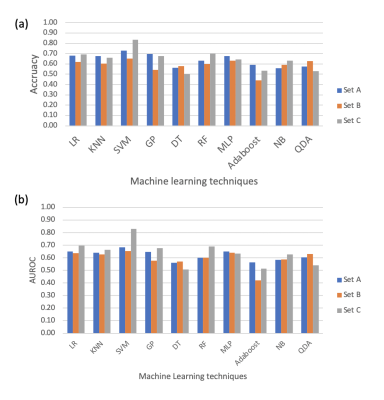

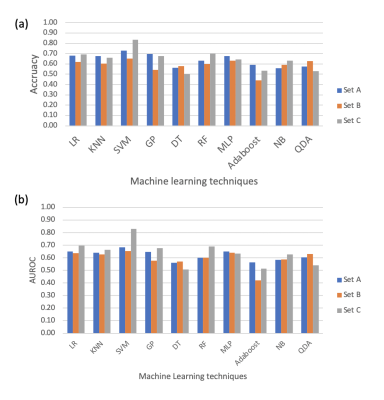

Figure 2: AUROC and Accuracy plots for predicting patient long-term outcome (PCS labels) using different feature sets.

-

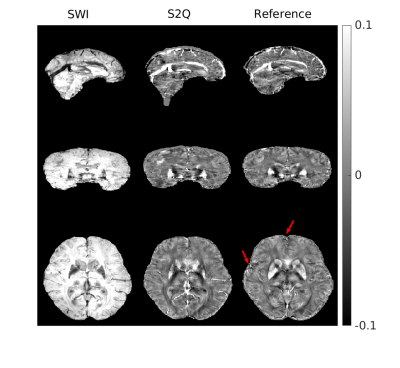

Prediction of iron rim lesions in multiple sclerosis using convolutional neural networks and multi-contrast 7T MRI data

René Schranzer1,2, Steffen Bollmann3, Simon Hametner2, Christian Menard1, Siegfried Trattnig4, Fritz Leutmezer2, Paulus Stefan Rommer2, Thomas Berger2, Assunta Dal-Bianco2, and Günther Grabner1,2,4

1Department of Medical Engineering, Carinthia University of Applied Sciences, Klagenfurt, Austria, 2Department of Neurology, Medical University of Vienna, Vienna, Austria, 3School of Information Technology and Electrical Engineering, The University of Queensland, Brisbane, Australia, 4Department of Biomedical Imaging and Image-guided Therapy, High Field Magnetic Resonance Centre, Vienna, Austria

We developed a neural-network-based pipeline that provides high-quality lesion segmentation and automatic classification of MS lesions based on the presence or absence of an iron rim.

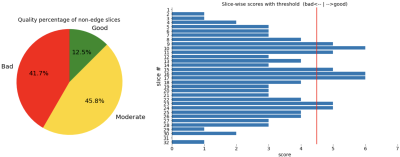

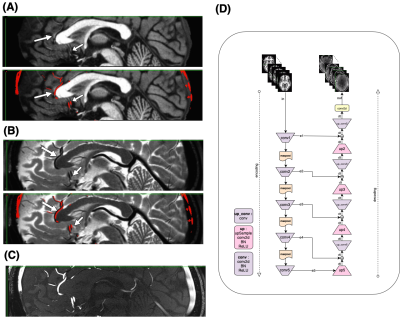

Figure 1: Lesion segmentation and iron classification results of the CNNs from the same slice of one representative MS patient. Top: segmentation comparison for two MS lesions between manual expert labeling (blue) and CNN labeling (red). Bottom: example of a non-iron (left) and an iron (right) lesion classification from the same area as above. A prominent iron rim is visible as hypointense in the SWI image and hyperintense in the QSM image.

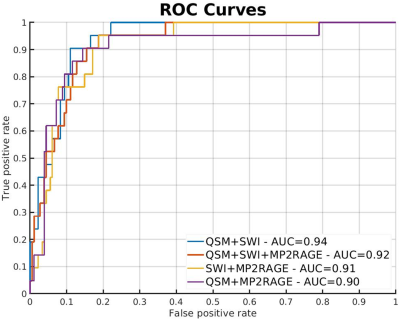

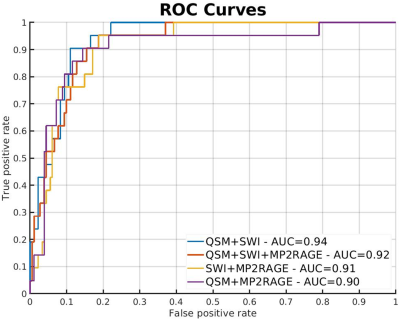

Figure 2: Receiver operating characteristic curves for all network combinations. The graph shows ROC curves, with true-positive rate plotted against false-positive rate, for lesion-wise prediction of iron.
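The area under these ROC curves equals the probability that a randomly chosen positive (iron-rim) lesion receives a higher score than a randomly chosen negative one. A dependency-free Python sketch of the ROC points and this pairwise AUC follows. Labels are assumed to be 1 for iron-rim and 0 for non-iron lesions, and ties count as one half.

```python
from typing import List, Sequence, Tuple


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """(false-positive rate, true-positive rate) at every distinct score threshold."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative lesion")
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of positive-negative pairs ranked correctly (ties = 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative lesion")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```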

-

MRI-ASL Perfusion patterns may predict deep brain stimulation outcome in de novo Parkinson’s Disease

Hanyu Wei1, Le He1, Rongsong Zhou2, Shuo Chen1, Miaoqi Zhang1, Wenwen Chen1, Xuesong Li3, Yu Ma2, and Rui Li1

1Center for Biomedical Imaging Research, Tsinghua University, Beijing, China, 2Department of Neurosurgery, Tsinghua University Yuquan Hospital, Beijing, China, 3School of Computer Science and Technology, Beijing Institute of Technology, Beijing, China

Pre-surgical medication “on” and “off” MR perfusion patterns may predict the DBS outcome in PD patients by machine learning models.

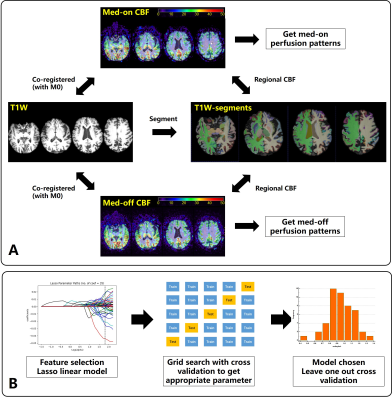

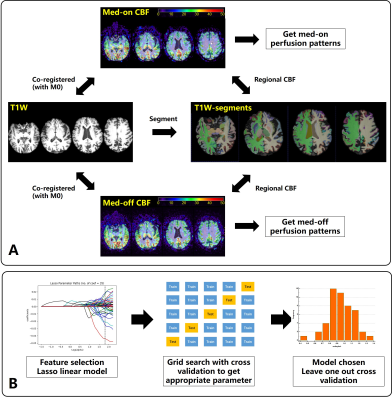

Figure 1: A) Flow chart of the image-analysis pipeline. B) Main procedures of predictive model construction and evaluation.

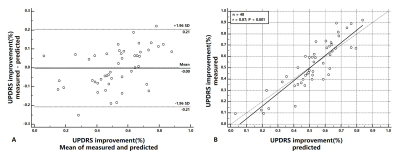

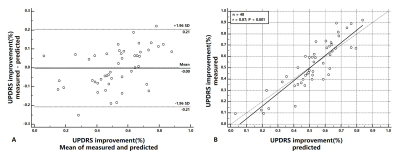

Figure 2: A) Bland-Altman analysis of measured and predicted UPDRS improvement (paired t-test p = 0.94; mean prediction error of 9.0% UPDRS improvement). B) Correlation analysis of measured and predicted UPDRS improvement (r = 0.87, p < 0.001).

-

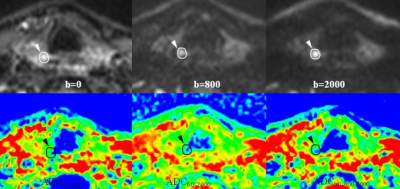

Using MRI and Radiomics to Predict Pain in a Cohort of Trigeminal Neuralgia Patients Treated With Radiosurgery

Kellen Mulford1, Sean Moen2, Andrew W. Grande2, Donald R. Nixdorf3, and Pierre-Francois Van de Moortele1

1Center for Magnetic Resonance Imaging, University of Minnesota, Minneapolis, MN, United States, 2Department of Neurosurgery, University of Minnesota, Minneapolis, MN, United States, 3Department of Diagnostic and Biological Science, University of Minnesota, Minneapolis, MN, United States

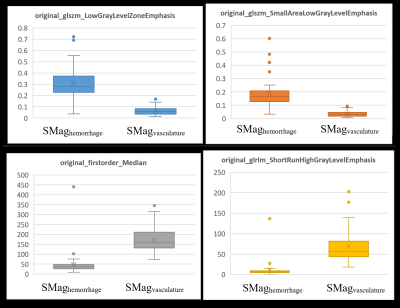

There is a lack of objective measures for diagnosing and classifying trigeminal neuralgia. In this work, we developed a radiomics-based model for predicting whether a nerve was affected by pain.

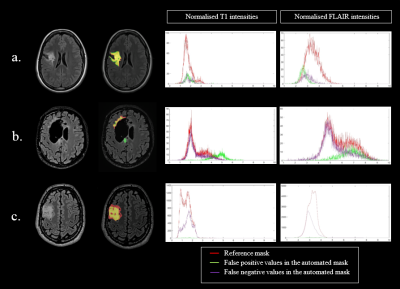

Figure 1: Flowchart detailing the methods used to build the predictive model.

-

Stacked hybrid learning U-NET for segmentation of multiple articulators in speech MRI

Subin Erattakulangara1, Karthika Kelat2, Junjie Liu3, and Sajan Goud Lingala1,4

1Roy J Carver Department of Biomedical Engineering, University of Iowa, Iowa City, IA, United States, 2Government Engineering College Kozhikode, Kozhikode, India, 3Department of Neurology, University of Iowa, Iowa City, IA, United States, 4Department of Radiology, University of Iowa, Iowa City, IA, United States

We propose a stacked hybrid learning U-NET architecture that automatically segments the tongue, velum, and airway in speech MRI. The segmentation accuracy of our stacked U-NET is comparable to that of a manual annotator, and the model segments images at a speed of 0.21 s per image.

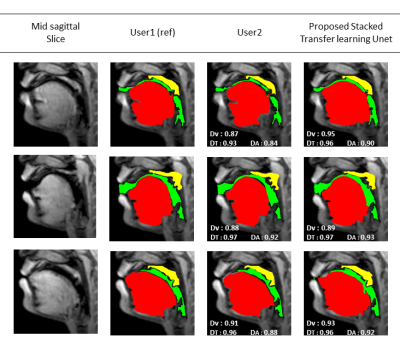

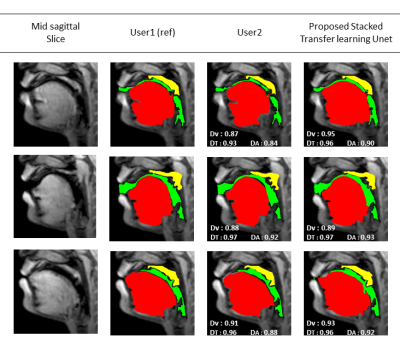

Figure 2: Results of multiple-articulator segmentation on the test data. Three sample postures are shown. The reference segmentation from User1 is compared against the segmentation from User2 and from the proposed stacked transfer-learning-based U-NET. Dice similarities for the tongue (T), airway (A), and velum (V) are embedded. These segmentations demonstrate good quality from the proposed U-NET scheme, with variability in the range of the differences between the User1 and User2 segmentations.

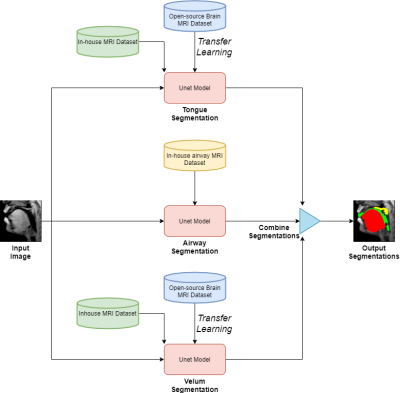

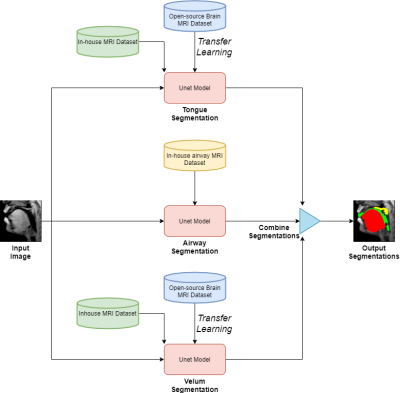

Figure 1: Stacked U-NET architecture with hybrid learning. Each red box represents a U-NET model. The U-NET models for tongue and velum segmentation are pre-trained on an open-source brain MRI dataset [6], and the U-NET model for the airway is trained on an in-house airway MRI dataset. The velum and tongue U-NETs are then trained on an in-house MRI dataset with only a few (~60 images) manually labeled articulator segmentations. The final airway, tongue, and velum segmentation is the concatenation of the individual U-NET outputs.

-

Use scout models for effective dimension reduction and feature selection in radiomics study

Yibo Dan1, Hongyue Tao2, Yida Wang1, Chengxiu Zhang1, Chenglong Wang1, Shuang Chen2, and Guang Yang1

1Shanghai Key Laboratory of Magnetic Resonance, East China Normal University, Shanghai, China, 2Department of Radiology, Huashan Hospital, Fudan University, Shanghai, China

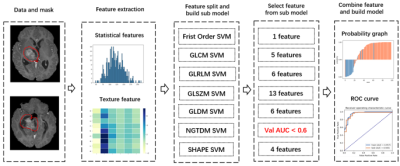

We proposed a heuristic method for effective dimension reduction and feature selection, which builds scout models for each category of features to select useful features for final model building.
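The per-category idea can be outlined as follows: features are grouped by category, a small model ranks the features within each category, and the top-ranked features from every category are pooled for the final model. The abstract does not describe the scout models themselves, so this Python sketch substitutes a simple absolute Pearson correlation ranking as a hypothetical stand-in. `n_per_category` reflects the note that the number of selected features per category differs between datasets.

```python
import math
from typing import Dict, List, Sequence


def abs_pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Absolute Pearson correlation; 0.0 if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return abs(sxy / math.sqrt(sxx * syy))


def scout_select(categories: Dict[str, Dict[str, Sequence[float]]],
                 labels: Sequence[float],
                 n_per_category: Dict[str, int]) -> List[str]:
    """Rank features within each category (stand-in scout) and pool the top ones."""
    selected: List[str] = []
    for cat, feats in categories.items():
        ranked = sorted(feats, key=lambda name: abs_pearson(feats[name], labels), reverse=True)
        selected.extend(ranked[: n_per_category.get(cat, 1)])
    return selected
```

Ranking within each category before pooling keeps a large category from crowding out small ones, which is the motivation the summary gives for building a separate scout model per category.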

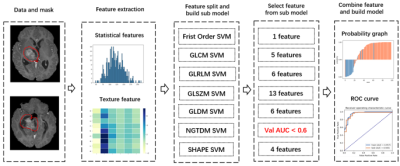

Figure 1. Flowchart of the modeling process for the BraTS 2019 dataset. The pipeline for the CAI dataset is similar except for the number of selected features from each category.

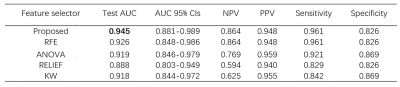

Table 1. Comparison of the performance of the proposed approach with classic feature selectors on the BraTS 2019 dataset.

-

EVALUATION OF A CONVOLUTIONAL NEURAL NETWORK FOR AUTOMATED SEGMENTATION OF LOW-GRADE GLIOMAS

Margaux Verdier1,2, Justine Belko1, Jeremy Deverdun1, Nicolas Menjot de Champfleur1,3, Thomas Troalen2, Bénédicte Maréchal4,5,6, Emmanuelle Le Bars1, and Till Huelnhagen4,5,6

1I2FH, Neuroradiology, CHU Montpellier, Montpellier University, Montpellier, France, 2Siemens Healthcare, Saint Denis, France, 3Laboratoire Charles Coulomb, University of Montpellier, Montpellier, France, 4Advanced Clinical Imaging Technology, Siemens Healthcare AG, Lausanne, Switzerland, 5LTS5, Ecole Polytechnique Fédérale de Lausanne, Lausanne, Switzerland, 6Radiology Department, Lausanne University Hospital and University of Lausanne, Lausanne, Switzerland

A convolutional neural network correctly segments low-grade gliomas using common clinical T1 and T2-FLAIR sequences, facilitating efficient evaluation of tumor growth. Segmentation errors can occur when gliomas show strongly heterogeneous intensity patterns.

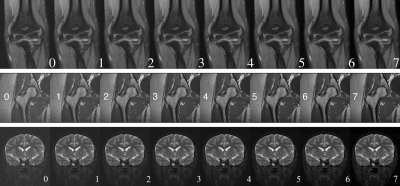

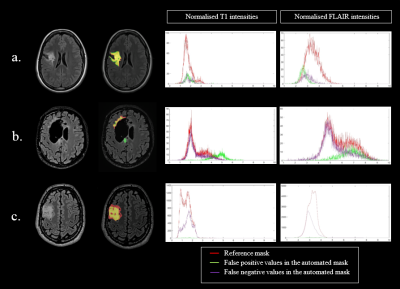

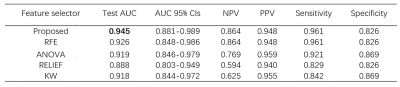

Figure 3: Three different profiles (left column) with the native FLAIR images and the segmentation masks overlaid on FLAIR images; reference mask in red, automated mask in green, and common area in yellow. Corresponding histograms of the normalized T1 and FLAIR signal intensities with the manual mask (red), false-positive values in the automated mask (green), and false-negative values in the automated mask (purple). a: best automated segmentation; b: poorest automated segmentation; c: moderate automated segmentation.

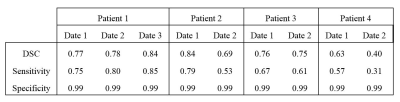

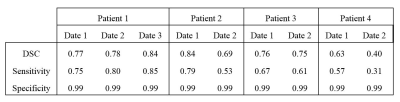

Table 2: Performance of the automated tumor segmentation in the test patients.

-

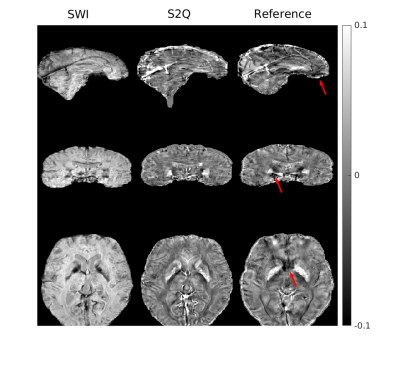

IMPROVING THE CONTRAST OF CEREBRAL MICROBLEEDS ON T2*-WEIGHTED IMAGES USING DEEP LEARNING

Ozan Genc1, Sivakami Avadiappan1, Yicheng Chen2, Christopher Hess1, and Janine M. Lupo1

1Department of Radiology and Biomedical Imaging, University of California San Francisco, San Francisco, CA, United States, 2Facebook Inc., Mountain View, CA, United States

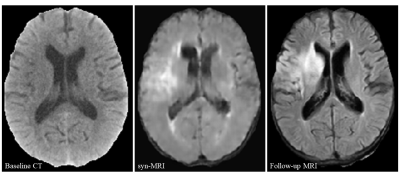

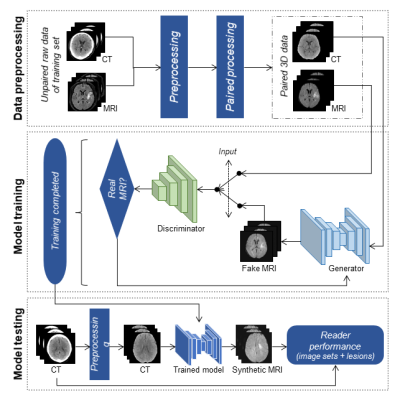

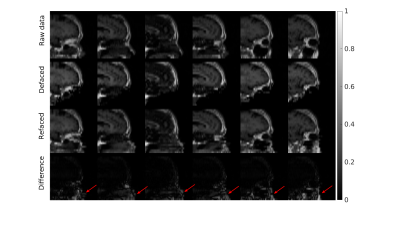

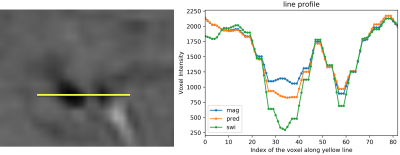

Synthetic SWI data was generated from T2* magnitude images using an LSGAN deep learning model. Findings suggest that our deep learning model is able to improve microbleed contrast on T2* magnitude images.

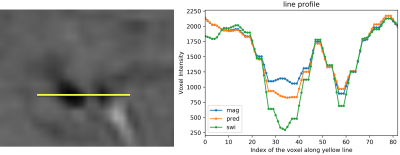

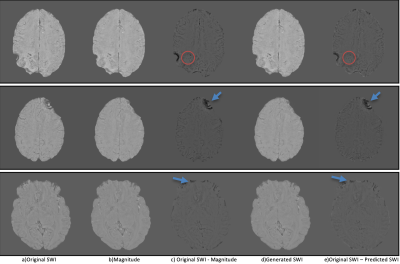

On the left, two CMBs are shown on a SWI image. On the right, voxel intensities of original SWI (green), predicted SWI (orange) and magnitude image (blue) along the yellow line are shown.

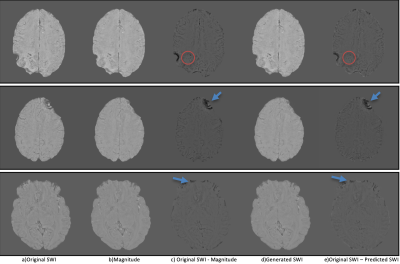

(a) Original SWI, (b) magnitude image, (c) difference image of original SWI and magnitude image, (d) predicted SWI, (e) difference image of original SWI and predicted SWI. Red circles show CMBs in difference images. Blue arrows show phase artifacts in difference images.

-

Automatic Prediction of MGMT and IDH Genotype for Gliomas from MR Images via Multi-task Deep Learning Network

Xiaoyuan Hou1,2, Hui Zhang1,2, Yan Tan3, Zhenchao Tang1,2, Hui Zhang3, and Jie Tian1,2

1Beijing Advanced Innovation Center for Big Data-Based Precision Medicine (BDBPM), Beihang University, 100083, Beijing, China, 2Key Laboratory of Molecular Imaging, Institute of Automation, Chinese Academy of Sciences, 100190, Beijing, China, 3Department of Radiology, First Clinical Medical College, Shanxi Medical University, 030001, Taiyuan, China

We found that the proposed multi-task learning model was effective in predicting multiple glioma genotypes preoperatively from MR images. This indicates that the multi-task learning model reached the level of state-of-the-art machine learning methods in predicting genotype.

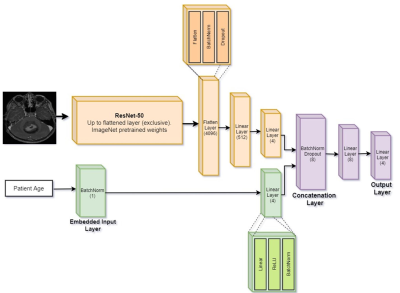

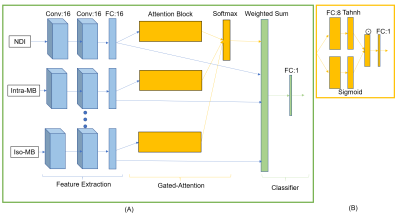

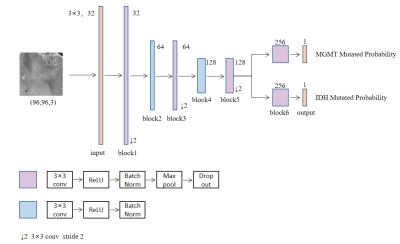

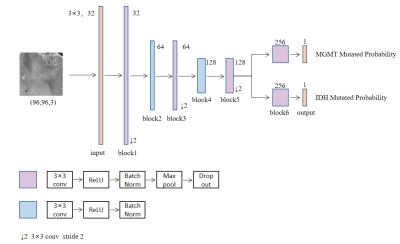

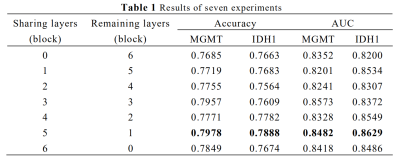

Figure 1. Best-performing multi-task learning model for predicting multiple glioma genotypes preoperatively from MR images. The number beside each convolution block is the number of convolution kernels.

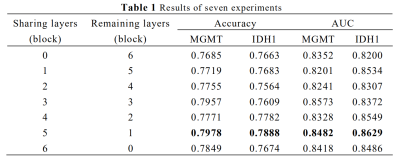

AUC: area under the receiver operating characteristic curve. Sharing blocks: the number of blocks shared jointly by the different branches. Remaining blocks: the number of blocks owned separately by each branch.